Clemson University

TigerPrints

All Dissertations

5-2023

How to Make Agents and Influence Teammates: Understanding the Social Influence AI Teammates Have in Human-AI Teams

Christopher Flathmann

Clemson University


Recommended Citation

Flathmann, Christopher, "How to Make Agents and Influence Teammates: Understanding the Social Influence AI Teammates Have in Human-AI Teams" (2023). All Dissertations. 3339.

https://tigerprints.clemson.edu/all_dissertations/3339

This Dissertation is brought to you for free and open access by the Dissertations at TigerPrints. It has been accepted for inclusion in All Dissertations by an authorized administrator of TigerPrints.

How to Make Agents and Influence Teammates:

Understanding the Social Influence AI

Teammates Have in Human-AI Teams

A Dissertation

Presented to

the Graduate School of

Clemson University

In Partial Fulfillment

of the Requirements for the Degree

Doctor of Philosophy

Human Centered Computing

by

Christopher Flathmann

May 2023

Accepted by:

Dr. Nathan McNeese, Committee Chair

Dr. Brian Dean

Dr. Eileen Kraemer

Dr. Brygg Ullmer

Dr. Laine Mears

Abstract

The introduction of computational systems in the last few decades has enabled humans to cross geographical, cultural, and even societal boundaries. From telephones to file sharing, new technologies have continuously enabled humans to work together more effectively. Among these technologies, Artificial Intelligence (AI) has some of the greatest potential. Although AI serves a multitude of functions within teaming, such as improving information sciences and analysis, one specific application that has become a critical topic in recent years is the creation of AI systems that act as teammates alongside humans, in what is known as a human-AI team.

However, as AI transitions into teammate roles, it will gain new responsibilities and abilities, which ultimately give it greater influence over teams’ shared goals and resources, otherwise known as teaming influence. Moreover, this increase in teaming influence will provide AI teammates with a level of social influence. Unfortunately, while research has observed the impact of teaming influence by examining humans’ perceptions and performance, an explicit understanding of the social influence that facilitates long-term teaming change has yet to be developed. This dissertation uses three studies to create a holistic understanding of the underlying social influence that AI teammates possess.

Study 1 establishes the fundamental existence of AI teammate social influence and how it relates to teaming influence. Qualitative data demonstrate that social influence is naturally created as humans actively adapt to AI teammate teaming influence. Furthermore, mixed-methods results demonstrate that the alignment of AI teammate teaming influence with a human’s individual motives is the most critical factor in the acceptance of AI teammate teaming influence in existing teams.

Study 2 further examines the acceptance of AI teammate teaming and social influence, as well as how the design of AI teammates and humans’ individual differences can impact this acceptance. The findings of Study 2 show that, on a single task, humans most readily accept AI teammate teaming influence that is comparable to their own teaming influence, but across multiple tasks, acceptance of AI teammate teaming influence generally decreases as that teaming influence increases. Additionally, coworker endorsements are shown to increase the acceptance of high levels of AI teammate teaming influence, and humans who perceive technology in general to be more capable are potentially more likely to accept AI teammate teaming influence.

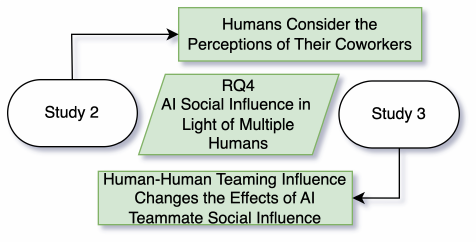

Finally, Study 3 explores how the teaming and social influence possessed by AI teammates change when they are placed in a team that also contains teaming influence from multiple human teammates, meaning that social influence between humans is also present. Results demonstrate that AI teammate social influence can drive humans to prefer and observe their human teammates over their AI teammates, but humans’ behavioral adaptations are centered more around their AI teammates than their human teammates. These effects demonstrate that AI teammate social influence retains its potency in the presence of human-human teaming and social influence, but its effects differ depending on whether it impacts perception or behavior.

These three studies fill a currently underserved research gap in human-AI teaming: understanding both AI teammate social influence and humans’ acceptance of it. In addition, each study conducted within this dissertation synthesizes its findings and contributions into actionable design recommendations that will serve as foundational design principles for supporting the initial acceptance of AI teammates within society. Therefore, not only will the research community benefit from the results discussed throughout this dissertation, but so too will the developers, designers, and human teammates of human-AI teams.

Dedication

Dedicated to Harrison,

one of the best friends someone could have.

Hey Harrison, I simply wanted to use this time to update you on some things that have been going on, as I haven’t gotten the chance to in almost a year. First, Kelsea and I are doing great. I know you always had your doubts, but I appreciate how you always had faith and hope this would pull through, even if you didn’t get to meet her. Second, One Piece is apparently entering the final arc, which means we should hopefully find out what the One Piece is within the next few years. The manga is getting really good right now, and it turns out that Luffy is actually a sun god, so that’s pretty cool. Attack on Titan still hasn’t finished, and MAPPA is milking that for every penny. Elden Ring turned out to be insanely good, and you would have loved it. I wish you could have been here so we could have enjoyed these things together, but I hope to be able to enjoy these things for both of us in the future. You truly were one of the best friends someone could have had. RIP in pepperoni.

Stop counting only those things you have lost. What is gone, is gone. So ask yourself this. What is there that still remains to you? - Eiichiro Oda, Adapted Translation

Acknowledgments

First, I’d like to thank my advisor, Nathan J. McNeese, who has been with me for every step of this Ph.D. Were it not for your mentorship, I would not be the researcher or person that I am today. Whether I need help in my work or personal life, I feel that I can always ask a question, expect an answer, and move forward confidently when working with you. I also feel you are an advisor who grows alongside your students, and you continue to learn, adapt, and grow with each new person you welcome into our world. At the end of the day, I am happy that you are someone I can grow alongside due to our relentless desire to push each other.

To my dissertation committee, Brian Dean, Laine Mears, Eileen Kraemer, and Brygg Ullmer, I would like to thank you for your time and expertise throughout this process. Moreover, I would like to thank each of you for being influential at different points in my academic career. Brian Dean, thank you for helping me grow as an undergraduate student, a teacher, a researcher, and a person; you have been a strong influence for almost a decade now. Laine Mears, thank you for helping me during the formative years of my degree by always asking how my research leaves the lab, which has helped me become the applied researcher that I am today. Eileen Kraemer, thank you for your continued service and expertise through a variety of challenges that I have faced, both from a research and a degree perspective. Finally, Brygg Ullmer, thank you for not only providing expertise but also always asking the most entertaining questions. Once again, I appreciate each of you in a unique way, and this journey has been a delight because of the relationships we have been able to build.

As an aside, I would also like to extend my gratitude to the staff at Clemson’s School of Computing. Everyone there has been fantastic in helping me transition between degrees and jobs. I’d especially like to thank Kaley Allen and Adam Rollins for making sure I can make rent each month.

It is also important that I acknowledge my colleagues who work with me on a daily basis. The TRACE Research Group has helped me both in my day-to-day work and in my life. I feel that we have built a family within Clemson, and we can continue to trust and push each other every day. I cannot wait to see what you all do.

I would also like to thank those in TRACE who have been with me for years now. First, I would like to thank our former TRACE member, Lorenzo Barberis Canonico, as he helped me gain momentum in this program. I would like to thank Beau Schelble for essentially going through this entire program with me and always having my back when I needed it. Rui Zhang, you have been a joy to work with, and it has been an honor to grow with you over the years. Finally, I would like to thank Rohit Mallick for stepping up and taking the torch as the senior members of our group move on to the next stages of their lives. I truly believe that not just these specific students but all TRACE students stand to be major forces of change in the world, and I cannot wait to see what we are able to accomplish.

To my friends, I would like to extend a very warm thank you. A lot of us have moved on with our lives, and I’m happy to see all of you thriving. Wayne, Nancy, Harrison, and Will, thank you for always being the first ones to show up and the last ones to leave. Most notably, I would like to thank Wayne for watching every DCOM with me; I thought this dissertation would be the hardest thing I ever accomplished, but that run definitely gives it some competition. Wayne, I guess if you want to finally know what I do for a job, feel free to read this document. Also, thank you, Caitlin Lancaster, for being a good roommate and friend for these final moments of my degree. The companionship you all provided, and continue to provide, has made this experience not only bearable but enjoyable.

And last but not least, I would like to thank those closest to me. I would like to say thank you and I love you to my parents, Tod and Connie. You may not always understand what I do, but I know you have always been there to push me forward and support whatever random opportunity I decide to pursue. Whether it was my undergraduate degree, graduate degree, career pursuits, or my collection of disjointed hobbies, I feel you have always been behind me. I could not, and probably would not, have done this if it were not for the two of you.

To Zack and Rachel, I would like to thank you for helping throughout this process by always providing some sort of distraction. Whether it is random dinner invites, trips, or simply getting ice cream, your companionship and support have helped alleviate whatever stress this job has put on me, or that I have put on myself.

And finally, I would like to thank my partner, Kelsea. You truly are the main reason I move forward at this point. You have not only helped me along through this process, but you have also enriched my life in a way that I did not know was possible. I cannot wait to build a life with you, and I cannot wait to help you through this process as well.

A new age is coming. An age of daring and mighty. And no one can turn back. - Eiichiro Oda, Translated

Table of Contents

Title Page

Abstract

Dedication

Acknowledgments

List of Tables

List of Figures

1 Introduction and Overview of Dissertation

1.1 Overview

1.2 Problem Motivation

1.3 Research Motivation

1.4 Research Questions and Gaps

1.5 Summary of Studies

1.6 Conclusion

2 Background

2.1 Human-AI Teamwork

2.2 Human-Centered Artificial Intelligence and Designing for Artificial Intelligence Acceptance

2.3 Social Influence in Teamwork

2.4 Conclusion

3 Platform Selection

3.1 Rocket League

4 Study 1: Using Teaming Influence to Create a Foundational Understanding of Social Influence in Human-AI Dyads

4.1 Study 1: Overview

4.2 Study 1a & 1b: Task

4.3 Study 1a & 1b: Participants and Demographics

4.4 Study 1a & 1b: Measurements

4.5 Study 1a: Overview and Research Questions

4.6 Study 1a: Experimental Design

4.7 Study 1a: Results

4.8 Study 1a: Discussion

4.9 Study 1b: Overview and Research Questions

4.10 Study 1b: Qualitative Methods

4.11 Study 1b: Results

4.12 Study 1b: Discussion

5 Study 2: Examining the Acceptance and Nuance of AI Teammate Teaming Influence From Both Human and AI Teammate Perspectives

5.1 Study 2: Overview

5.2 Study 2a: Research Questions

5.3 Study 2a: Methods

5.4 Study 2a: Experimental Results

5.5 Study 2b: Research Questions

5.6 Study 2b: Methods

5.7 Study 2b: Experimental Results

5.8 Study 2: Individual Differences Results

5.9 Discussion

6 Study 3: Understanding the Creation of AI Teammate Social Influence in Multi-Human Teams

6.1 Overview

6.2 Methods

6.3 Study 3: Quantitative Results

6.4 Study 3: Qualitative Results

6.5 Study 3: Discussion

7 Final Discussions & Conclusion

7.1 Revisiting Research Questions

7.2 Contributions of the Dissertation

7.3 Future Work

7.4 Closing Remarks

Appendices

A Surveys

B Study 2 Vignettes

Bibliography

x

List of Tables

1.1 Research Questions

1.2 Research Gaps Being Closed By Research Questions

1.3 Studies that Address Each Research Question

3.1 Potential Platforms

4.1 Study 1 Participant Demographics

4.2 Study 1 2x2 experimental design.

4.3 Descriptive statistics for score.

4.4 Descriptive statistics for score difference.

4.5 Descriptive statistics for workload.

4.6 Descriptive statistics for perceived social influence in comparison to the AI teammate.

5.1 Study 2a Demographic Information

5.2 Study 2a Experimental Manipulations, creating a 7x2 experimental design. Manipulation 1 is a within-subjects manipulation with seven conditions presented in a randomized order. Manipulation 2 is a between-subjects manipulation with two conditions randomly assigned to participants.

5.3 Post-Scenario questions shown after each vignette. Questions were

provided a seven-point Likert scale, Strongly disagree ⇐⇒ Strongly

Agree. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 165

5.4 Linear model for effects of conditions on the perceived capability of AI

to complete workload. Each model is built upon and compared to the

one listed above it. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 167

5.5 Linear model for effects of conditions on potential helpfulness. Each

model is built upon and compared to the one listed above it. . . . . . 168

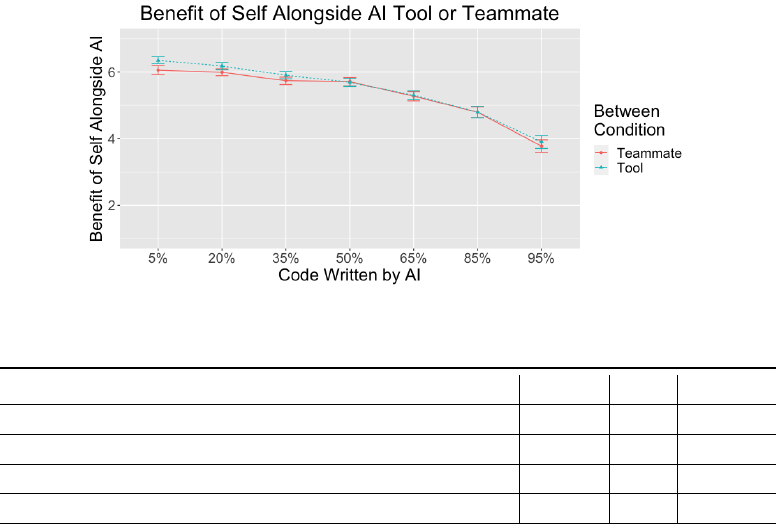

5.6 Linear model for effects of conditions on one’s own perceived benefit.

Each model is built upon and compared to the one listed above it. . . 169

5.7 Linear model for effects of conditions on job security. Each model is

built upon and compared to the one listed above it. . . . . . . . . . . 170

5.8 Linear model for effects of conditions on likelihood to adopt. Each

model is built upon and compared to the one listed above it. . . . . . 172

5.9 Study 2b Demographic Information . . . . . . . . . . . . . . . . . . . 177

xi

5.10 Study 2b: Tasks that need to be completed by software developers and

are assigned to teammates in surveys. . . . . . . . . . . . . . . . . . . 180

5.11 Study 2b experimental manipulations. Manipulation 1 varies the num-

ber of tasks completed by the AI teammate, and in turn the human

participant. Manipulation 2 varies the endorsement provided to en-

courage AI teammate adoption. The descriptions in Manipulation 2

are not the full bullet point list showed to participants. . . . . . . . . 181

5.12 Linear model for effects of responsibility and capability endorsement

on capability of AI teammate. Each model is built upon and compared

to the one listed above it. . . . . . . . . . . . . . . . . . . . . . . . . 185

5.13 Table of the Selected Model’s Fixed Effects of Responsibility, Capabil-

ity Endorsement Methods, and Interactions on the Perceived Capabil-

ity of the AI Teammate. Effect sizes shown for significant effects and

effects that neared significance. . . . . . . . . . . . . . . . . . . . . . 185

5.14 Linear model for effects of responsibility and capability endorsement on

helpfulness of AI teammate. Each model is built upon and compared

to the one listed above it. . . . . . . . . . . . . . . . . . . . . . . . . 187

5.15 Table of the Selected Model’s Fixed Effects of Responsibility, Capabil-

ity Endorsement, and Interactions on AI Helpfulness. Effect size only

shown for significant effects. . . . . . . . . . . . . . . . . . . . . . . . 187

5.16 Linear model for effects of responsibility and capability endorsement

on helpfulness of one’s self. Each model is built upon and compared

to the one listed above it. . . . . . . . . . . . . . . . . . . . . . . . . 188

5.17 Table of the Selected Model’s Fixed Effect of Responsibility on Help-

fulness of Self. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 188

5.18 Linear model for effects of responsibility and capability endorsement

on job security. Each model is built upon and compared to the one

listed above it. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 190

5.19 Table of the Selected Model’s Fixed Effects of Responsibility and Ca-

pability Endorsement on Job Security. Effect size only shown for sig-

nificant effects. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 190

5.20 Linear model for effects of responsibility and capability endorsement

on likelihood to adopt. Each model is built upon and compared to the

one listed above it. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 191

5.21 Table of the Selected Model’s Fixed Effects of Responsibility, Capabil-

ity Endorsement, and Interactions on Adoption Likelihood. Effect size

only shown for significant effects. . . . . . . . . . . . . . . . . . . . . 191

5.22 Model Comparisons and Coefficients for AI Helpfulness . . . . . . . . 194

5.23 Model Comparisons and Coefficients for One’s Own Perceived Benefit 195

5.24 Model Comparisons and Coefficients for Perceived Job Security . . . 196

5.25 Model Comparisons and Coefficients for Perceived AI Capability . . . 197

5.26 Model Comparisons and Coefficients for Adoption . . . . . . . . . . . 198

xii

6.1 Study 3 2x3 experimental design. . . . . . . . . . . . . . . . . . . . . 214

6.2 Study 3 Demographic Information . . . . . . . . . . . . . . . . . . . . 217

6.3 Human-Machine-Interaction-Interdependence Subscales . . . . . . . . 220

6.4 Marginal means for the effects of AI count and teammate identity on

perceived performance. . . . . . . . . . . . . . . . . . . . . . . . . . . 224

6.5 Marginal means for the effects of AI count and teammate identity on

perceived performance. . . . . . . . . . . . . . . . . . . . . . . . . . . 226

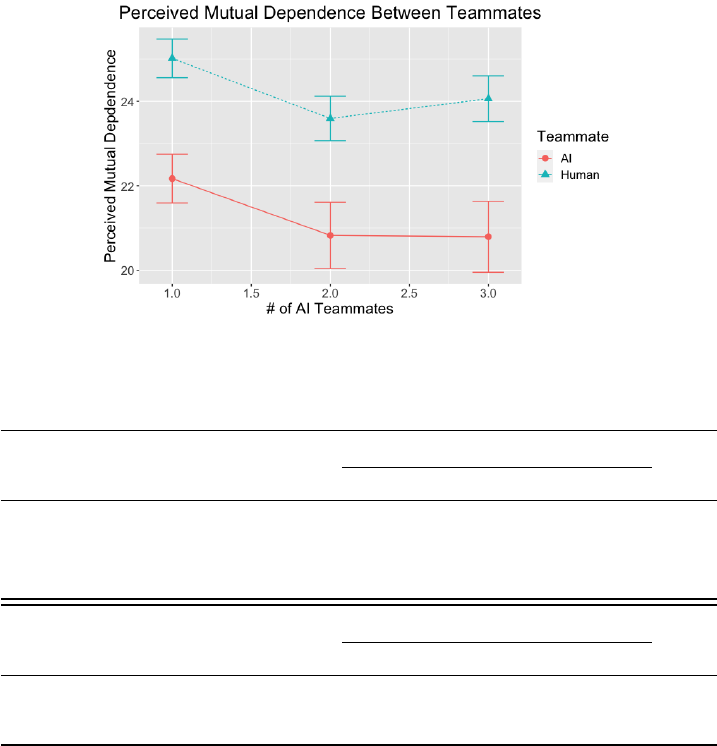

6.6 Marginal means for the effects of AI count and teammate identity on

perceived mutual dependence. . . . . . . . . . . . . . . . . . . . . . . 227

6.7 Marginal means for the effects of training type and teammate identity

on perceived conflict. . . . . . . . . . . . . . . . . . . . . . . . . . . . 229

6.8 Marginal means for the effects of number of AI teammates and team-

mate identity on perceived power compared to others. . . . . . . . . . 230

6.9 Marginal means for the effects of number of AI teammates and team-

mate identity on perceived future interdependence from the teammate

to one’s self. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 232

6.10 Marginal means for the effects of number of AI teammates on perceived

future interdependence from one’s self to teammates. . . . . . . . . . 234

6.11 Marginal means for the effects of teammate identity on on perceived

information certainty from one’s self to their teammates. . . . . . . . 238

6.12 Marginal means for the effects of the number of AI teammates on

perceived workload. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 239

6.13 Marginal means for the effects of the number of AI teammates on AI

teammate acceptance. . . . . . . . . . . . . . . . . . . . . . . . . . . 240

A.1 Study 1 Demographics . . . . . . . . . . . . . . . . . . . . . . . . . . 298

A.2 Negative Attitudes Towards Agents Survey . . . . . . . . . . . . . . . 298

A.3 Disposition to Trust Artificial Teammate Survey . . . . . . . . . . . . 299

A.4 Teammate Performance Survey . . . . . . . . . . . . . . . . . . . . . 300

A.5 Teammate Trust Survey . . . . . . . . . . . . . . . . . . . . . . . . . 300

A.6 Team Effectiveness Survey . . . . . . . . . . . . . . . . . . . . . . . . 301

A.7 Team Workload Survey . . . . . . . . . . . . . . . . . . . . . . . . . . 302

A.8 Influence and Power Survey . . . . . . . . . . . . . . . . . . . . . . . 302

A.9 Artificial Teammate Acceptance Survey . . . . . . . . . . . . . . . . . 303

A.10 Study 2 Demographics . . . . . . . . . . . . . . . . . . . . . . . . . . 304

A.11 Need for Power Scale . . . . . . . . . . . . . . . . . . . . . . . . . . . 305

A.12 Motivation to Lead Scale . . . . . . . . . . . . . . . . . . . . . . . . . 307

A.13 Creature of Habit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 308

A.14 Big Five Personality - Mini IPIP . . . . . . . . . . . . . . . . . . . . 310

A.15 Workplace Fear of Missing Out . . . . . . . . . . . . . . . . . . . . . 311

A.16 Cynical Attitudes Towards AI . . . . . . . . . . . . . . . . . . . . . . 311

A.17 General Computer Self-Efficacy . . . . . . . . . . . . . . . . . . . . . 312

xiii

A.18 Computing Technology Continuum of Perspective Scale . . . . . . . . 313

A.19 Human-Machine-Interaction-Interdependence Questionaire . . . . . . 316

B.20 Study 1 Vignette Template . . . . . . . . . . . . . . . . . . . . . . . . 317

B.21 Study 2 Example Vignette. Number of tasks changes as a within sub-

jects condition. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 318

xiv

List of Figures

1.1 Graphic displaying the underexplored role social influence plays in

human-AI teaming during human-AI interaction. A 1-human 1-AI

dyad is shown to reduce figure complexity and increase readability. . 2

1.2 Graphical Representation of Studies . . . . . . . . . . . . . . . . . . . 15

3.1 Rocket League Screen Shot . . . . . . . . . . . . . . . . . . . . . . . . 63

3.2 RL Bot Interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

3.3 RLBot Team Size Modification . . . . . . . . . . . . . . . . . . . . . 65

4.1 Experimental Procedure for Study 1a . . . . . . . . . . . . . . . . . . 83

4.2 AI teaming influence and variability’s effect on participants’ scores dis-

playing the main effect of teaming influence (Figure 4.2a), the inter-

action effect between teaming influence level and variability (Figure

4.2b). Figures also display the three way interaction between round,

teaming influence level, and variability with Figure 4.2c showing the

low AI teaming influence condition and Figure 4.2d showing the high

AI teaming influence condition. Error bars represent 95% confidence

intervals. . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 88

4.3 Interaction effect between AI teaming influence and variability on par-

ticipants’ score difference. Error bars represent 95% confidence intervals. 89

4.4 Main effect of AI teaming influence level on participants’ perceived

workload level (Figure 4.4a) and the main effect of AI teaming influence

on the participants’ perceived level of teaming influence in comparison

to their AI teammate (Figure 4.4b). Error bars represent bootstrapped

95% confidence intervals. . . . . . . . . . . . . . . . . . . . . . . . . . 91

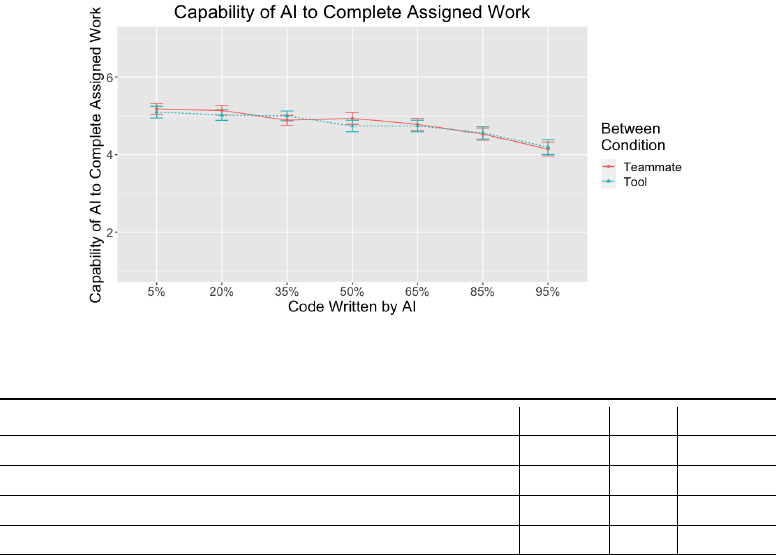

5.1 Figure of the capability of AI system to complete responsibility based

on responsibility and identity. Error bars denote 95% confidence interval.167

5.2 Graph of the potential helpfulness of AI based on responsibility and

identity. Error bars denote 95% confidence interval. . . . . . . . . . 168

5.3 Graph of potential benefit of self alongside AI system based on respon-

sibility and identity. Error bars denote 95% confidence interval. . . . 169

5.4 Figure of job security when working with AI based on responsibility

and identity. Error bars denote 95% confidence interval. . . . . . . . . 170

xv

5.5 Graph of likelihood to adopt AI based on teammate responsibility and

identity. Error bars denote 95% confidence interval. . . . . . . . . . . 172

5.6 Graph of AI capability based on teammate responsibility and capability

endorsement. Error bars denote 95% confidence interval. . . . . . . . 185

5.7 Graph of helpfulness of AI based on teammate responsibility and ca-

pability endorsement. Error bars denote 95% confidence interval. . . . 187

5.8 Graph of helpfulness of self based on teammate responsibility and ca-

pability endorsement. Error bars denote 95% confidence interval. . . . 188

5.9 Graph of job security based on teammate responsibility and capability

endorsement. Error bars denote 95% confidence interval. . . . . . . . 190

5.10 Graph of likelihood to adopt AI based on teammate responsibility and

capability endorsement. Error bars denote 95% confidence interval. . 191

6.1 Figure of task performance based on the number of AI teammates and

whether or not the perception is towards the human or AI teammate.

Error bars denote 95% confidence intervals. . . . . . . . . . . . . . . . 224

6.2 Figure of perceived performance based on the number of AI teammates

and whether or not the perception is towards the human or AI team-

mate. Error bars denote 95% confidence intervals. . . . . . . . . . . . 226

6.3 Figure of perceived mutual dependence based on the number of AI

teammates and whether or not the perception is towards the human

or AI teammate. Error bars denote 95% confidence intervals. . . . . . 227

6.4 Figure of perceived conflict based on training type and whether or

not the perception is towards the human or AI teammate. Error bars

denote 95% confidence intervals. . . . . . . . . . . . . . . . . . . . . . 229

6.5 Figure of perceived power one has compared to others based on the

number of AI teammates and whether or not the perception is towards

the human or AI teammate. Error bars denote 95% confidence intervals.230

6.6 Figure of perceived future interdependence from teammates to one’s

self based on the number of AI teammates and whether or not the

perception is towards the human or AI teammate. Error bars denote

95% confidence intervals. . . . . . . . . . . . . . . . . . . . . . . . . . 232

6.7 Figure of perceived future interdependence from one’s self to team-

mates based on the number of AI teammates. Error bars denote 95%

confidence intervals. . . . . . . . . . . . . . . . . . . . . . . . . . . . . 234

6.8 Figure of perceived future interdependence from one’s self to team-

mates based on the number of AI teammates, teammate identity, and

the training participants had. Error bars denote 95% confidence intervals.236

6.9 Figure of perceived information certainty from one’s self to teammates

based on teammate identity. Error bars denote 95% confidence intervals.238

6.10 Figure of perceived workload based on the number of AI teammates.

Error bars denote 95% confidence intervals. . . . . . . . . . . . . . . . 239

xvi

6.11 Figure of AI teammate acceptance based on the number of AI team-

mates. Error bars denote 95% confidence intervals. . . . . . . . . . . 240

7.1 RQ1 Study Relationships . . . . . . . . . . . . . . . . . . . . . . . . . 268

7.2 RQ2 Study Relationships . . . . . . . . . . . . . . . . . . . . . . . . . 271

7.3 RQ3 Study Relationships . . . . . . . . . . . . . . . . . . . . . . . . . 274

7.4 RQ4 Study Relationships . . . . . . . . . . . . . . . . . . . . . . . . . 277

xvii

Chapter 1

Introduction and Overview of Dissertation

1.1 Overview

“The potato can, and usually does, play a twofold part: that of a nutritious food, and that of a weapon ready forged for the exploitation of a weaker group in a mixed society.” –Redcliffe N. Salaman, The History and Social Influence of the Potato

“If the simple potato can have a great deal of social influence on the way humans interact with each other and society, what is stopping AI teammates from doing the same?” –Christopher Tod Flathmann, How to Make Agents and Influence Teammates

The integration of AI systems in the coming years has the potential to revolutionize modern workforces and provide them with highly analytical systems capable of performing actions not feasible for humans [438]. However, beyond being tools used by humans, AI promises autonomous systems that can listen, interpret, think, and act without the direct control of human users [406, 269]. Human-AI teaming serves as a fully realized implementation of this idea, as it allows AI systems to be designed, created, and viewed as autonomous teammates that act interdependently with humans rather than as simplistic tools [329]. Moving AI systems away from tooling and towards teaming is, however, not a trivial task and may not be an entirely frictionless experience. Importantly, new friction in integrating AI teammates may arise because the transition from tool to teammate gives an AI system greater responsibility, greater ability, and, most of all, greater social influence over the humans it interacts with. Thus, researchers must explicitly understand this increase in social influence if AI teammates are to enter future societies and workforces. This chapter provides a definition and contextualization of this social influence, details the challenges preventing us from understanding it, and summarizes the work completed by this dissertation.

Figure 1.1: Graphic displaying the underexplored role social influence plays in human-AI teaming during human-AI interaction. A 1-human, 1-AI dyad is shown to reduce figure complexity and increase readability.

1.2 Problem Motivation

While human-AI teaming research has gained traction over the last several years, it has (1) largely ignored the critical teaming component of social influence and (2) given minimal consideration to the initial acceptance of AI teammates in real-world settings. These two challenges are discussed in detail below and serve as the central motivations of this dissertation.

As AI technology progresses, human-AI teaming serves as a unique application of AI in which AI teammates have more complex roles than simplistic tools, because the role, responsibility, and autonomy of a teammate are greater than those of a tool [329]. This increase in role and responsibility has ultimately led AI teammates to make greater use of shared resources to accomplish shared goals within a human-AI team, otherwise known as having teaming influence (top/bottom purple boxes, Figure 1.1). Previous research has shown that this teaming influence contributes heavily to various teaming outcomes, such as team performance [329], trust [284], shared understanding [111], ethics collaboration [143], and team cognition [390] (right-most red box, Figure 1.1).

Although research has acknowledged the impacts of AI teammate teaming influence on the above-mentioned teaming factors and on human teammates [480], there is likely an unexplored secondary influence, known as social influence, that also contributes to these human-AI teaming factors (middle yellow box, Figure 1.1). Social influence is defined as the “change in an individual’s thoughts, feelings, attitudes, or behaviors that results from interaction with another individual or a group” [355]. Within human-human teams, social influence often occurs as a result of teammates interacting with each other’s teaming influence [240]. Based on this understanding, Figure 1.1 represents how AI teaming influence potentially leads to social influence through human-AI teaming interaction. Despite the importance of social influence to teaming [240], this representation is inherently limited, as research has yet to explicitly explore how interaction with teaming influence creates AI teammate social influence that produces lasting change in humans’ behaviors and perceptions. As such, a holistic understanding of human-AI teaming and AI teammate impacts cannot be achieved without understanding AI teammate social influence. This holistic understanding is critical because all of these factors, including social influence, will exist in the real-world teams that eventually work with AI teammates.

Furthermore, understanding AI teammate social influence would not only deepen our understanding of human-AI teaming; the existence of AI teammate teaming and social influence may also ultimately affect the technology’s acceptance, meaning that ignoring the concept may hinder the acceptance of AI teammates themselves. Technology acceptance is generally dictated by two key factors, perceived utility and ease-of-use, which have been synthesized into the technology acceptance model (TAM) [105]. While perceived utility has maintained a relatively constant presence in the TAM, it has yet to be explored from the perspective of AI teammate teaming influence. Ease-of-use, by contrast, has often been heavily updated to accommodate new mediums, modalities, and applications [451]. However, the existence of social influence from AI teammates means that humans will not just use AI teammates; AI teammates will, in effect, also use humans. Meanwhile, the only social influence considered in ease-of-use and technology acceptance is external social influence, such as peer pressure from human friends to accept a technology, not social influence exerted by the technology itself [451]. Thus, the potential acceptance of AI teammates cannot be understood without considering the role AI teammate social and teaming influence play in humans’ acceptance.

These two challenges present distinct roadblocks and gaps that prevent the progression of human-AI teaming: (1) a minimal understanding of how AI teammate teaming influence becomes social influence prevents AI teammate impacts from being holistically understood, and (2) the initial acceptance of AI teammates may be miscalculated without an understanding of how humans accept AI teammate teaming and social influence. Thus, this dissertation tackles these roadblocks with the goal of making AI teammates that are both beneficial to and accepted by human teammates.

1.3 Research Motivation

While the above problem motivation identifies the specific practical knowledge gaps that inspire this work, these problems must be solved by understanding and subsequently closing existing research gaps. Specifically, this dissertation synthesizes two research domains: Human-AI Teaming and Human-Centered AI. Both domains study rapidly advancing fields of technology that have seen a high degree of crossover in recent years. Moreover, the importance of both AI teammate teaming and social influence, and of the acceptance of these influences, necessitates combining these two domains to achieve a holistic picture. In addition to Human-AI Teaming and Human-Centered AI, the general research field of social influence also provides key considerations for this dissertation.

1.3.1 Human-AI Teaming

Over the past decade, AI systems and their applications have advanced in a variety of ways. For instance, Natural Language Processing, Decision Making, Digital Assistants, and even Recommender Systems have each propelled AI forward as a technology [459]. However, one of the most promising applications of AI technology, which will build on a multitude of other AI domains, is the creation of AI teammates. AI teammates are created to function as autonomous systems alongside humans, leveraging their unique computational strengths to complete tasks in real-world contexts [285]. Specifically, human-AI teams are defined as follows: “interdependence in activity and outcomes involving one or more humans and one or more autonomous agents, wherein each human and autonomous agent is recognized as a unique team member occupying a distinct role on the team, and in which the members strive to achieve a common goal as a collective” [329]. While the concept of AI teammates has yet to see consistent real-world application, researchers have recently begun to explore it heavily for future application [389]. Fortunately, past research has found immense potential in human-AI teaming as an application of AI technology, as AI teammates can use their computational capabilities to complement potential weaknesses in human teammates, leading to greater efficiency [285]. However, these efficiency gains are not always accompanied by similar improvements in perceptions of AI systems, as trust in AI teammates is often lower than trust in human teammates [284]. Moreover, these perceptual impacts are not isolated to human-AI relationships; they also affect the perceptions humans have of each other, meaning AI teammates can also affect human-human relationships [140].

Due to the potential misalignment between performance gains and human-factors issues in human-AI teams, a large portion of recent research has shifted towards more critically examining specific human factors and human teaming concepts in human-AI teaming, many of which are listed above [329]. However, these explorations commonly look only at the final impact that AI teammate teaming influence has on said factors, without considering the gradual process of social influence that produces these results. For instance, the interactions humans have with AI teammates have demonstrable impacts on trust [284], and the same can be said for other human factors such as shared understanding [111] or ethics [259]. Where social influence has been considered, research has examined only external social influences, such as organizational policy, and how they impact human-AI collaboration and teaming [262]. However, the social influence exerted by AI systems in human-AI teams has yet to be explored, and it has received only minor exploration in the broader domain of human-AI interaction [184]. Thus, existing human-AI teaming research has a blind spot, as it overlooks the social influence AI teammates themselves have on human teammates, which has created the following gap: the explicit and literal exploration of the social influence AI teammates have as a result of their teaming influence in human-AI teams has not been explored.

The above research gap must also be extended to a second gap in human-AI teaming. Specifically, AI teammates will not be the only source of social influence humans experience in human-AI teams, which can include both human-human and human-AI relationships. Humans already exert a great deal of social influence in the teams they are part of [165]. Moreover, within human-AI teams that include multiple humans, different social influences compete, which ultimately diminishes the impact of any individual’s social influence and even affects existing relationships on teams [156]. Therefore, once a base understanding of how an AI teammate’s social influence affects human teammates is established, this understanding needs to be extended to accommodate (1) existing human relationships that could be affected by this social influence and (2) competing human influence that may weaken AI teammate social influence. Thus, the above research gap must be extended to include: AI teammate social influence has not yet been explicitly studied in contexts with existing human relationships and teaming influence from multiple humans.

1.3.2 AI Acceptance & Human-Centered AI

As mentioned above, the TAM has historically been a critical framework for determining the potential acceptance of emerging technologies [105]. Its relevance and importance have not faded over the years, as it has been repeatedly updated to accommodate new technologies alongside our growing understanding of human-computer interaction [89]. Indeed, it is not unusual for a newly emerging field of technology, such as human-AI teaming, to require updates to the TAM. Importantly, updating the TAM to better accommodate human-AI teaming will not negate existing understandings of ease-of-use and perceived utility, which remain applicable to human-AI teaming and AI technologies [17, 16]; rather, it will widen the TAM’s applicability to this novel form of human-computer interaction. For instance, the perceived reliability of a system, a critical component of general technology acceptance, is also an important factor for AI teammates, as humans will need to rely on them in teaming situations [99]. Thus, it is critical to explore how human-AI interaction can be improved through the existing factors that affect ease-of-use.

Recently, in hopes of improving both perceived utility and ease-of-use, AI research has shifted its focus towards designing AI to be human-centered. This human-centeredness is often achieved by creating design recommendations that researchers and developers can use as guidelines for building AI systems that benefit humans [22]. These include recommendations for AI systems that account for individual differences that may affect how people perceive, accept, and interact with AI [474]. These recommendations often target the perceived utility of an AI system, for example through increased algorithmic precision and explainability or the creation of educational materials [161, 29, 301]. Recommendations can also specifically target the ease-of-use of AI tools, such as the use of voice interaction, conversational speech, or visual communication [449, 104, 223]. However, while modern research on AI tools has commonly tackled the concepts that help garner AI acceptance, these findings may become less relevant given the unique teaming and social influence exerted by AI teammates as opposed to AI tools. Thus, the following research gap exists: AI teammate acceptance does not yet consider the social influence associated with AI teammates’ teaming influence, resulting in a miscalculation of potential acceptance.

In light of events in 2023, the above problem motivations and human-centered AI research motivations have gained importance. At the time of writing, new and powerful AI platforms, such as OpenAI’s ChatGPT, have been introduced and rapidly propelled into society’s focus due to their ability to benefit a variety of work domains, including software development and even academic writing [437, 429]. However, alongside their introduction, tens of thousands of layoffs have occurred in the tech sector, while billions more have been invested in AI platforms [14]. While these platforms may not be the direct cause of these layoffs, the timing of their introduction and their proposed capabilities pose a potential disruption to the workforce [34], and workers’ perspectives on these technologies may begin to shift from interest to hesitancy. As such, in addressing the above research and problem motivations, this dissertation demonstrates the damage that implementing AI systems as tools or teammates can cause to technology acceptance.

1.3.3 Relevant Concepts of Social Influence to AI Teammates

Because the field of social influence is broad and varied [400, 240], this dissertation does not focus on directly extending its existing literature; it is nonetheless important to explicitly state how this dissertation scopes social influence in human-AI teams. Using the definition provided above along with the scope of human-AI teaming, the term “interactions” refers only to the interactions humans have with AI teammates when both are working in a shared environment to complete a shared goal. In other words, this dissertation examines how the teaming influence an AI teammate has on a shared task and goal ultimately creates social influence on humans’ perceptions and behaviors. This scoping frames social influence as the result of natural teaming processes and shared goals, not of targeted social influence carried out with the explicit goal of manipulating others [412, 98]. While the concept of social influence has been used to better design the social components of AI systems, such as their conversational language [346], human-AI social influence that arises from AI teaming influence has yet to be explicitly studied, as mentioned previously.

Importantly, the concept of social influence is explicitly operationalized within teaming research. For example, team interdependence is often wholly reliant on the social influence received from teammate interaction, as efficiently receiving this social influence enables efficient interdependent teaming [165]. Furthermore, beneficial interactions between teammates and leaders often consist of leaders exerting social influence through exemplary behavior rather than underhanded manipulation [409, 19]. The inclusion of technology in teaming has also enabled modern teams to better mediate and use social influence across different work hierarchies [256]. Thus, social influence is relevant not only to teaming but also to teams in the digital age and to AI agents as a whole. In other words, even if the manifestation of social influence changes, it will still exist in a recognizable form within human-AI teams. Despite the importance of social influence to teaming and the ability of digital systems to impose social influence, research on technology-mediated and technology-imposed social influence has not yet considered the ability of AI teammates to possess and impose high levels of social influence. Thus, the following gap exists: technology-mediated social influence has yet to be studied from the perspective of AI teammates.

Furthermore, one must consider not only the role of AI in this social influence process but also the role of the human who experiences that social influence. Within the field of social influence, applying and receiving social influence are known as persuasion [325] and susceptibility [4], respectively. Rather than diving into the technical specifics of these two concepts, a real-world example provides a clear illustration. Consider two players on a basketball team: one has the ball (the human teammate) and the other is open for a pass (the AI teammate). While both teammates are working towards a shared goal, the AI can attempt to persuade the human teammate by getting open and asking for a pass. However, the human may not pass because they are not susceptible to the AI teammate’s social influence due to, say, negative perceptions of the AI teammate. Extending this analogy, it is not enough for an AI to be persuasive in its use of social influence; humans also need to be susceptible to an AI teammate’s social influence in order to accept it and, in turn, accept the AI teammate. Given this dissertation’s focus on both acceptance and social influence, these concepts are used as proxies for (1) the innate acceptance humans could have for AI teammates and their teaming and social influence (susceptibility) and (2) the ways AI teammates can be designed to better promote acceptance (persuasion). Thus, the following research gap exists: factors that mediate the susceptibility and persuasion of AI teammate social influence have not been empirically examined.

1.4 Research Questions and Gaps

This dissertation answers several research questions aimed at understanding social influence in human-AI teaming, with a focus on how the answers may shed light on the potential acceptance of AI teammates. These research questions are listed in Table 1.1 and serve as the center of discussion for the entirety of this dissertation.

RQ# | Research Question
RQ1 | How does teaming influence applied by an AI teammate become a social influence that affects human teammates?
RQ2 | How do varying amounts of AI teammate teaming influence mediate humans’ perceptions and reactions to AI teammate social influence?
RQ3 | How accepting are humans to AI teammate teaming and social influence, and can AI teammate design increase acceptance?
RQ4 | Does the role of AI social influence change in teams with existing human-human teaming and social influence?

Table 1.1: Research Questions

This work is guided not only by critical research questions but also by the goal of closing multiple research gaps pertaining to human-AI teaming, human-centered AI, and the social influence of AI technology. Table 1.2 outlines how the research questions listed above target specific gaps, which are derived from this dissertation’s problem and research motivations. These gaps are not exclusive to research domains; they span both research and practical efforts in human-centered AI. Closing these gaps, in addition to answering the above research questions, helps ensure that the contributions of this dissertation serve both research and industry efforts.

Research Gap | Research Questions
The explicit and literal exploration of the social influence AI teammates have as a result of their teaming influence in human-AI teams has not been explored. | RQ1, RQ2
AI teammate social influence has not yet been explicitly studied in contexts with existing human relationships and teaming influence from multiple humans. | RQ1, RQ2, RQ4
AI teammate acceptance does not yet consider the social influence associated with AI teammates’ teaming influence, resulting in a miscalculation of potential acceptance. | RQ2, RQ3
Technology-Mediated Social Influence has yet to be studied from the perspective of AI teammates. | RQ1, RQ2, RQ4
Factors that mediate the susceptibility and persuasion of AI teammate social influence are not empirically examined. | RQ3

Table 1.2: Research Gaps Being Closed By Research Questions

1.5 Summary of Studies

This dissertation is composed of three overarching studies. Each study is summarized below, and detailed breakdowns of each study are included later in this document. Importantly, given how little is understood about AI teammate social influence, this dissertation works from the perspective of manipulating teaming influence and linking these manipulations to the outcomes of social influence and acceptance. In turn, an analytical connection can be made between AI teammate social influence and acceptance.

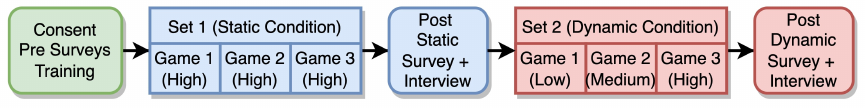

In general, Study 1 provides a foundational understanding of teaming and social influence, which Study 2 and Study 3 then extend in different ways. Study 1 manipulates teaming influence by manipulating AI teammate behavior, and it details how and why teaming influence ultimately becomes social influence. Study 2 extends this understanding by explicitly linking teaming influence to acceptance, examining how variations in teaming influence affect acceptance. Finally, Study 3 extends Study 1 by exploring the creation of social influence in more complex environments where multiple human teammates exist, while also manipulating the number of AI teammates. Table 1.3 links each study to the research questions it explicitly addresses, and Figure 1.2 represents the structure of this dissertation.

Figure 1.2: Graphical Representation of Studies

Study # | Short Study Title | Research Questions Addressed
1 | Using Teaming Influence to Create a Foundational Understanding of Social Influence in Human-AI Dyads | RQ1, RQ2, RQ3
2 | Understanding Acceptance and Susceptibility Towards AI Teammate Social Influence | RQ2, RQ3, RQ4
3 | Understanding the Impact of AI Teammate Social Influence that Exists Alongside Human Social Influence | RQ1, RQ2, RQ4

Table 1.3: Studies that Address Each Research Question

1.5.1 Study 1: Using Teaming Influence to Create a Foundational Understanding of Social Influence in Human-AI Dyads

Through two sub-studies (Study 1a and Study 1b), Study 1 observes how the more familiar concept of teaming influence ultimately impacts human-AI teams and becomes social influence. This first observation is critical because the existence of AI social influence has not yet been explicitly documented, so a foundational exploration of it is needed. Study 1a examines how varying levels of teaming influence change human performance and perceptions. Study 1b provides an in-depth exploration of how an AI teammate’s teaming influence becomes social influence.

For Study 1a, results indicate that high levels of teaming influence harm performance, but if AI teammates decrease their teaming influence over time, they can “set the tone” and socially influence humans to improve their own performance. For Study 1b, results show that humans rapidly interpret AI teammate teaming influence as social influence and quickly adapt, but certain conditions need to be met for this to happen. Specifically, humans need to feel a sense of control, justify their adaptation through skill gaps or technology limitations, and observe AI teammate behavior to determine how best to adapt. The results of these two studies not only verify the fundamental existence of AI teammate social influence but also empirically demonstrate its impact on perception and performance.

1.5.2 Study 2: Examining the Acceptance and Nuance of AI Teammate Teaming Influence From Both Human and AI Teammate Perspectives

Study 2 of this dissertation is inspired by the most prevalent finding of Study 1: ideal teaming influence levels are not universal but result from AI design and humans’ past experiences. Furthermore, Study 1 found that the optimal level of teaming influence was not binary, in that neither high nor low levels were universally ideal; rather, ideal levels of teaming influence exist on a highly personal spectrum. Thus, Study 2 uses two factorial survey studies to examine the acceptance of teaming influence from the following angles: (1) the ideal level of teaming influence humans want when given a higher-fidelity spectrum of options; (2) how changes in the way AI teammates are presented to humans can mediate humans’ acceptance of AI teammate teaming influence; and (3) the individual differences that can mediate humans’ acceptance of AI teammate teaming influence.

Regarding teaming influence allocation, participants’ perceptions of AI often declined as teaming influence increased across multiple tasks; this was not the case when teaming influence was allocated within a single task, where adoption likelihood was highest when teaming influence was evenly shared between humans and AI. On the other hand, perceptions such as job security always trended down as AI teaming influence increased, regardless of whether that teaming influence was shared across multiple tasks or within a single task. Additionally, these perceptions were more positive when the AI was communicated as a tool, was endorsed by coworkers, or had previously been observed by the participant. Finally, commonly used individual-difference measures were not consistently associated with AI teammate adoption, with the exception of one’s perceived capability of computers, which had a positive relationship with AI teammate adoption. These results indicate that, for AI teammate acceptance, the teaming influence and design of AI teammates have more impact than the commonly measured individual differences that are often emphasized in technology acceptance.

1.5.3 Study 3: Understanding the Creation of AI Teammate Social Influence in Multi-Human Teams

Given that teaming influence is not confined to human-AI relationships, the contributions of this dissertation would not be complete without examining how AI teammate teaming and social influence affect human-AI teaming when multiple sources of teaming influence exist. Thus, Study 3 focuses on the role AI teammate social influence can play outside of dyads, in teams where human-human social collaboration is present. Study 3 manipulates the level of AI teammate teaming influence while also manipulating the amount of preexisting experience human teammates have with each other, with the goal of increasing prior experience with human-human teaming influence. However, unlike in Study 1, the amount of AI teaming influence is manipulated by varying the number of AI teammates applying teaming influence rather than the frequency with which a single teammate applies it. This distinction is important, as AI teammate teaming influence defined by the number of AI teammates present will likely become more salient over time as AI teammates become more prevalent within teams. Study 3 also examines the impact of this teaming influence on human-human and human-AI relationships through the lens of interdependence, which, as stated above, is a critical consideration in teaming and social influence.

Results show a gap between human-human and human-AI perceptions of interdependence: humans often perceived themselves to be significantly more interdependent with other humans than with AI teammates, despite AI teammates having significantly higher perceived performance. Additionally, this gap grows as the amount of AI teaming influence increases through a larger number of AI teammates. However, an evaluation of the qualitative data shows that, while perceived interdependence with AI teammates lessened, humans became more behaviorally interdependent with AI teammates as teaming influence increased. Thus, Study 3 derives that as AI teammate teaming influence becomes social influence, that social influence can negatively impact humans’ perceptions of AI teammates while positively impacting their behavioral adaptation around AI teammates. Additionally, human-human relationships and perceptions were strengthened as humans developed deeper understandings of their human teammates and held much stricter expectations for their AI teammates. Given these results, Study 3 concludes that AI teammate teaming influence will become social influence in multi-human teams, but humans prefer the presence of human teaming and social influence in these teams.

1.6 Conclusion

Although the technology required to create AI teammates becomes more of a reality every day, a gap remains that may prevent the initial formation of human-AI teams. Specifically, while a large portion of research has acknowledged the teaming influence AI teammates will have, this dissertation is the first to broadly and explicitly study the social influence that stems from teaming influence and creates lasting change in human teammates. Moreover, each study provides a unique and novel contribution. Study 1 provides one of the first explorations of how teaming influence ultimately becomes social influence in human-AI teams and how variations in teaming influence change the impacts of social influence. Study 2 is one of the first studies to explicitly examine the acceptance of AI teammates, especially the acceptance of AI teammate teaming influence. Finally, Study 3 provides one of the first explorations of whether AI teammates' teaming influence can become social influence when multiple humans are present in a human-AI team. Together, these three studies provide a foundational understanding of teaming and social influence in human-AI teams and of the relationship between these influences and human acceptance. Thus, this dissertation enables researchers and practitioners alike to ensure that the potential benefits of human-AI teams are not squandered because humans reject the concept and block its initial formation.


Chapter 2

Background

Before diving into the specific studies that comprise this dissertation, the prior research that grounds this work should be reviewed in depth. Specifically, this work builds on three different areas: (1) human-AI teaming; (2) human-centered AI and AI acceptance; and (3) social influence as it relates to human-AI teaming. For (1), human-AI teaming is projected to be a highly opportune context for integrating AI and serves as the contextual motivation that scopes the contributions and environments this dissertation targets. For (2), the domain of human-centered artificial intelligence serves as the problem and deliverables motivation of this dissertation, meaning the outcomes and interpretations of this research target human-AI teaming from a human-centered AI perspective. Lastly, for (3), the components of social influence that are relevant to human-AI teaming serve as the theoretical and historical motivation for this work. These three domains are discussed below within these contexts to set up the later presentation of the three studies within this dissertation.


2.1 Human-AI Teamwork

The domain of human-AI teaming is a highly interdisciplinary crossroads between computing, teaming psychology, and human-computer interaction. Moreover, this interdisciplinary nature often results in a fast-paced and turbulent research community that is racing to keep pace with computationally driven research. In fact, this turbulence is one of the key motivators for this research, as new innovations are created every day and continue to make AI teammates more viable. However, this viability comes at a cost: social influence. While technology has historically mediated social influence in teams, AI teammates possess the qualities of both technology and teammate, meaning they will mediate social influence as a technology while also owning and utilizing social influence as a teammate. This merger is what distinguishes AI teammates from basic AI systems that are used as tools. Specifically, this work examines two important components of human-AI teaming: (1) its current state; and (2) the recent shift towards human factors that is driving the domain's future. This work uses these components to define the environmental context at which the studies and contributions of this dissertation are aimed.

2.1.1 The Current State of Human-AI Teaming

As of now, human-AI teaming is still in its infancy; however, definitions and instances of human-AI teaming have begun to appear in recent years. This dissertation utilizes the following definition of human-AI teaming: “at least one human working cooperatively with at least one autonomous agent, where an autonomous agent is a computer entity with a partial or high degree of self-governance with respect to decision-making, adaptation, and communication” [329]. In other words, agents or AI teammates within human-AI teams must have at least some teaming influence over their own decisions, adaptation, and communication. The potential value of human-AI teaming to society and the workforce is clear, as evidenced by a wide range of conceptual and empirical research. For example, human-AI teams' performance has the potential to far surpass that of human-human teams if AI teammates are designed correctly [285, 110], and in domains where human-human teams may remain superior, human-AI teams can serve as high-quality training methods [299]. Additionally, incorporating AI teammates requires transitioning human workers from roles that involve repetitive tasks to roles that require nuance and high-level problem solving, thus allowing human and AI teammates to complement each other [392, 199, 44]. Consequently, shifting from human-human teaming to human-AI teaming involves more than just adding an AI teammate to a team; it requires realigning the goals, roles, and behaviors of existing teams.

This shift does not mean that maximizing the contribution of an AI agent in turn maximizes the benefit to a human [40, 43, 39]. Rather, the creation of effective human-AI teams needs to be guided by the effective use of both humans and AIs. Unfortunately, this balance is not always a given due to the fractured nature of human-AI teaming research. Specifically, AI-agent research is often conducted from a computer science perspective, which for a long time was agnostic of potential human collaborators and more focused on the algorithmic design and validity of AIs [354]. On the other hand, human research has historically been conducted by psychology and human-factors researchers who lacked the expertise to build real AI systems and were often relegated to Wizard-of-Oz studies in which a human masqueraded as an AI [102, 276, 443, 399]. While this human research produced important findings, its use of human stand-ins for AIs produced results that reflect AI capabilities more advanced than the current state of AI research [285]. This means that computational and human research come not only from two different domains but also from two different temporal perspectives: human research looks further into the future, while computer science research looks at the tools available now. However, these two directions have continuously drifted towards each other and have recently begun to intersect, with multiple real-world autonomous systems being pioneered by human-factors research groups [38, 299, 364] and more computer-science-oriented research beginning to consider human compatibility [42, 41].

Fortunately, this merger is rapidly advancing the potential application of human-AI teams in a variety of contexts, which shows how wide-reaching the contributions of this dissertation can be. For example, military contexts have repeatedly shown interest in integrating AI-agent systems, especially alongside humans [80, 37, 394, 71, 369]. Moreover, the military domain has already recognized the importance of both technological and human advances in the creation of human-AI teams, which has led to multiple funding programs targeted at the intersection of these topics [321, 320, 319]. Importantly, one of these funding programs even includes attention to the concepts of trust and influence, as influence and its organization have been a critical component of military domains [322]. Similarly, human-AI teams could be widely used in medicine, whether in data management and diagnosis [10, 238, 69, 188, 413, 238], patient care [214, 162, 210, 55, 23, 279], or even surgical teams [84, 173, 174, 232, 483, 293]. Other domains that could benefit from human-AI teaming include search and rescue [347, 8, 12], financial trading and planning [351, 342, 195], and manufacturing [258, 139, 213, 57, 169, 389], to name a few. Given the scope of this dissertation, it is not necessary to dive further into the application of human-AI teaming within these domains; however, highlighting the wealth of research coming out of these domains demonstrates the breadth of application society will see for human-AI teaming. Importantly, as computing and human-AI research advance, the application of human-AI teaming to these domain areas inches ever closer, and new potential contexts for application appear often.

2.1.2 Human Factors and Their Importance to Human-AI Teaming

As the disciplines within the interdisciplinary field of human-AI teaming have grown closer over the years, research has increasingly identified the criticality of human factors. While the concept of human-centered AI has come a long way (discussed later), teaming derives not from human-centered technology but from human-human interaction. This does not, however, mean that the later discussion of human-centered AI is irrelevant, as the highly human nature of teaming makes general human-centeredness critical [471, 458]. Even so, it is not enough for an AI teammate to follow basic human-centered design; rather, AI teammates must be designed with teaming factors in mind [480]. Indeed, human factors have long been a major component of teaming research, and as human-centered AI has grown, so too has the wealth of research targeting human factors in human-AI teaming.

Accordingly, it is important to review which human factors are being researched within human-AI teaming, their identified importance to human-AI teaming, and their relationship to influence within a team. It is also important to mention that efficient human-AI teaming requires the holistic inclusion of all of these factors, including general human-centered design; however, that cannot be done until each