NIEHS Report on

Evaluating Features and

Application of Neurodevelopmental

Tests in Epidemiological Studies

NIEHS 01

June 2022

National Institute of

Environmental Health Sciences

Division of the National Toxicology Program

National Institute of Environmental Health Sciences

Public Health Service

U.S. Department of Health and Human Services

ISSN: 2768-5632

Research Triangle Park, North Carolina, USA

Foreword

The National Institute of Environmental Health Sciences (NIEHS) is one of 27 institutes and

centers of the National Institutes of Health, part of the U.S. Department of Health and Human

Services. The NIEHS mission is to discover how the environment affects people in order to

promote healthier lives. NIEHS works to accomplish its mission by conducting and funding

research on human health effects of environmental exposures, developing the next generation of

environmental health scientists, and providing critical research, knowledge, and information to

citizens and policymakers, to help in their efforts to prevent hazardous exposures and reduce the

risk of preventable disease and disorders connected to the environment. NIEHS is a foundational

leader in environmental health sciences and committed to ensuring that its research is directed

toward a healthier environment and healthier lives for all people.

The NIEHS Report series began in 2022. The environmental health sciences research described

in this series is conducted primarily by the Division of the National Toxicology Program (DNTP)

at NIEHS. NIEHS/DNTP scientists conduct innovative toxicology research that aligns with real-

world public health needs and translates scientific evidence into knowledge that can inform

individual and public health decision-making.

NIEHS reports are available free of charge on the NIEHS/DNTP website and cataloged in

PubMed, a free resource developed and maintained by the National Library of Medicine (part of

the National Institutes of Health).

Table of Contents

Foreword

About This Report

Peer Review

Publication Details

Acknowledgments

Conflict of Interest

Abstract

Preface

Introduction

Psychometric Tests

Background

Test Domains

Omnibus Tests

Clinical Assessment Instruments

Domain-specific Tests

Methodology for Identifying Psychometric Tests and Extracting Test Information

Process of Selecting Tests

Test Information Extraction

Potential Limitations of Test Selection and Data Extraction Approach

Data Availability

Part 1: Principles for Evaluating Psychometric Tests

Reliability

Validity

Standardized Administration Methods

Normative Data

Part 2: Potential Sources of Bias Related to Selection and Administration of Neurodevelopmental Assessments in Epidemiological Studies

Test Attributes

Selection of Psychometric Tests

Appropriateness of Tests for Assessment

Participant Age

Culture

Language

Score Derivation

Factors Related to Test Administration

Standardized Test Administration

Test Examiner

Test Administration, Environment, and Conditions

Longitudinal Studies

Clinical Diagnoses

References

Appendix A. Psychometric Tests Considered for Evaluation

Appendix B. Test Evaluation Tables

Appendix C. References for DNT Test Information Extraction Database

Appendix D. Supplemental Files

Tables

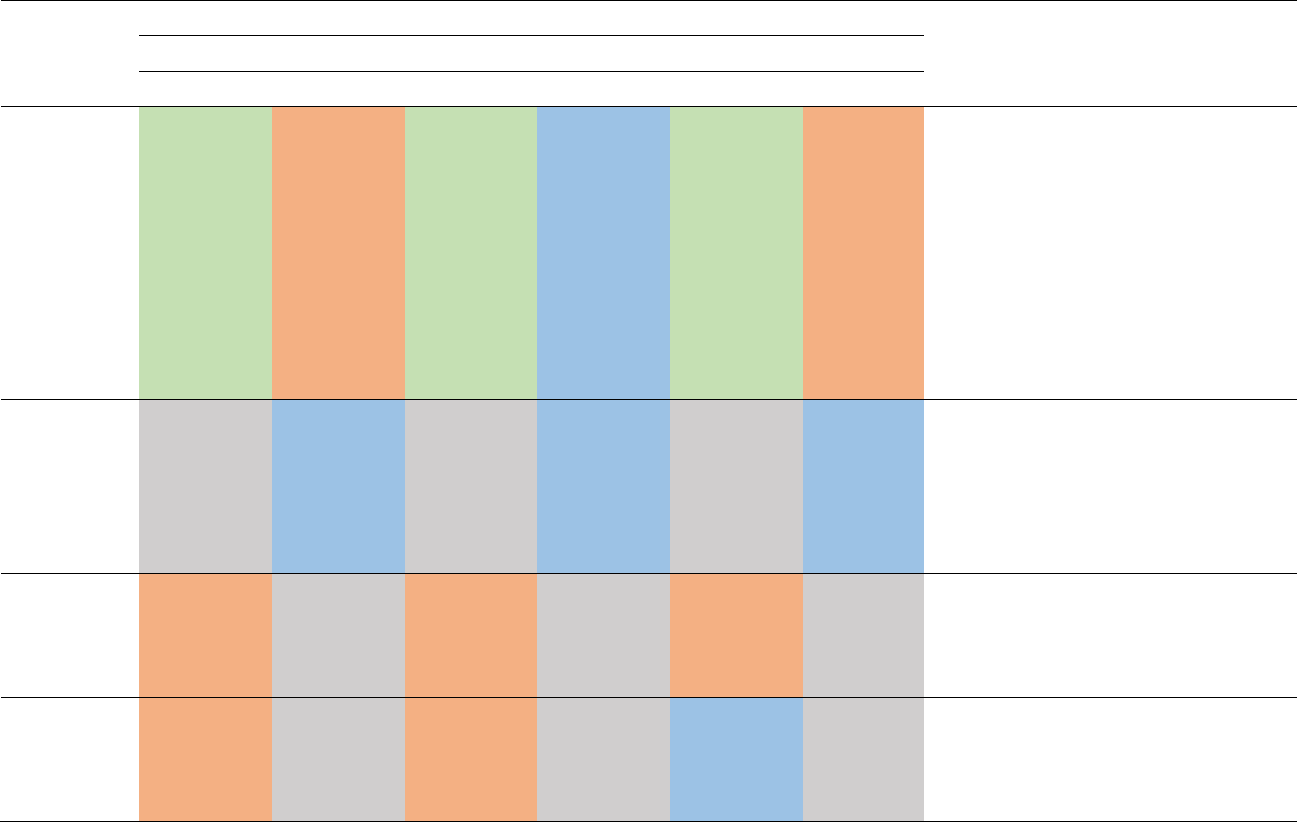

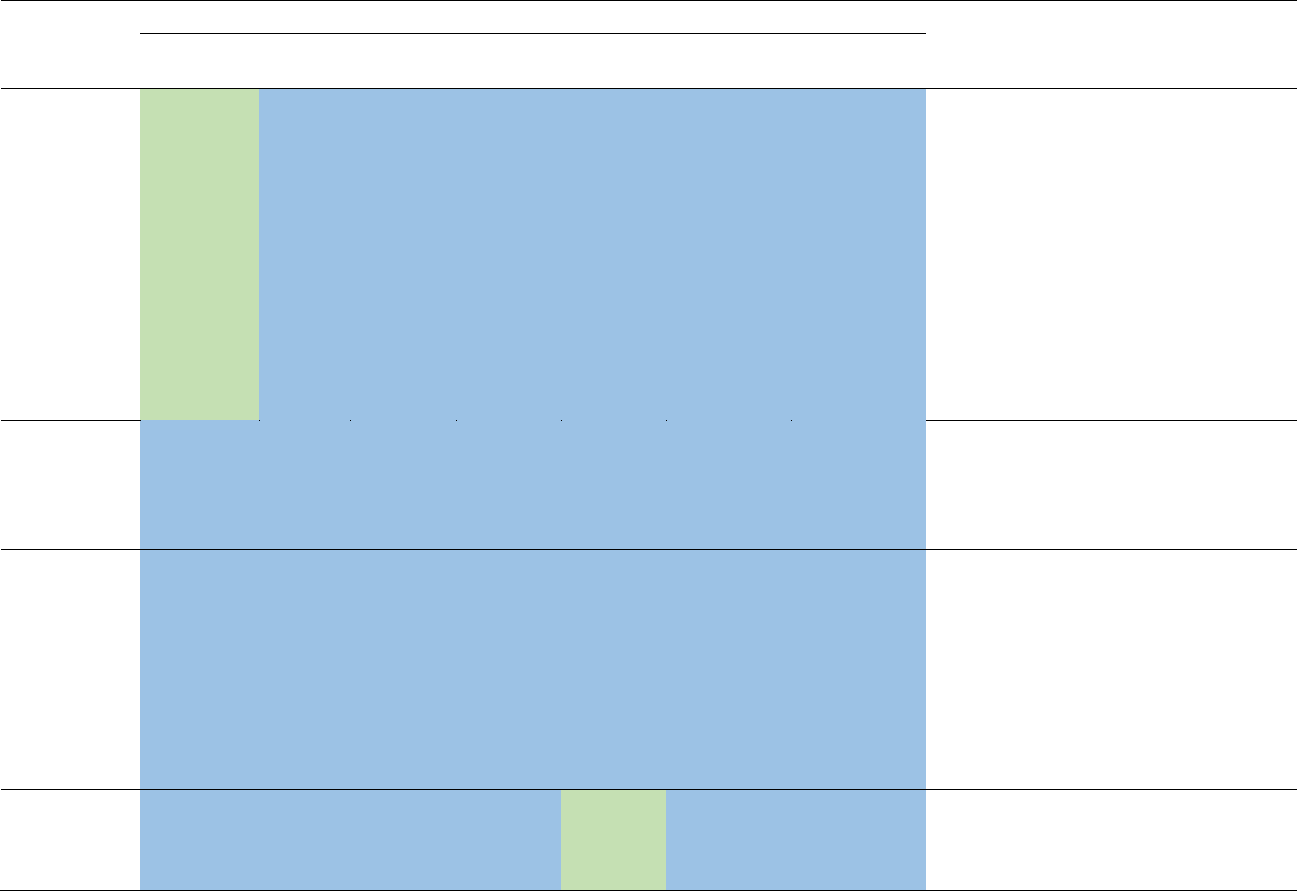

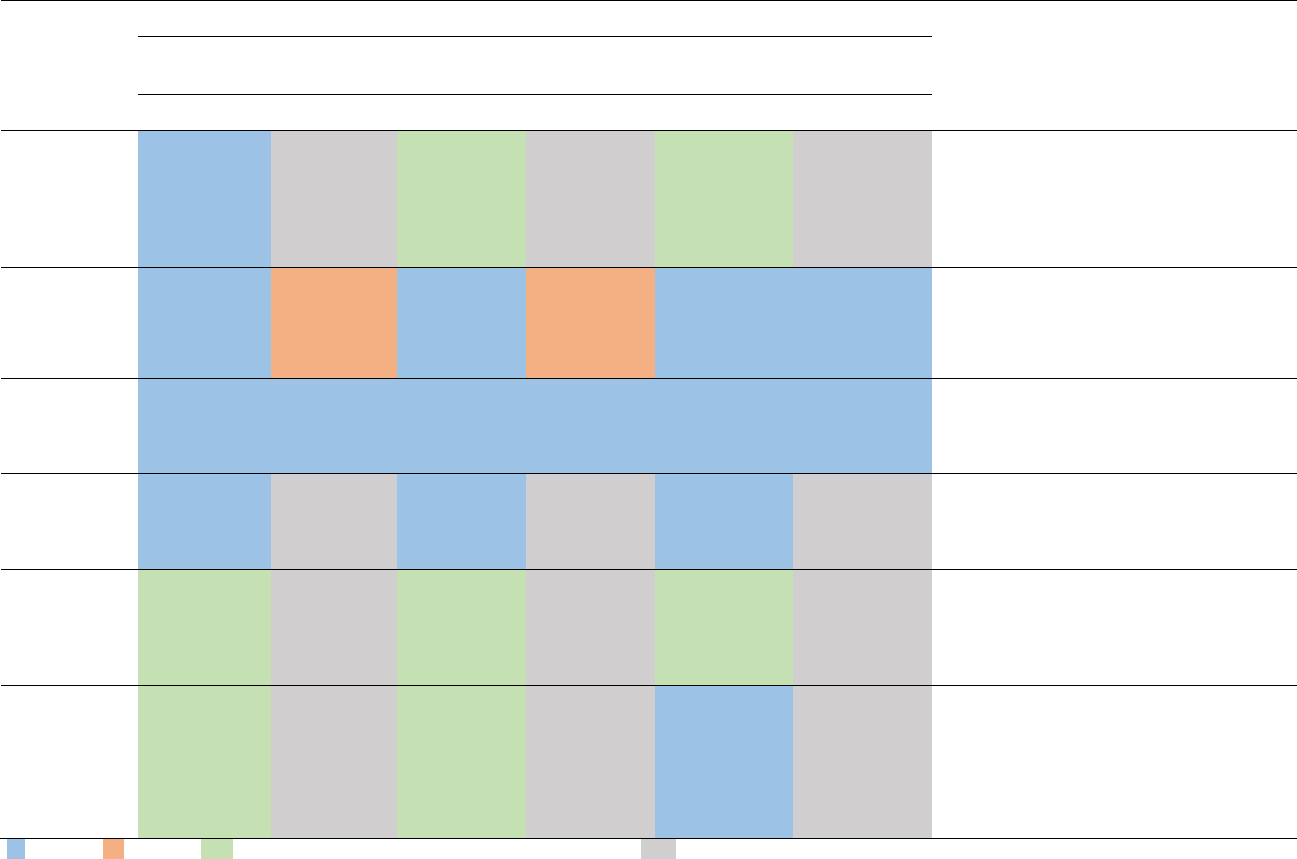

Table 1: Summary of Factors Influencing Neurodevelopmental Test Performance and the Likelihood of Bias

Table 2: Likelihood of Bias for Individual Factors Related to Psychometric Test Administration in Epidemiological Neurodevelopmental Toxicity Studies

About This Report

Authors

Roberta F. White¹, Joseph M. Braun², Leonid Kopylev³, Deborah Segal³, Christopher A. Sibrizzi⁴, Alexander J. Lindahl⁴, Pamela A. Hartman⁴, John R. Bucher⁵

¹Boston University, Boston, Massachusetts, USA

²Brown University, Providence, Rhode Island, USA

³U.S. Environmental Protection Agency, Washington, District of Columbia, USA

⁴ICF, Fairfax, Virginia, USA

⁵Division of the National Toxicology Program, National Institute of Environmental Health Sciences, Research Triangle Park, North Carolina, USA

Boston University, Boston, Massachusetts, USA

Contributed to conception and design, and contributed to drafting of report

Roberta F. White, Ph.D., A.B.P.P./C.N., Lead Author

Brown University, Providence, Rhode Island, USA

Contributed to conception and design, and contributed to drafting of report

Joseph M. Braun, R.N., M.S.P.H., Ph.D., Lead Author

Division of the National Toxicology Program, National Institute of Environmental Health

Sciences, Research Triangle Park, North Carolina, USA

Contributed to conception or design and contributed to drafting of report

John R. Bucher, Ph.D., Project Lead

U.S. Environmental Protection Agency, Washington, District of Columbia, USA

Contributed to conception or design and contributed to drafting report

Leonid Kopylev, Ph.D., Project Co-lead

Deborah Segal, M.E.H.S., Project Co-lead

ICF, Fairfax, Virginia, USA

Contributed to conception or design and contributed to drafting report

Christopher A. Sibrizzi, M.P.H., Lead Work Assignment Manager

Extracted data and contributed to drafting report

Alexander J. Lindahl, M.P.H.

Contributed to drafting report

Pamela A. Hartman, M.E.M.

Contributors

Division of the National Toxicology Program, National Institute of Environmental Health

Sciences, Research Triangle Park, North Carolina, USA

Provided oversight for external peer review

Sheena L. Scruggs, Ph.D.

Mary S. Wolfe, Ph.D.

Critically reviewed draft report

Mamta V. Behl, Ph.D.

Kyla W. Taylor, Ph.D.

Kelly Government Services, Research Triangle Park, North Carolina, USA

Supported external peer review

Elizabeth A. Maull, Ph.D. (retired from NIEHS, Research Triangle Park, North Carolina, USA)

U.S. Environmental Protection Agency, Washington, District of Columbia, USA

Critically reviewed draft report

Krista Christensen, Ph.D.

Elizabeth G. Radke, Ph.D.

ICF, Fairfax, Virginia, USA

Provided contract oversight

David F. Burch, M.E.M.

Jessica A. Wignall, M.S.P.H.

Extracted data

Yousuf Ahmad, M.P.H.

Kathleen A. Clark, B.A.

Lindsey M. Green, M.P.H.

Camryn R. Lieb, B.A.

Alessandria J. Schumacher, B.A.

Prepared and edited report

Sarah K. Colley, M.S.P.H.

Jeremy S. Frye, M.S.L.S.

Kaitlin A. Geary, B.S.

Tara Hamilton, M.S.

Courtney R. Lemeris, B.A.

Rachel C. McGill, B.S.

Supported external peer review

Canden N. Byrd, B.S.

Blake C. Riley, B.S.

Megan C. Rooney, B.A.

Peer Review

The Division of the National Toxicology Program (DNTP) at the National Institute of

Environmental Health Sciences (NIEHS) conducted an external peer review of the draft NIEHS

Report on Evaluating Features and Application of Neurodevelopmental Tests in Epidemiological

Studies by letter in May 2021 by the experts listed below. Reviewer selection and document

review followed established DNTP practices. The reviewers were charged to:

(1) Peer review the draft NIEHS Report on Evaluating Features and Application of

Neurodevelopmental Tests in Epidemiological Studies.

(2) Comment on whether the draft document and draft database are clearly stated and

objectively presented.

DNTP carefully considered reviewer comments in finalizing this report.

Peer Reviewers

Kim N. Dietrich, Ph.D.

Professor Emeritus, Department of Environmental Health, Division of Epidemiology and

Biostatistics

University of Cincinnati College of Medicine

Cincinnati, Ohio, USA

Nancy Fiedler, Ph.D.

Deputy Director and Professor, Environmental and Occupational Health Sciences Institute,

Exposure Science and Epidemiology

Rutgers University

New Brunswick, New Jersey, USA

Publication Details

Publisher: National Institute of Environmental Health Sciences

Publishing Location: Research Triangle Park, NC

ISSN: 2768-5632

DOI: https://doi.org/10.22427/NIEHS-01

Report Series: NIEHS Report Series

Report Series Number: 01

Official citation: White RF, Braun JM, Kopylev L, Segal D, Sibrizzi CA, Lindahl AJ, Hartman

PA, Bucher JR. 2022. NIEHS report on evaluating features and application of

neurodevelopmental tests in epidemiological studies. Research Triangle Park, NC: National

Institute of Environmental Health Sciences. NIEHS Report 01.

Acknowledgments

This work was supported by the Intramural Research Program (ES103316, ES103318, and

ES103319) at the National Institute of Environmental Health Sciences (NIEHS), National

Institutes of Health and performed for NIEHS under contracts GS00Q14OADU417 (Order No.

HHSN273201600015U) and HHSN271201800012I.

Conflict of Interest

Individuals identified as authors in the About This Report section have certified that they have

no known real or apparent conflict of interest related to neurodevelopmental tests in

epidemiological studies.

Abstract

Psychometric tests are routinely used to assess facets of neurodevelopment in epidemiological

studies and are a tool for estimating the potential effects of toxicants on the nervous system.

When assessing the validity and reliability of results from a series of epidemiological studies, the

specific psychometric tests utilized and factors affecting their administration in human

populations present unique challenges. This report describes the historical application of

psychometric tests to the study of the neurodevelopmental toxicity of methylmercury and defines

neurodevelopmental domains that are assessed with these instruments. Principles are proposed

for evaluating the validity and reliability of psychometric tests and for identifying potential

sources of bias in the selection and administration of these tests in human populations.

Preface

Exposures to an increasing number of substances in our environment are being recognized as

affecting human neurological development. The purpose of this report is to provide information

to assist in determining if a given psychometric test is adequate for assessing a specific

neurobehavioral domain or trait in human studies of neurotoxicants. Psychometric tests used in

epidemiology studies that were reviewed as part of the U.S. Environmental Protection Agency’s

Integrated Risk Information System assessment of methylmercury were selected for review. The

reviewed tests included those that were widely used in this literature, as well as those used

frequently in studies of other neurotoxicants.

The psychometric tests were assigned to broad neurodevelopmental domains, and principles

were developed and used to evaluate 81 psychometric tests for reliability, validity, normative

data, and standardized methods for administering the tests. This report provides examples of

factors that could present problems and introduce bias in the test results. The examples explain

elements that would lead to different levels of bias and cover many aspects of study

performance. We hope this information is useful to regulatory agencies charged with interpreting

and evaluating the quality of epidemiology studies using these psychometric tests, and to the

research community in designing epidemiology studies to assess factors affecting neurobehavior.

Introduction

This report presents basic principles for reviewing neurodevelopmental tests used in research that

assesses associations between neurodevelopmental outcomes and exposure to known or putative

neurotoxic chemicals during neurodevelopment. The emphasis is on the validity and reliability of psychometric tests used to

assess neurodevelopment and methodological aspects of administering and interpreting them in

epidemiological studies. Psychometric tests are used extensively because they provide a valid

and noninvasive means to quantitatively assess brain function or dysfunction. This document

refers throughout to psychometric tests designed to assess specific neurodevelopmental traits

and to the scores obtained from these tests, which are used to quantify and compare the quality

of performance within and across individuals or populations.

The impetus for these principles and this document grew out of the need for assistance in

assessing the quality of and potential biases in these tests when applying systematic review

methodology to pediatric environmental epidemiology literature. Specifically, this document was

prepared to aid the development of the in-process U.S. Environmental Protection Agency

(EPA) Integrated Risk Information System (IRIS) toxicological review of methylmercury

(MeHg). To develop these principles, psychometric tests used to estimate the effects of MeHg on

neurodevelopmental outcomes were evaluated (see Process of Selecting Tests section). MeHg served

as a model toxicant for developing this document and its associated principles because

the developmental neurotoxicity of MeHg has been extensively studied for over 50 years in

cohorts around the world, and the literature evaluating neurodevelopmental effects of MeHg

captures many representative psychometric tests used in epidemiological research on many other

neurotoxicants.

The work described here is important because it recognizes the challenges involved in

conducting and reviewing population-based studies of neurodevelopmental toxicity due to a wide

array of neurodevelopmental outcomes, the differing psychometric properties of available tests,

and the variations in methods used to administer, score, and interpret test results in individual

studies. To address these challenges, it is essential to understand the role that psychometric tests

play in assessing neurodevelopment, the validity and reliability of these tests, and the least biased

methods to administer, score, and interpret psychometric tests.

While some sub-disciplines of epidemiology, such as molecular epidemiology, have similar

principles or “best practices” to maintain high validity and reliability by conducting external and

internal quality assurance and quality control procedures (e.g., duplicate measurements, standard

calibration materials, and interlaboratory proficiency programs) (Gallo et al. 2011), this

document is not intended to serve as a set of best practices for the administration of psychometric

tests when studying developmental neurotoxicants. Rather, its goal is to provide the necessary

background information for understanding psychometric principles and how psychometric tests

can be validly and reliably developed, evaluated, and employed when studying neurotoxicity in

epidemiological studies. Ultimately, the principles proposed here are designed to assist in

systematic reviews of epidemiological studies of potentially neurotoxic chemical exposures and

aid in the design of future research studies.

This document is organized as follows. The first section (Psychometric Tests) provides

background on psychometric tests and neurodevelopment. The second section (Methodology

for Identifying Psychometric Tests and Extracting Test Information) describes the methods for

identifying and selecting psychometric tests and extracting information on psychometric features

of the selected tests. Then, the principles discussed in this document are presented in two main

parts. In Part 1 (Principles for Evaluating Psychometric Tests), the neurodevelopmental domains

and the instruments that have been used to assess neurodevelopment in MeHg research are

described. Psychometric features, strengths, and weaknesses of these instruments are assessed

with the goal of providing information that can be used to determine if an instrument is more

or less suitable for addressing a research question of interest. In Part 2 (Potential Sources of

Bias Related to Selection and Administration of Neurodevelopmental Assessments in

Epidemiological Studies), principles related to the application of psychometric tests are

described along with potential sources of bias related to using the tests to assess

neurodevelopment in epidemiological research.

Psychometric Tests

Background

The psychometric outcomes that were evaluated in this document derive from three traditions

that have developed over the last 100+ years in the fields of psychology and psychometric

assessment. These include the design and evolving development of quantified psychometric

tests, the growth of the field of neuropsychological testing, and the exponential increase in

neurodevelopmental assessment using psychometric tools in research studies (Lezak 1976).

Throughout this document, these tests are purposely referred to as “neurodevelopmental” and are

used in the life-span sense of development/neurodevelopment. While the term “developmental”

is often understood to refer to health and functioning in the prenatal and childhood periods,

changes in brain structure, function, and organization begin at conception and continue across

the life span. Thus, this document uses a life-span developmental psychology approach to

characterize brain health and function from conception through death. Indeed, this approach

aligns with the approach adopted by many epidemiological studies that were established to study

the effect of early life neurotoxicant exposures on neurodevelopment from infancy through

childhood and adolescence and into adulthood. Thus, tests are included and discussed in this

document that assess adult function, as the administration of childhood tests would be

inappropriate at older ages. This approach is consistent with the principle that very early

exposures can affect brain structure, organization, and function across the life span and possibly

in different ways at different points in the life span.

Psychometric test development began in the early 20th century when psychologists introduced

the theory of g or general intelligence, a term for an individual’s overall level of cognitive skills

that affects their intellectual functioning. Early tests from Piaget, Binet, and Wechsler were

developed to measure g. Although primitive compared with the sophisticated tests used

today, they included many features still found in contemporary tests, including

several subtests; standardized instructions and test materials; scoring rules; and basic norms

allowing for comparisons of performance among examinees of the same age, including child and

adult populations. These tests (especially Stanford-Binet and Wechsler) gave rise to the concept

of IQ as an entity, generally quantified as a standard score of 100 as average, with a standard

deviation (SD) of 15 or 16, depending on the test. It should be noted that such scores are not

meant to serve as the sole measures of an individual’s aptitude or potential for future success

given that they are influenced by a variety of factors (Spreen and Strauss 1991). Systematic

differences in test performance may exist if individuals come from a population that is distinct

from the test’s target population or population used to design the test in terms of race/ethnicity,

culture, socioeconomic status, etc. Thus, the scores are a kind of benchmark but are not a

concrete entity.

Tests of academic achievement (reading, writing, mathematical skills) also began to appear in

the educational psychology literature as school psychologists and personnel required means of

assessing whether students were progressing at the expected rate in academic knowledge for age

and grade level. These tests also grew in sophistication and became standardized over time.

Other tests that attempted to assess child development/neurodevelopment also began to appear

that would allow pediatricians and other clinicians to determine if a child was on a normal

neurodevelopmental trajectory or was behind or ahead for his/her age. Tests were also developed

to determine if children had typical patterns of cognitive, behavioral, emotional, social,

psychiatric, or personality traits (Spreen and Strauss 1991).

Around the mid-20th century, the field of clinical neuropsychology emerged and grew

dramatically for several decades. In this field, psychometric tests are used to assess brain

function to determine if it is normal or abnormal. If abnormalities are noted, the

neuropsychologist may opine on the specific brain structures or systems that are dysfunctional or

their likely neurological cause. It had been known since the 1800s that some parts of the brain

were responsible for specific kinds of behavior. Discoveries of these relationships stemmed from

brain autopsy findings from symptomatic patients. For example, patients with lesions in certain

parts of the frontal lobes developed the inability to speak (expressive aphasia), while patients

with lesions in posterior portions of the temporal lobes could speak but could not comprehend

the speech of others (receptive aphasia). As more associations between specific brain regions and

behavioral and cognitive functions became apparent, the notion of structure-function

relationships within the brain became more prominent. An impetus for this field was the absence,

at that time, of the neuroimaging techniques now available that allow clinicians to visualize brain

tumors, strokes, and other kinds of lesions related to neurological illnesses (e.g., multiple

sclerosis plaques, effects of traumatic brain injury). When patients presented to physicians with

new acute neurological symptoms, it was important to diagnose how the brain was

malfunctioning and which parts of the brain might be affected in order to determine whether a

neurosurgical intervention might help or cure the patient (White 1992).

Although neurologists and other clinicians could assess behaviors to try to localize structural

damage in the brain, the techniques were generally specific to individual clinicians and not

systematically quantified or comparable among clinicians. Individual neuropsychologists and the

field of neuropsychology applied standardized psychometric methods to the evaluation of

specific aspects of brain function in attempts to determine whether a neurological condition

might be present and associated with abnormal test scores, where the lesions/abnormalities in the

brain might be located, and possible underlying diagnoses (e.g., dementias, seizure disorders). In

fact, neurosurgeons sometimes relied on the lesion location diagnoses of neuropsychologists to

treat patients. Some neuropsychologists approached this work by developing new tests designed

to assess specific aspects of brain function. For example, the Halstead-Reitan group developed a

battery of 10 tests that assessed motor function on both sides of the body, conceptual reasoning

skills, working memory, and other skills. Other neuropsychological groups developed their own

tests of brain function but also drew from existing psychometric tests that had already been

developed, standardized, and normed to determine if these instruments could aid in assessing the

functional integrity of the brain and locating lesions in specific brain areas or systems. Thus, the

existing IQ tests and their subtests became included in this clinical and research endeavor. As

time has passed, an extensive literature has developed describing what these various kinds of

tests demonstrate about specific types of brain damage and neurological disorders, validating the

tests applied as measures of CNS function and, in fact, as specific indicators of certain kinds of

disordered (or functional) brain-behavior relationships. This knowledge continues to evolve

given the capacity to “watch” the brain and its systems function as participants undergo tests and

carry out behaviors through methods like functional brain imaging (White and Reuben In Press).

Initially, the field of neuropsychology was focused heavily on adults. This probably arose from

the greater number of adult patients with neurological disorders. It also could be related,

however, to the fact that localized lesions with predictable behavioral anomalies are more

common in adults. When a lesion or disorder appears in the developed adult brain, structure-

function relationships are well established, and it is often clear where the problem might be.

Neurodevelopment is more complicated. During child development, the brain is more plastic

with regard to how specific structures mediate behaviors, is capable of compensating when a

structure or brain area is diseased (or even absent), can be influenced by acute or chronic

exposures during susceptible periods, and develops and expresses behavior in a dynamic fashion

given the circumstances occurring at any stage of development. Because of all these

considerations, structure-function relationships in early brain development are more diffuse and

less “focal” than in adults, and insults to the developing brain—both toxicants and other

neurological conditions—may have different effects than would insults on the mature brain.

Given these circumstances, neurodevelopmental assessments have used a combination of

existing tests for children (e.g., IQ, developmental, academic); adaptations of adult tests for

children; and specialized tests that have been developed, standardized, normed, and validated in

clinical populations (e.g., Developmental Neuropsychological Assessment, Behavior Assessment

System for Children, Child Behavior Checklist, tests that assess clinical conditions in children)

(White 2004).

Almost all psychometric tests provide raw scores that can be converted into scaled

scores (mean = 10, SD = 3), T-scores (mean = 50, SD = 10), standard scores (mean = 100,

SD = 15), and corresponding percentiles using normative or reference data. This practice allows

participants’ scores to be compared with one another after removing the effects of age, sex, or

other characteristics on each participant’s raw score. For instance, even within narrow age

intervals, older children have higher average raw scores on tests of mental and psychomotor

development than younger children. By using standard scores, the traits of participants who

differ in age (or other characteristics) can be compared. Moreover, an individual’s performance

over time can be examined in relation to reference or normative data. Such comparisons between

groups or within individuals over time are often made in terms of number of points or in units of

SDs away from the average score.
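
As an illustration of these conversions, the following Python sketch re-expresses a raw score on the three metrics listed above. It assumes a simple linear transformation and normally distributed scores in the reference group; published tests typically supply age-specific lookup tables instead. The norm values, age bands, and function names are hypothetical and are not drawn from any test manual.

```python
from statistics import NormalDist

# Metrics named in the text, as (mean, SD).
METRICS = {
    "scaled": (10, 3),
    "t_score": (50, 10),
    "standard": (100, 15),
}

def z_score(raw, norm_mean, norm_sd):
    """Distance of a raw score from the reference-group mean, in SD units."""
    return (raw - norm_mean) / norm_sd

def convert(z, metric):
    """Re-express a z-score on one of the metrics above."""
    mean, sd = METRICS[metric]
    return mean + sd * z

def percentile(z):
    """Percentile rank, assuming normally distributed reference scores."""
    return 100 * NormalDist().cdf(z)

# Hypothetical norms: raw-score (mean, SD) for two age bands.
norms = {"6y": (20.0, 4.0), "7y": (26.0, 4.0)}

# A 6-year-old with raw score 24 and a 7-year-old with raw score 26.
z_younger = z_score(24.0, *norms["6y"])
z_older = z_score(26.0, *norms["7y"])

for label, z in (("younger", z_younger), ("older", z_older)):
    print(label, convert(z, "standard"), convert(z, "t_score"), round(percentile(z), 1))
```

In this hypothetical case the older child has the higher raw score, but the younger child has the higher standard score because each child is compared with same-age peers.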

Note that normative data are not required for comparison of scores between individuals when

appropriate measures are taken to validly analyze raw scores; however, normative data are

necessary and routinely used when making decisions about an individual’s health care, clinical

diagnosis, vocational services, education, legal status, etc. In addition, when appropriate

normative data are available, normative scores derived from them can be used as the outcome in statistical models.

There is a longstanding tension regarding the interpretation of results from population-based

studies that examine neurodevelopmental toxicity. Generally, this friction arises from the lack of

consensus regarding the “clinical significance” of effects observed in epidemiological studies. In

clinical situations, it is appropriate to refer to “abnormalities” or “deficits” in a functional area

when an examinee performs poorly on a test (usually 1–2 SDs away from the average score). In

epidemiological studies, effect sizes often fall short of being clinically significant in that they

occur within the “normal range” of test performance and are typically less than half of an SD

away from the average score. However, subtle shifts in a continuous trait can have profound

impacts on the tails of a distribution in a population (Needleman 1990; Rose 1985; 2001; Weiss

2000). For instance, a 5-point decrease in a population’s IQ would nearly double the number of

people classified as intellectually disabled (Braun 2016). Finally, it is important to refer to

subclinical or preclinical findings of lower scores in these situations as “decrements” in

performance or “diminished” performance, rather than “deficits” or “impairments” (White and

Reuben In Press).
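
The effect of a small mean shift on the tail of a distribution can be sketched numerically. The Python example below assumes IQ is normally distributed with a mean of 100 and an SD of 15 and uses a cutoff of 70; these are idealized assumptions made for illustration, and published estimates depend on the distribution and cutoff actually used.

```python
from statistics import NormalDist

def fraction_below(cutoff, mean, sd=15.0):
    """Share of a normally distributed population scoring below the cutoff."""
    return NormalDist(mu=mean, sigma=sd).cdf(cutoff)

baseline = fraction_below(70, mean=100)  # unshifted population
shifted = fraction_below(70, mean=95)    # population mean lowered by 5 points
ratio = shifted / baseline

print(f"below 70: {baseline:.2%} -> {shifted:.2%} (ratio {ratio:.2f})")
```

Under these assumptions, the share of the population scoring below 70 rises from roughly 2.3% to roughly 4.8%, even though the 5-point shift is only one-third of an SD.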

Test Domains

In the fields of neurodevelopmental psychometric tests and neuropsychology, psychologists

often talk about “domains” of behavior. These domains are generally highly functional in

nature—that is, they focus on a particular kind of activity such as attention. They are helpful in

some ways simply because they allow investigators to group tests into meaningful categories.

However, several other issues concerning the domains are important to consider and are discussed

below.

While these descriptions of neurodevelopmental traits are presented as if they are isolated

attributes, it is acknowledged that they are dimensional traits that interact with other domains in

determining behavior. Indeed, this type of framework (e.g., Research Domain Criteria) has been

adopted by the National Institute of Mental Health to complement the Diagnostic and Statistical

Manual approach to characterizing mental health and neurodevelopmental disorders (Morris and

Cuthbert 2012).

First, domains roughly map onto the functioning of specific brain regions, but there is generally

not a one-to-one correspondence between a domain and a brain region. For instance, while the

capacity to pay attention and to monitor or inhibit behaviors is strongly related to functioning of

the frontal lobe, other brain regions (e.g., striatum) may also be involved in these behaviors.

Similarly, learning and memory functions are mediated by the limbic system, especially the

hippocampus and related structures, although other brain structures play a role. Second, many

psychometric instruments that are applied in the fields of neurodevelopment, neuropsychological

assessment, and developmental neurotoxicity are omnibus tests for which performance is

determined by many different kinds of behavioral processes at the same time and that do not fit

neatly into a functional domain.

Relatedly, some individual tests require highly complex integration of many kinds of functions,

and even tests or subtests considered to be domain-focused often rely on several brain regions for

successful performance. These kinds of tests may better fit under a category such as “multi-

determined tests,” but this is difficult to do because all tests rely on more than one type of ability

(i.e., no test is a pure measure of the trait). Interestingly, the tests with many functional demands

are often sensitive to an insult such as a neurotoxicant exposure, although they generally do not

reveal very much about what portions of the brain the insult has affected. For example, coding

tests require respondents to look at a code that pairs symbols with digits and to write in the

appropriate digit in a blank space below the symbol according to the code. This task requires an

examinee to recognize visual symbols, write quickly and accurately, scan visual arrays quickly,

form associations to remember pairs of symbols, inhibit interference from outside stimuli, follow

rows and columns, and so on. These multiple determinants of response quality affect the ultimate

score—a deficit in any one of them can reduce the score. This is less true of tests that require

only paying attention, or writing quickly, or remembering data.

Finally, there are subdomains associated with each domain. For example, “attention” can include

the ability to recognize stimuli, capacity to ignore irrelevant stimuli, speed of responding to

stimuli, ability to repeat back numbers in order, and so on. Verbal tests can include the ability to

provide abstract definitions of vocabulary words, comprehension of spoken language, and/or

comprehension of written language. Toxicant-related decrements in performance within each of

these subdomains can have different implications for the effects of the exposure on specific

structures or systems within the brain during neurodevelopment.

The domains defined here refer to those that were used to evaluate the psychometric outcomes

included in the neurodevelopmental research under discussion. Appendix A contains a list of the

domains and the tests categorized within each domain (White 2004).

Omnibus Tests

General intelligence/IQ: Tests measuring general intelligence purport to assess the examinee’s

overall level of cognitive or intellectual abilities, usually by applying a variety of types of

intellectual challenges.

Academic achievement: Tests in this domain evaluate the child’s ability to carry out academic

skills such as reading, spelling, vocabulary, arithmetic, and more complex abilities.

Developmental: These tests assess how successfully an infant or child is acquiring age-

appropriate verbal, motor, and social skills.

Neuropsychological assessment batteries: These tests assess a variety of the domain-specific

functions described below and may or may not include an overall test score (usually they do not).

Clinical Assessment Instruments

Clinical conditions: These tests assess a specific diagnostic outcome or set of outcomes (e.g.,

autism spectrum disorder, attention-deficit disorder, anxiety disorders, neuropsychiatric

conditions) to produce a criterion-based clinical diagnosis.

Mental status: Mental status examinations are screening tools used to determine whether an

individual’s cognitive function is within expected limits for age.

Domain-specific Tests

Attention: This domain includes evaluation of capacity to monitor incoming stimuli, inhibit

responses to irrelevant stimuli, hold small bits of information for immediate use (“pre-memory”),

and listen to instructions and communications. The prefrontal cortex prominently mediates

performance on these tasks.

Executive function: Executive function refers to the capacity to manipulate complex stimuli,

reason abstractly, develop effective strategies for task completion, and problem solve. It is

broadly defined by three subdomains: working memory, cognitive flexibility, and inhibition.

Working memory refers to the capacity to hold and simultaneously manipulate information and

data from stimuli for task completion. Cognitive flexibility is the ability to adjust behavior in the

face of changing demands and goals. Inhibition includes both the ability to ignore irrelevant

stimuli and the ability to suppress a triggered behavior to sustain efforts to complete a goal. Relevant brain

structures include the prefrontal cortex and subcortical white matter connections.

Motor function: Fine motor control, speed, accuracy, and coordination are the key components of

motor function. This domain is usually assessed using the hand (manual motor skills). Relevant

brain structures that support completion of these tasks include the motor cortex of the frontal

lobes, the cerebellum, and the extrapyramidal system (e.g., basal ganglia).

Learning and memory: Learning and memory comprise several processes that include coding

visual or verbal information into short-term memory stores, retaining information longer term,

recalling newly learned information spontaneously, and recognizing newly learned information

that may or may not be recalled spontaneously. Retrograde memory refers to the ability to recall

information learned in the more distant past. Brain structures related to learning and memory

include the limbic system, specifically the hippocampus, and frontal cortex.

Social-emotional: This domain encompasses expressions of affect or mood (temporary or

chronic), including anxiety, depression, and irritability; ability to control strong emotions or

reactions to events or people; communication patterns; traits such as tendency to externalize

(often associated with attention-deficit/hyperactivity disorder [ADHD] diagnosis) or internalize

(often associated with diagnosis of depression); reciprocal social behaviors, repetitive and

restricted behaviors (often associated with autism spectrum disorder [ASD]); and personality

traits. Relevant brain structures include the prefrontal cortex and limbic system.

Verbal/language: Verbal skills include recognition of word meanings, ability to define

vocabulary words abstractly, and comprehension of verbalizations. This domain is sometimes

subdivided into verbal and language, with language skills referring more directly to skills

associated with aphasia, such as confrontation naming, repetition, simple comprehension, and

simple writing and reading. Most aspects of language/verbal function are mediated by the

dominant cerebral hemisphere (usually left hemisphere, though aspects of complex interpretation

of verbal information and appreciation of verbally expressed humor often involve the

nondominant hemisphere).

Visuospatial function: This domain includes the ability to evaluate pictures and drawings with

missing details, appreciate and replicate visual designs and drawings, recognize gestalts, detect

embedded figures, understand maps and navigate directions, perform facial and abstract design

recognition, and complete puzzle and block design assembly. (Tests within this domain

sometimes have a significant motor component.) Relevant brain structures include the parietal

and occipital lobes, the cerebellum, the extrapyramidal system, and subcortical white matter

connections.

Processing speed: Time to complete tasks is assessed by this domain, which in recent years has

been added to IQ and other omnibus tests. No parent tests assessing processing speed were

identified in the epidemiological studies from the in-progress EPA IRIS toxicological review of

MeHg, resulting in no evaluation table for this domain in Appendix B. Subtests belonging to

omnibus tests that assess processing speed have been identified and listed for this domain.

Relevant brain structures include the frontal and prefrontal cortex, cerebellum and basal ganglia,

and the white matter connections within the brain.

Methodology for Identifying Psychometric Tests and

Extracting Test Information

This section describes the methods for identifying and selecting psychometric tests and

extracting information on psychometric features of the selected tests. Test selection and

information extraction were conducted to support an evaluation of the psychometric features of

each test to determine the adequacy of tests in assessing neurodevelopmental or central nervous

system (CNS) function in studies of neurotoxicants. Test selection and information extraction

were conducted first and are described here. The test evaluation process and test evaluation

results (found in Appendix B) are referenced in this section but are described in detail in the

Principles for Evaluating Psychometric Tests section of the document.

Process of Selecting Tests

Specific psychometric tests for evaluation were selected and information on relevant test features

was extracted using a set of studies identified from the systematic review and dose-response

analysis for the in-progress EPA IRIS toxicological review of MeHg (see protocol for more

details https://cfpub.epa.gov/ncea/iris_drafts/recordisplay.cfm?deid=345309; see Introduction for

description of the focus on the MeHg literature in this document).

Peer-reviewed and published epidemiological studies compiled by EPA by July 2019 as part of

the in-progress EPA IRIS toxicological review of MeHg were reviewed and a list of

psychometric tests was developed (n = 134 tests) based on these studies. Next, a multistage

process was implemented to identify information to extract for describing the psychometric

features of the identified tests. If accessible, physical manuals or electronic copies of test

manuals for the identified tests were reviewed as a first step, as these manuals provided the best

available primary sources of information. As a secondary source of information, several editions

of two academic textbooks on neuropsychological testing, A Compendium of

Neuropsychological Tests (Spreen and Strauss 1991; 1998; Strauss et al. 2006) and

Neuropsychological Assessment (Lezak 1995; Lezak et al. 2004; Lezak et al. 2012), were

manually searched to identify relevant test features in those sources. If access to a test manual

was available and/or usable information was identified in these academic textbooks, further

information was generally not sought. Tests with information from manuals or academic

textbooks were automatically included in the extraction and evaluation process.

In certain cases, when a physical or electronic copy of a manual or information from one of these

academic textbooks was not available, peer-reviewed literature (original research and literature

reviews) was identified by searching online journal databases for the test name, any related

abbreviations, and relevant keywords (e.g., “psychometric,” “valid*,” “reliability”). EBSCOhost

was used to search multiple online databases, including Medline, CINAHL Complete,

PsycARTICLES, PsycINFO, PsycBOOKS, and PsycEXTRA. Titles and abstracts were screened,

and full-text articles were obtained if the abstract discussed psychometric properties, factor

analyses, or comparisons with other neuropsychological tests. Studies on groups of people with

specific conditions that may affect test performance (e.g., deaf patients) were not included.

Ultimately, tests were included in the extraction and evaluation process if enough information

was available to assess a majority of the evaluation principles (see Principles for Evaluating

Psychometric Tests section) following the steps outlined above. After retrieving sources of

information for each test, relevant information was extracted and summarized in an Excel

spreadsheet (titled “DNT_Test_Information_Extraction_Database.xlsx”; referred to in this

document as the “extraction table”; Appendix C).

Some tests were excluded from the extraction and evaluation process due to idiosyncratic

features or insufficient information. Tests were identified as idiosyncratic if they appeared in a

single MeHg study, were used only in a specific population, and/or were only used in studies

later determined to not be conducive to dose-response analysis for the in-progress EPA IRIS

toxicological review of MeHg. Tests were categorized as having insufficient information if no

manual was available and secondary sources, including peer-reviewed literature, did not provide

information to assess a majority of the evaluation principles.

Of the 134 tests initially identified, 81 were included in the extraction and evaluation process

(see Appendix A for the lists of included and excluded tests). Thirty-three tests with

idiosyncratic features and 20 tests with insufficient information were excluded from the

extraction and evaluation process. The included tests were distributed across information

source(s) as follows:

• Eight tests with information from manuals only

• Twenty tests with information from manuals and academic textbooks

• Two tests with information from manuals and peer-reviewed literature

• Eighteen tests with information from academic textbooks only

• Three tests with information from academic textbooks and peer-reviewed literature

• Thirty tests with information from peer-reviewed literature only
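
The counts reported above can be cross-checked with simple arithmetic. The short Python sketch below uses only the numbers stated in this section; the variable names are illustrative.

```python
# Counts as reported in this section.
identified = 134
excluded = {
    "idiosyncratic features": 33,
    "insufficient information": 20,
}
included_by_source = {
    "manuals only": 8,
    "manuals and academic textbooks": 20,
    "manuals and peer-reviewed literature": 2,
    "academic textbooks only": 18,
    "academic textbooks and peer-reviewed literature": 3,
    "peer-reviewed literature only": 30,
}

included = sum(included_by_source.values())
total_excluded = sum(excluded.values())

# Tests for which a manual was among the sources.
with_manual = sum(n for source, n in included_by_source.items() if source.startswith("manuals"))

print(included, total_excluded, included + total_excluded, with_manual)
```

The six source categories sum to the 81 included tests, and the included and excluded tests together account for all 134 tests initially identified.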

The included tests (n = 81 tests) were organized into broad domains based on a framework

previously developed by the co-author Dr. Roberta White (White 2004; White et al. 2009; White

2011). These broad domains included omnibus tests (intelligence quotient [IQ], academic

achievement, developmental, and neuropsychological assessment batteries); clinical assessment

instruments (clinical conditions and mental status assessments); and domain-specific functional

tasks (attention, executive function, learning and memory, motor function, social-emotional,

verbal/language, and visuospatial tests). Note that some tests may assess multiple domains.

Subtests and subscales within tests that were used in the epidemiological studies from the in-

progress EPA IRIS toxicological review of MeHg were also identified; however, information

extraction and test evaluation were conducted at the parent-test level and not for specific

subtests, given resource constraints that inhibited the assessment of specific features of subtests.

While each test evaluated in this document has been categorized by domain, there are cases for

which subtests/subscales within omnibus tests assess domain-specific functions. In these cases,

for each domain, the identified subtests or subscales are listed in a footnote below their

respective domain-specific evaluation table in Appendix B. Please see the Principles for

Evaluating Psychometric Tests section for further detail on the evaluation process, the

evaluation results (Appendix B), and a description of how the evaluations may be applicable

for these cases.

Test Information Extraction

The data in the extraction table (Appendix C) for each test include the following:

• Test name and any alternative names

• Test domain

• Test publication date

• Time required for administration

• Appropriate age range for test administration

• Original publication language(s)

• Availability of the test in other languages

• Availability of culturally adapted version(s) of the test

• Source population or culture from which the test was developed

• Test reliability (internal consistency and test-retest)

• Test validation (content, construct, criterion)

• Sensitivity and specificity of the test

• Description of quantitative outcomes provided by the test

• Test standardization or normative data

• Applicability for use as a screening tool for clinical diagnoses

• Training requirements and qualifications for test administrators, and the availability of

specific instructions or a test manual

• Appropriate test environment

The sample populations used to develop the test and its norms were described in the extraction

table (Appendix C) to the extent possible based on the source material. Direct quotes from the

sources of information were extracted when appropriate. If no information was found for an

extracted topic, it was recorded that no information was present in the available source materials.

The extraction table was used to inform the test evaluation process. During the peer-reviewed

literature search process, supplemental information was found for several tests for which

information from manuals or academic textbooks had already been extracted. In these cases, this

supplemental information was added to the corresponding test extractions.
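
One way to picture a row of the extraction table is as a record with one field per extracted topic. The Python sketch below is illustrative only: the class name, field names, and example values are hypothetical and do not reproduce the layout of the actual spreadsheet in Appendix C.

```python
from dataclasses import dataclass, field, fields
from typing import List, Optional

NOT_FOUND = "No information present in the available source materials"

@dataclass
class ExtractionRecord:
    """One test's extracted information; field names are hypothetical."""
    name: str
    domain: str
    alternative_names: List[str] = field(default_factory=list)
    publication_date: Optional[str] = None
    administration_time: Optional[str] = None
    age_range: Optional[str] = None
    original_languages: Optional[str] = None
    other_languages: Optional[str] = None
    cultural_adaptations: Optional[str] = None
    source_population: Optional[str] = None
    reliability: Optional[str] = None
    validation: Optional[str] = None
    sensitivity_specificity: Optional[str] = None
    quantitative_outcomes: Optional[str] = None
    normative_data: Optional[str] = None
    screening_applicability: Optional[str] = None
    administrator_requirements: Optional[str] = None
    test_environment: Optional[str] = None

    def value(self, topic):
        """Return the extracted text, or the standard note when none was found."""
        found = getattr(self, topic)
        return NOT_FOUND if found is None else found

    def missing_topics(self):
        """List the topics for which no information was extracted."""
        return [f.name for f in fields(self) if getattr(self, f.name) is None]

# Hypothetical test used purely for illustration.
record = ExtractionRecord(
    name="Example Coding Test",
    domain="Processing speed",
    age_range="6-16 years",
    reliability="Test-retest coefficient reported in manual",
)

print(record.value("test_environment"))
print(len(record.missing_topics()))
```

Recording an explicit "no information" note for empty topics, rather than leaving them blank, keeps missing information distinguishable from topics that were never reviewed.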

Potential Limitations of Test Selection and Data Extraction

Approach

One limitation of the test selection is that its scope was narrowed to studies identified from the

in-progress EPA IRIS toxicological review of MeHg. Studies of other well-characterized

neurotoxicants (e.g., lead, organophosphate pesticides) might yield additional tests that are

relevant to assessing developmental neurotoxicity, but including those literatures was beyond the

scope of the charge for developing this document. The approach used to select studies and

extract data on properties of psychometric tests was also limited by a lack of information for 20

of the 134 tests initially identified from the in-progress EPA IRIS toxicological review of MeHg.

In addition, conducting complete extraction and evaluation at the subtest level for the 81

included tests was beyond the scope of this effort. Thus, it is possible that subtests may be rated

differently from their parent test. Moreover, because the selection of tests was based on the tests

used in epidemiological studies identified for the in-progress EPA IRIS toxicological review of

MeHg, test selection may not reflect

the latest versions of tests used for studying other neurodevelopmental toxicants. Despite these

limitations, the tests selected and evaluated in this document are considered to be a

representative selection of tests for effects in the associated domains that would be applicable for

studying an array of neurodevelopmental toxicants, and the principles for evaluating the tests

could be applied to future research.

Access to test-specific information was limited because information was often not

available for older tests or for tests that have not been used extensively in research. Thus,

in general, lesser-used tests and older tests had less information or a poorer quality of

information related to the features included in the extraction table (Appendix C). In addition, the

sources of information varied among included tests (e.g., test manuals available for some tests

and peer-reviewed literature only for others), which led to variation in the availability and quality

of information. As a result, the features of individual psychometric tests were not systematically

evaluated using the same types of information sources. The more commonly used tests and those

with multiple and contemporaneous editions (e.g., the omnibus IQ tests such as the Wechsler,

Stanford-Binet, and Kaufman scales) were originally developed and evaluated using robust

processes conducted by the test developers (often commercial entities). Thus, they may have

more complete information because of their well-funded, rigorous development and the detailed

technical manuals that accompany the tests. This does not mean that other tests lack reliability or

validity, only that information on these tests may be more difficult to obtain. In some cases, the

manuals of tests with multiple versions did not contain information on specific topics included

in the extraction table when a specific version was being reviewed. In these cases, data from

manuals for other editions of the test were used or data were reported that relied on the expert

knowledge and judgement of the evaluator, Dr. Roberta White. When data were not available

from manuals or other literature (and therefore not reflected in the extraction table),

evaluator expert knowledge and judgement were utilized, and such ratings were noted in the

evaluation tables.

Data Availability

Data relevant for evaluating neurodevelopmental tests in epidemiological studies are included in

the extraction table available in the NTP Chemical Effects in Biological Systems (CEBS)

database: https://doi.org/10.22427/NIEHS-DATA-NIEHS-01 (NTP 2022).

Part 1: Principles for Evaluating Psychometric Tests

The purpose of this section is to provide principles that can be used by scientists and regulators

to determine if a psychometric test is adequate for assessing neurodevelopment or CNS function

(including specific neurobehavioral domains or traits) or to aid in the selection of psychometric

tests for research studies. While this document emphasizes developmental neurotoxicity studies

of chemical exposures, these principles could be extended to other exposures (e.g., psychosocial

stress, nutrition, etc.). The major principles for evaluating psychometric tests, which are

described below, are those commonly used by psychometrists for this purpose. They include

specific methods for evaluating aspects of the four overarching psychometric criterion areas:

reliability, validity, standardized administration methods, and normative data associated with

specific tests. It is critical to note that these criteria apply only to the features of the psychometric

tests themselves and not to the application of the test in a research setting. (Part 2 of this

document proposes criteria for test application.)

The assessment of test-specific aspects of reliability, validity, standardized test administration,

and normative data for the tests featured in Appendix B was based on information derived from

a combination of sources described in the Introduction to this document and summarized in the

extraction table (Appendix C). The two evaluators (Dr. Roberta White and Dr. Joseph Braun)

independently rated the specific aspects, or subcriteria, of each of the four psychometric criterion

areas using the data summarized in the extraction table.

The ratings for each subcriterion were the following: adequate, deficient, not applicable, or not

present (i.e., not enough information available to the evaluator). For the normative data

subcriteria, separate ratings were determined for adults and children (adulthood was defined as

beginning at age 18 years). It should be noted that the evaluative ratings used do not necessarily

dictate whether or not a test is appropriate for an individual study. For example, if a test or

outcome is being used to evaluate a specific brain function in a unique population, but the test

lacks adequate population norms, it can be used if its raw scores are appropriately analyzed. In

addition, some criteria were difficult to rate because the multiple information sources or

peer-reviewed studies consulted for a test sometimes varied considerably in quality or level of

detail or reported different results. Once

independent ratings were determined by both evaluators, a consensus meeting was held to

finalize them through discussion between the evaluators. Discussion was needed to arrive at a

consensus rating for approximately 20% of the ratings. A final review of ratings was completed

by the evaluators to ensure consistent application of evaluation criteria. Additional test

descriptions and rating justification notes were added to the evaluation tables when needed.

Explanatory notes for rating justifications were added for each instance of a deficient or not

present rating and for some adequate ratings that were not clearly derived from the material

provided in the extraction table (Appendix C). When data were not available in manuals or other

literature (and therefore not reflected in the extraction table), the evaluators based their ratings on

their own knowledge and noted this in the evaluation tables.
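
The rating and consensus steps described above can be sketched as follows. This Python example is a minimal illustration, not the evaluators' actual procedure or tooling: the four rating labels come from the text, while the subcriterion names, the ratings, and the function name are hypothetical.

```python
from enum import Enum

class Rating(Enum):
    ADEQUATE = "adequate"
    DEFICIENT = "deficient"
    NOT_APPLICABLE = "not applicable"
    NOT_PRESENT = "not present"  # not enough information available

def disagreements(first, second):
    """Subcriteria on which two independent sets of ratings differ."""
    return sorted(key for key in first if first[key] is not second[key])

# Hypothetical independent ratings for one test.
evaluator_1 = {
    "internal consistency": Rating.ADEQUATE,
    "test-retest reliability": Rating.ADEQUATE,
    "content validity": Rating.ADEQUATE,
    "construct validity": Rating.DEFICIENT,
    "criterion validity": Rating.NOT_PRESENT,
}
evaluator_2 = dict(evaluator_1)
evaluator_2["criterion validity"] = Rating.DEFICIENT

to_discuss = disagreements(evaluator_1, evaluator_2)
share = len(to_discuss) / len(evaluator_1)

print(to_discuss, f"{share:.0%} of ratings need consensus discussion")
```

Only the ratings flagged by the comparison would go to the consensus meeting; matching ratings stand as rated.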

While all four psychometric criterion areas (reliability, validity, standardized administration

methods, and normative data) are important in evaluating psychometric tests, it should be noted

that reliability, validity, and standardized administration methods are considered most important

in selecting psychometric tests for research studies and in determining the adequacy of

psychometric tests to assess neurodevelopment or CNS function in epidemiological research. It

is recommended that scientists and regulators consider the strength of the normative data only for

tests that are considered adequate regarding reliability, validity, and standardized administration

methods.

Given the above considerations, the evaluation ratings for normative data are presented

separately from the ratings for reliability, validity, and standardized administration methods

because the adequacy of normative data is most relevant once a valid and reliable test has been

developed. Moreover, adequacy of normative data is only applicable in epidemiological studies

that use normative scores as outcomes rather than raw scores. In addition, many domain-specific

tests are used to test hypotheses regarding specific skills and abilities to assess specific brain

systems and are often not developed with the same resources as larger omnibus tests, resulting in

limitations to or a lack of normative data. These tests can still be valid and reliable, but the scores

they produce might need to be adjusted for age, sex, or other factors predictive of raw scores.
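
A simple form of such an adjustment is to regress raw scores on age and analyze the residuals. The Python sketch below illustrates this with ordinary least squares on a single covariate; the data and function names are hypothetical, and in practice investigators more often include age, sex, and other predictors directly as covariates in the exposure-outcome model.

```python
from statistics import mean

def fit_line(x, y):
    """Ordinary least squares slope and intercept for one predictor."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx

def age_adjusted(ages, raw_scores):
    """Residual raw scores after removing the linear trend with age."""
    slope, intercept = fit_line(ages, raw_scores)
    return [r - (intercept + slope * a) for a, r in zip(ages, raw_scores)]

# Hypothetical data: raw scores rise with age in years.
ages = [6.0, 6.5, 7.0, 7.5, 8.0]
raw = [18.0, 22.0, 23.0, 27.0, 30.0]

slope, intercept = fit_line(ages, raw)
residuals = age_adjusted(ages, raw)

print(round(slope, 2), [round(r, 2) for r in residuals])
```

The residuals express each child's score relative to what would be expected at that age within the study sample, which serves a purpose similar to external norms when adequate norms are unavailable.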

Appendix B contains the evaluation ratings and notes for the tests by domain. For each domain,

the ratings for reliability, validity, and standardized administration methods are provided in one

table, and the ratings for normative data are provided in a second table. Subtests and subscales

within omnibus tests assessing domain-specific functions that have been identified in

epidemiological studies from the in-progress EPA IRIS toxicological review of MeHg are listed

below their respective domain-specific evaluation tables. The evaluation tables provide the

publication date of each test, as age of the test at the time a study was completed can be an

important factor in considering whether a test was appropriately applied. For example, older tests

may contain items or questions that no longer persist in general knowledge (e.g., naming

outdated technology or household items, such as a record player). However, because test age is

not static (i.e., it depends upon lag time between date of test and date of study, as well as reasons

investigators chose the test), evaluation criteria were not developed for this variable. Factors

related to the age of a test at the time it was employed in a study are considered in some detail in

Part 2 of this document.

This document does not provide an overall designation of adequate or inadequate (or any other

ranking) for specific tests. The complexities of choosing, applying, and interpreting

neurobehavioral methods in research settings prevent simplistic summary evaluations. Some

users of this document may consider making this designation when they are trying to determine

if a study using a given test will be included in a meta-analysis, if the results related to a test are

to be used for policy decisions, or if the test will be administered as part of a research study.

Thus, the relative importance of the four criteria (and subcriteria) in making these types of

decisions will differ with the goal of the end user. For example, researchers selecting a test for

administration in a research study might weigh the availability of specific, applicable normative

data more heavily than the other criteria because they are conducting a study in a culturally

unique population. As another example, scientists selecting tests for inclusion in a meta-analysis

of a specific neurobehavioral domain might place more weight on the validity of a test if they

want to ensure that only results from tests accurately measuring the specific domain are included.

Some caveats to applying the criteria in this document should be noted. First, designations of

adequate or deficient are applied to the criteria without additional gradations. This is because

there are standards available to designate a test as adequate or deficient for some aspects of the

psychometric criteria, but additional gradations are not available or widely used (White and

Proctor 1992; White et al. 1994). While alternative methods (e.g., risk-of-bias analysis)

could provide finer gradations of each criterion, systematic approaches that could do this for

psychometric tests are not available. Thus, a binary designation allows users to determine if a

given test meets the criteria as described below in a reasonable enough fashion that it would be

acceptable for use in population-based research. This approach is consistent with clinical and

research practice as some psychometric tests are used in clinical or research settings when

the test has known inadequacies. For instance, this situation can arise when there are no

better alternatives for assessing a given domain or when a test assesses a highly specific

cognitive process.

Many psychometric tests include both an omnibus assessment of a neurobehavioral function or

successful neurodevelopment as well as subtests assessing specific domains related to that

function. Therefore, some specific summary or subscale scores within an omnibus test may be

adequate while others are deficient. In general, focusing on the summary scores (e.g., IQ

measures, domain summaries) from tests is recommended for most purposes. In cases for which

a test provides multiple domain or trait scores but no summary measure(s), using the overall

pattern of adequacy/deficiency across domain or trait scores is recommended to determine the

adequacy of a given criterion for the test as a whole. If the goal of the user is to apply a limited

number of an instrument’s subtests (one or more) to assess specific domain functioning, only

information relevant to the subscale(s) of interest should be considered by the user. This

information is generally available in test manuals and can also be found in the peer-reviewed or

gray literature.

The major principles as noted above for evaluating psychometric tests (reliability, validity,

standardized administration methods, and normative data) are described below.

Reliability

For a psychometric test to be reliable, its results should be consistent across time (test-retest

reliability), across items (internal reliability), and across raters (inter-rater reliability). Inter-rater

reliability is discussed in Part 2 of this document because it is not an intrinsic feature of a test.

Internal reliability demands that the individual items on a given test should measure the

same domain(s) or trait(s) (i.e., internal consistency). Reproducibility, or test-retest reliability,

requires that consistent scores would be obtained from the same individual upon repeated testing.

To assess the internal reliability of a test, items within the test should be correlated with each

other to ensure internal consistency. To assess the test-retest reliability of a test, it should be

administered in a standardized manner to the same person twice, and the score(s) from the

repeated measurements should be consistent.
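
As an illustration of how inter-item consistency can be summarized in a single coefficient, the sketch below computes Cronbach's alpha, one commonly reported internal consistency statistic. The function name `cronbach_alpha` and the input layout (one row of item scores per respondent) are assumptions made for this example. Test manuals may report other coefficients, such as split-half reliability.

```python
from statistics import variance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a respondents-by-items table of scores.

    item_scores: list of rows, one per respondent; each row holds that
    respondent's score on every item (illustrative layout).
    """
    n_items = len(item_scores[0])
    if n_items < 2 or len(item_scores) < 2:
        raise ValueError("need at least two items and two respondents")
    if any(len(row) != n_items for row in item_scores):
        raise ValueError("every respondent must have a score for every item")
    # Variance of each item across respondents.
    item_variances = [
        variance([row[i] for row in item_scores]) for i in range(n_items)
    ]
    # Variance of the total (summed) score across respondents.
    total_variance = variance([sum(row) for row in item_scores])
    if total_variance == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (n_items / (n_items - 1)) * (1 - sum(item_variances) / total_variance)
```

Alpha approaches 1 when items rise and fall together across respondents and decreases as items become less correlated with one another.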

When assessing internal consistency, a high correlation among items on domain-specific

subscales indicates that the test items measure the same trait (e.g., as indicated by having a high

split-half reliability). The most popular criterion used to assess internal consistency was

developed by Sattler (2001). He recommended that tests with reliability coefficients <0.6 (e.g.,

correlations mentioned above) be deemed unreliable. Moreover, for research purposes, Sattler

(2001) suggested that tests with reliability coefficients ≥0.6 and <0.7 be considered marginally

reliable and those with coefficients ≥0.7 be considered relatively reliable.
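
The cut points attributed to Sattler (2001) above can be expressed as a small lookup. This is a minimal sketch; the function name `classify_reliability` is hypothetical, and the labels simply restate the wording used in this section.

```python
def classify_reliability(coefficient):
    """Label a reliability coefficient using the cut points described above."""
    if coefficient < 0.6:
        return "unreliable"
    if coefficient < 0.7:
        return "marginally reliable"
    return "relatively reliable"
```

For example, a coefficient of 0.65 would be labeled "marginally reliable" under these cut points.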

For test-retest reliability, high correlations between repeated administrations of a test to the same

person within an appropriate time interval ensure that the test can consistently measure trait(s)

assessed by the instrument in an individual. Test-retest reliability is generally assessed by

intraclass correlation coefficient (ICC; ideally >0.4), Pearson correlation coefficient

(ideally >0.3), or Cohen's kappa coefficient (>0.4).

Determining adequacy: To be considered “adequate,” a given psychometric test must have internal

consistency reliability coefficients of ≥0.6 (e.g., Cronbach’s alpha, ICC). Test-retest

reliability should meet one of the following criteria as indicated above:

ICC >0.4, Pearson correlation coefficient >0.3, or Cohen's kappa coefficient >0.4. Some

deviations for subtests are acceptable if summary scales or the majority of subtests are at least

marginally reliable.
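
The reliability decision rule can be sketched as a simple check. This is an illustrative simplification under stated assumptions: the function name `reliability_adequate` and its parameters are hypothetical, a single internal consistency coefficient is supplied for the scale being evaluated, and the allowance for deviations in individual subtests is not modeled because it requires judgment.

```python
def reliability_adequate(internal_consistency, icc=None, pearson_r=None, kappa=None):
    """Apply the reliability thresholds described above to one scale.

    internal_consistency: e.g., Cronbach's alpha for the scale.
    icc, pearson_r, kappa: test-retest statistics; pass whichever are
    reported and leave the others as None.
    """
    internal_ok = internal_consistency >= 0.6
    # Test-retest reliability needs to meet only one of the listed criteria.
    retest_ok = (
        (icc is not None and icc > 0.4)
        or (pearson_r is not None and pearson_r > 0.3)
        or (kappa is not None and kappa > 0.4)
    )
    return internal_ok and retest_ok
```

Under this sketch, a scale with an internal consistency coefficient of 0.75 and a test-retest ICC of 0.5 would be flagged as adequate, whereas a scale with no reported test-retest statistic would not.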

Validity

Validity is typically assessed across three broad domains: content, construct, and criterion

validity. Each is distinct but ultimately all are related to a test’s ability to measure what it is

designed to measure. It is critical to note that validity is not a static, “all or none” metric and is

re-evaluated as a test is used in varied clinical practice and research settings over time.

Content validity is the extent to which the test items, tasks, and questions assess the trait that the

test is designed to measure. This can be thought of as a sampling issue, wherein the test content

should be representative of the population of all possible test content that could measure that

trait. Content validity is assessed by evaluating test themes, theoretical models, scientific

evidence supporting a test, domain definition, domain operationalization, item selection, and

item review. Review of content validity is often qualitative in nature and relies on expert

evaluation and judgment; however, quantitative techniques like factor analytic approaches are

often used to refine test content and confirm content validity.

Construct validity is the degree to which the test estimates the trait of interest using the items

selected for the test. It usually pertains to complex traits (e.g., intelligence). Note that a construct

is theoretical and requires accumulation of evidence from several sources beyond correlation of

tests purported to measure constructs such as intelligence. Construct validity is evaluated with

formal construct definitions, correlations with other tests that measure the same (convergent

validity) and different (divergent validity) construct(s), and factor analysis. Construct validity is

quantitatively assessed using results (typically correlation coefficients) from well-designed

studies that administer the test of interest to normative and clinical samples of individuals. There

are no strict thresholds to establish construct validity, but minimum correlation coefficients of

0.3 have been proposed (Lezak 1995; Lezak et al. 2004; Lezak et al. 2012). Correlations with

related tests reflect convergent validity, while relationships to tests that measure other traits

should be low, establishing discriminant (divergent) validity.
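
To illustrate how a convergent validity correlation might be computed and compared with the proposed minimum of 0.3, the sketch below calculates a Pearson correlation between scores on two tests given to the same individuals. The names `pearson_r`, `CONVERGENT_MINIMUM`, and `meets_convergent_minimum` are hypothetical. No cut point is applied for discriminant validity because none is specified above.

```python
from math import sqrt

# Proposed minimum correlation for construct validity, as cited above.
CONVERGENT_MINIMUM = 0.3


def pearson_r(x, y):
    """Pearson correlation between paired scores on two tests."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need paired scores for at least two individuals")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("scores on each test must vary")
    return sxy / sqrt(sxx * syy)


def meets_convergent_minimum(new_test_scores, established_test_scores):
    """True if the correlation with a same-construct test is at least 0.3."""
    return pearson_r(new_test_scores, established_test_scores) >= CONVERGENT_MINIMUM
```

The same `pearson_r` function could be applied to scores from a test of an unrelated trait, where a low correlation would be the expected pattern.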

Finally, criterion validity assesses the ability of a psychometric test to predict an individual’s

performance or outcome now (concurrent validity) or in the future (predictive validity). It

requires identification of an appropriate criterion for comparison (e.g., clinical disease related to

the trait), assessment of the test and criterion, calculation of classification accuracy, or

correlation with other tests/criteria.

Determining adequacy: Content, construct, and criterion validity should be separately evaluated

for each test.

(1) Content validity: Qualitatively determine that the test is theoretically grounded, had

item content appropriately identified from a large item pool that was expertly judged

and curated, and has defined and theoretically justified domains. Factor analysis can

be used to confirm that included items are specific to the domain(s) of interest.

(2) Construct validity: Must show validity through positive correlations with other

measures of the same construct or similar tests (i.e., convergent validity). Ideally, the test

should not be correlated with unrelated constructs (i.e., divergent validity). Factor

analysis can be used to support any summary or subtest scales.

(3) Criterion validity: The criterion should be well defined, and the test must be reasonably

accurate in its association with, or prediction of, the criterion (e.g., kappa > 0.6).
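
For criterion validity, agreement between a test-based classification and the criterion can be summarized with Cohen's kappa, the statistic used in the example threshold above. The following is a minimal sketch for categorical classifications. The function name `cohens_kappa` and the input layout (two parallel lists of labels, one pair per individual) are assumptions made for illustration.

```python
from collections import Counter


def cohens_kappa(test_labels, criterion_labels):
    """Cohen's kappa for agreement between test and criterion classifications."""
    n = len(test_labels)
    if n == 0 or n != len(criterion_labels):
        raise ValueError("need the same nonzero number of labels from each source")
    # Observed proportion of individuals classified identically.
    observed = sum(t == c for t, c in zip(test_labels, criterion_labels)) / n
    # Agreement expected by chance from the marginal category frequencies.
    test_counts = Counter(test_labels)
    criterion_counts = Counter(criterion_labels)
    expected = sum(
        test_counts[label] * criterion_counts[label] for label in test_counts
    ) / (n * n)
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)
```

A kappa of 1 reflects perfect agreement and a kappa of 0 reflects agreement no better than chance. The resulting value can then be compared with whatever threshold the evaluator adopts, such as the example of 0.6 given in item (3).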

Standardized Administration Methods

Psychometric tests must be administered in a rigorous and standardized fashion. This precision is

critical in population-based studies when groups of participants with different levels of exposure

are being compared with one another, as non-standardized administration could introduce

random or systematic bias. When comparing results from one study with another, it is also

critical to ensure that data were collected in the same fashion (i.e., the studies carried out the

same test in the same way).

Well-designed psychometric tests include explicit guidelines regarding test material

presentation/organization, instructions to participants, instructions to test administrators on

scoring participant responses and calculating test scores, and explicit phrasing for oral

instructions and/or verbal questions. Some psychometric tests use stimulus material (e.g.,