New Jersey Department of Environmental Protection

Site Remediation Program

DATA QUALITY ASSESSMENT AND

DATA USABILITY EVALUATION

TECHNICAL GUIDANCE

Version 1.0

April 2014

Preamble

The results of analyses performed on environmental matrices are used to determine if remediation is needed. Because of the nature of environmental matrices, the limitations of analytical methods, the characteristics of analytes, and the inherent error associated with any sampling and analysis procedure, the results of environmental analysis may contain an element of uncertainty, may in some cases be significantly biased, and therefore may not be representative of the actual concentrations of the analytes in the environmental matrices. Thus, an evaluation of the quality of the analytical data in relation to their intended use is important so that the investigator makes decisions that are supported by data of known and sufficient quality.

There are many ways to evaluate the quality of analytical data in terms of precision, accuracy, representativeness, comparability, completeness and sensitivity in relation to the intended use of the data. These six parameters are collectively referred to as the PARCCS parameters. This guidance document describes a two-step, NJDEP-accepted process for data evaluation. The first step in the process consists of an assessment of data quality. The second step is an evaluation to determine whether the data can be used to support the decisions that will be made using those data. Use of this guidance provides consistency in the evaluation and presentation of data quality information, which will facilitate review. If an alternative process is used, that process should be documented to explain the reasoning behind it, and demonstrating that the data are of known and sufficient quality and are usable for their intended purpose may then require a significant commitment of resources.

To assist the investigator in obtaining analytical data of known quality, the NJDEP Analytical Technical Guidance Work Group developed the Data of Known Quality Protocols (DKQPs). The DKQPs include specific laboratory Quality Assurance and Quality Control (QA/QC) criteria that produce analytical data of known and documented quality for analytical methods. When Data of Known Quality are achieved for a particular data set, the investigator will have confidence that the laboratory has followed the DKQPs and has described nonconformances, if any, and that the investigator has adequate information to make judgments regarding data quality.


The Data of Known Quality performance standards are given in Appendix B of the NJDEP Site Remediation Program, Data of Known Quality Protocols Technical Guidance, April 2014. These protocols will enhance the ability of the investigator to readily obtain from the laboratory the information necessary to identify and document the precision, accuracy and sensitivity of data.


Preamble .......................................................................................................................... i

1. Intended Use of Guidance Document ........................................................................ 1

2. Purpose ...................................................................................................................... 2

3. Document Overview ................................................................................................... 5

4. Procedures ................................................................................................................. 6

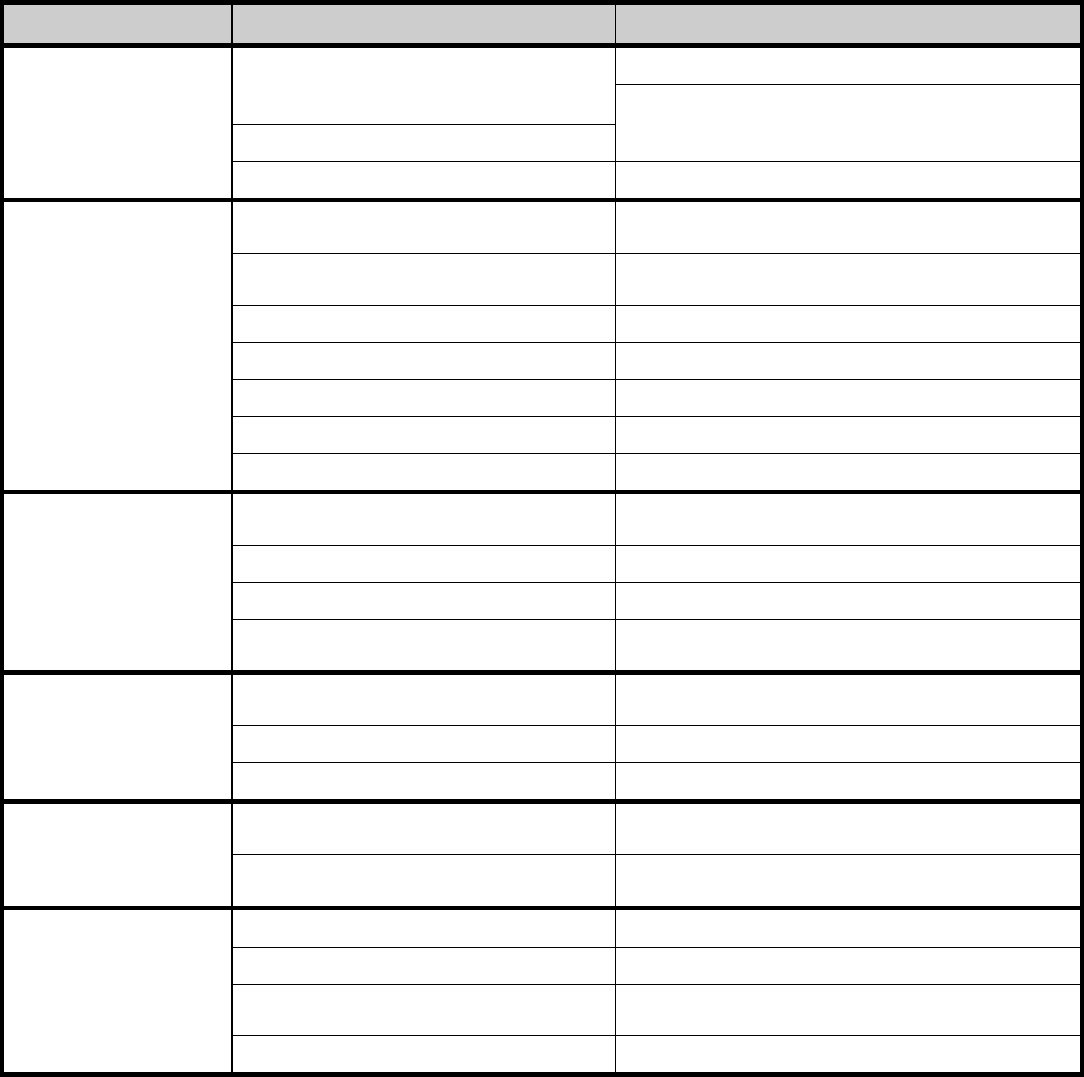

Figure 1: DQA and DUE Flow Chart............................................................................... 7

4.1 Data Quality Objectives ...................................................................................... 8

4.2 Uncertainty in Analytical Data ............................................................................ 9

4.3 Types of Analytical Data .................................................................................... 9

4.4 PARCCs Parameters ....................................................................................... 10

4.4.1 Precision .................................................................................................... 10

4.4.2 Accuracy .................................................................................................... 11

4.4.3 Representativeness ................................................................................... 12

4.4.4 Comparability ............................................................................................. 12

4.4.5 Completeness ............................................................................................ 13

4.4.6 Sensitivity .................................................................................................. 13

4.5 Data Quality Assessment ................................................................................. 14

4.5.1 Batch Quality Control versus Site Specific Quality Control ........................ 15

4.5.2 Evaluating Significant Quality Control Variances ....................................... 16

4.5.3 Poorly Performing Compounds .................................................................. 16

iii

4.5.4 Common Laboratory Contaminants ........................................................... 17

4.5.5 Bias ........................................................................................................... 17

4.6 Data Usability Evaluation ................................................................................. 17

4.6.1 Evaluation of Bias ...................................................................................... 20

4.6.2 General Quality Control Information .......................................................... 23

4.6.2.1 Chain of Custody Forms .......................................................................... 23

4.6.2.2 Sample Preservation Holding Times and Handling Time ........................ 23

4.6.2.3 Equipment, Trip and Field Blanks ............................................................ 26

4.6.2.4 Field Duplicates ....................................................................................... 28

4.6.3 Laboratory Quality Control Information ...................................................... 30

4.6.3.1 Data of Known Quality Conformance/Nonconformance

Summary Questionnaire .......................................................................... 31

4.6.3.2 Reporting Limits....................................................................................... 31

4.6.3.3 Method Blanks ......................................................................................... 33

4.6.3.4 Laboratory Duplicates .............................................................................. 34

4.6.3.5 Surrogates ............................................................................................... 34

4.6.3.6 Laboratory Control Samples (LCS) .......................................................... 37

4.6.3.7 Matrix Spike/Matrix Spike Duplicates and Matrix Spike/Matrix Duplicate 38

4.6.3.8 Internal Standards ................................................................................... 41

4.6.3.9 Serial Dilutions (ICP and ICP/MS) ........................................................... 42

4.6.3.10 Interference Check Solution .................................................................. 42

4.6.3.11 Matrix Spikes and Duplicates ................................................................ 44

4.6.3.12 Internal Standards for ICP/MS (for Metals) ............................................ 45

4.6.4 Using Multiple Lines of Evidence to Evaluate Laboratory QC Information . 45

4.6.5 Data Usability Evaluations for Non-DKQ Analytical Data........................... 47

4.6.6 Data Usability Evaluations Using Multiple Lines of Evidence

from DQOs and the CSM .......................................................................... 49

iv

4.6.7 Factors to be Considered During Data Usability Evaluations .................... 50

4.6.8 Documentation of Data Quality Assessments and Data Usability

Evaluations ............................................................................................... 52

REFERENCES .............................................................................................................. 54

Appendix A Supplemental Information on Data Quality Objectives and Quality

Assurance Project Plans .......................................................................... 56

Appendix B QC Information Summary and Measurement Performance Criteria .......... 58

Appendix C QC Information to be Reviewed During Data Quality Assessments ........ 66

Appendix D Data Quality Assessment Worksheets and Summary of DKQ

Acceptance Criteria .................................................................................. 71

Appendix E Evaluating Significant QA/QC Variances .................................................. 85

Appendix F Poorly Performing Compounds ................................................................. 92

Appendix G Range of Data Usability Evaluation Outcomes ......................................... 95

Appendix G Range of Data Usability Evaluation Outcomes ......................................... 96

Appendix H Data Usability Evaluation Worksheet ...................................................... 105

Appendix I Surrogates and Internal Standards ........................................................... 109

Appendix J Supplemental Examples Using Multiple Lines of Evidence ..................... 113

Appendix K: Glossary .................................................................................................. 119

Appendix L: List of Acronyms ..................................................................................... 130

v

1. Intended Use of Guidance Document

This guidance is designed to help the person responsible for conducting remediation to comply with the Department's requirements established by the Technical Requirements for Site Remediation (Technical Rules), N.J.A.C. 7:26E. Because this guidance will be used by many different people involved in the remediation of a contaminated site, such as Licensed Site Remediation Professionals (LSRPs), non-LSRP environmental consultants and other environmental professionals, the generic term “investigator” will be used to refer to any person who uses this guidance to remediate a contaminated site on behalf of a remediating party, including the remediating party itself.

The procedures for a person to vary from the technical requirements in regulation are outlined in the Technical Rules at N.J.A.C. 7:26E-1.7. Variances from a technical requirement or guidance must be documented and be adequately supported with data or other information. In applying technical guidance, the Department recognizes that professional judgment may result in a range of interpretations on the application of the guidance to site conditions.

This guidance supersedes previous Department guidance issued on this topic. Technical guidance may be used immediately upon issuance. However, the NJDEP recognizes the challenge of using newly issued technical guidance when a remediation affected by the guidance may have already been conducted or is currently in progress. To provide for the reasonable implementation of new technical guidance, the NJDEP will allow a 6-month “phase-in” period between the date the technical guidance is issued final (or the revision date) and the time it should be used.

This guidance was prepared with stakeholder input. The following people served on the committee that prepared this document:

• Greg Toffoli, Chair (Department), Office of Data Quality

• Nancy Rothman, Ph.D., New Environmental Horizons, Inc.

• Rodger Ferguson, CHMM LSRP, Pennjersey Environmental Consulting

• Stuart Nagourney (Department), Office of Quality Assurance

• David Robinson, LSRP, Synergy Environmental, Inc.

• Joseph Sanguiliano (Department), Office of Data Quality

• Phillip Worby, Accutest Laboratories, Inc.


2. Purpose

The purpose of this document is to provide guidance on how to review and subsequently use analytical data generated pursuant to the remediation of a discharge of a contaminant(s). Laboratory Quality Assurance and Quality Control (QA/QC) is a comprehensive program used to enhance and document the quality of analytical data. QA involves planning, implementation, assessment, reporting, and quality improvement to establish the reliability of laboratory data. QC procedures are the specific tools that are used to achieve this reliability.

Evaluating the quality of analytical data to determine whether the data are of sufficient quality for the intended purpose is a two-step process. The first step of the process is a data quality assessment (DQA) to identify and summarize any quality control problems that occurred during laboratory analysis (QC nonconformances). The results of the DQA are used to perform the second step, which is a data usability evaluation (DUE) to determine whether or not the quality of the analytical data is sufficient for the intended purpose.

To assist the investigator in obtaining usable, “good” analytical data, the NJDEP Analytical Technical Guidance Work Group developed the Data of Known Quality Protocols (DKQPs). The DKQPs are a collection of analytical methods that contain specific performance criteria and are based on the conventional analytical methods published by the U.S. Environmental Protection Agency (EPA). DKQPs have been developed for the most commonly used analytical methods and may be developed for other methods in the future. Analytical data generated using the DKQPs are termed Data of Known Quality (DKQ).

When the DKQPs are followed, the investigator can have confidence that the data are of known and documented quality. This enables the investigator to evaluate whether the data are usable. (When the performance criteria in the DKQPs are met, it is likely that the data will be usable for project decisions.) Information regarding the DKQPs and laboratory QA/QC is presented in the NJDEP guidance document titled NJDEP Site Remediation Program, Data of Known Quality Protocols Technical Guidance, April 2014 (DKQP Guidance). The DKQP Guidance and DKQPs are published on the NJDEP web site at:

http://www.nj.gov/dep/srp/guidance/index.html#analytic_methods


The DKQP Guidance includes the “Data of Known Quality Conformance/Nonconformance Summary Questionnaire,” which the investigator may ask the laboratory to complete to indicate whether the data meet the guidelines for DKQ. The guidance also describes the narrative of QA/QC nonconformances that must be included as a laboratory deliverable pursuant to N.J.A.C. 7:26E Appendix A. When DKQ criteria are achieved for a particular data set, the investigator will have confidence that the laboratory has followed the DKQPs and has described nonconformances, if any, and that the investigator has adequate information to make judgments regarding data quality.

A basic premise of the DKQPs is that good communication and the exchange of information between the investigator and the laboratory will increase the likelihood that the quality of the analytical data will meet project-specific Data Quality Objectives (DQOs), and therefore, will be suitable for the intended purpose. To this end, the “Example: Project Communication Form” has been included with the DKQP Guidance (Appendix A) to provide an outline of the information that a laboratory should have prior to analyzing the associated samples.

The process of obtaining analytical data that are of sufficient quality for the intended purpose and evaluating the quality of analytical data in relation to project-specific DQOs occurs throughout the course of a project. It is the investigator’s responsibility to perform the DQA/DUE process; therefore, the investigator’s contact with the laboratory should be limited to obtaining clarification of any issues that were not adequately addressed in the narrative (nonconformance summary) and, if provided, the Data of Known Quality Conformance/Nonconformance Summary Questionnaire (see the DKQP Guidance). It should be noted that the investigator, not the laboratory, is responsible for determining the usability of data.

It is not unusual for laboratory reports to contain QC nonconformances, especially for analyses that have extensive analyte lists, such as Methods 8260B (Volatile Organics) and 8270C (Semivolatile Organics). It is unlikely, and not expected, that every analyte will pass all of the QC criteria. In many cases, the DQA and DUE will reveal QC nonconformances that do not affect the usability of the analytical data for the intended purpose. In these cases, the investigator and others who will be relying on the data may have confidence that the quality of the data is appropriate for the intended purpose.

In other cases, the DQA and DUE will reveal QC nonconformances that will affect the usability of the analytical data for the intended purpose. In these cases, the investigator develops an understanding of the limitations of the analytical data (e.g., through a conceptual site model (CSM)) and can avoid making decisions that are not technically supported and may not be fully protective of human health and the environment.

It is important to note that uncertainty introduced through the collection of non-representative samples or an inadequate number of samples will, in many cases, exceed the uncertainty caused by laboratory analysis of the samples. It is imperative that the investigator follow the appropriate regulations and guidance documents to ensure that the number and location of samples collected and analyzed are sufficient to provide adequate characterization of site conditions.

This guidance does not require that formal data validation (such as that outlined in the NJDEP Site Remediation Program Standard Operating Procedure (SOP) for Analytical Data Validation of Target Analyte List (TAL) – Inorganics, Revision No. 5, SOP No. 5.A.2) be performed in all instances. Such documents describe formal, systematic processes for reviewing analytical data. These processes involve, for example, verification of derived results, inspection of raw data, and review of chromatograms, mass spectra and inter-element correction factors to ascertain that the data set meets the data validation criteria and the DQOs specified in the quality assurance project plan (QAPP). In most cases, use of the DKQPs will allow the investigator to perform a DQA without conducting formal data validation. In cases where formal data validation is necessary, the investigator will have to evaluate the data in accordance with applicable NJDEP and/or EPA guidance and SOPs. Please note that if data validation is necessary, then a full data deliverable package is required. (For example, full validation may be required where site conditions have made it difficult for the laboratory to meet the quality control requirements of a DKQP and the issuance of a Remedial Action Outcome (RAO) hangs in the balance.)


3. Document Overview

The DQA and DUE constitute a two-step process that is designed to evaluate the quality of analytical data to determine if the data are of sufficient quality for the intended purpose. The DQA is an assessment by the investigator of the laboratory quality control data, the laboratory report, and the laboratory narrative to identify and summarize QC nonconformances. The DUE is an evaluation by the investigator to determine if the analytical data (which may include nonconformances) are of sufficient quality for the intended purpose. The DUE uses the results of the DQA and evaluates the quality of the analytical data in relation to the project-specific DQOs and the intended use of the data. The DQA should be performed in real time as the data are received throughout the course of a project. If issues with the data are found, the project can be adjusted in real time so that enough data of sufficient quality are gathered before the DUE begins. The DUE is performed whenever the data are used to make decisions.
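The DQA step lends itself to a simple tabulation. The following Python sketch, offered only as an illustration, compares each reported QC result with its acceptance limits and lists the QC nonconformances that the DUE would then consider. The `QCResult` record layout, the function name, and the numeric limits in the usage example are hypothetical placeholders; actual acceptance criteria come from the DKQPs or the project QAPP, and the DUE itself remains a matter of professional judgment that is not reduced to code here.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class QCResult:
    """One laboratory QC measurement (hypothetical record layout)."""
    qc_type: str                  # e.g. "LCS recovery (%)"
    analyte: str
    value: float
    lower_limit: Optional[float]  # None if the criterion has no lower bound
    upper_limit: Optional[float]  # None if the criterion has no upper bound


def data_quality_assessment(qc_results: List[QCResult]) -> List[str]:
    """DQA sketch: describe every QC result that falls outside its limits."""
    nonconformances = []
    for qc in qc_results:
        too_low = qc.lower_limit is not None and qc.value < qc.lower_limit
        too_high = qc.upper_limit is not None and qc.value > qc.upper_limit
        if too_low or too_high:
            nonconformances.append(
                f"{qc.qc_type}, {qc.analyte}: {qc.value} outside "
                f"[{qc.lower_limit}, {qc.upper_limit}]"
            )
    return nonconformances


# Usage with placeholder limits (not DKQP values):
batch = [
    QCResult("LCS recovery (%)", "analyte A", 10.0, 70.0, 130.0),
    QCResult("Field duplicate RPD (%)", "analyte B", 25.0, None, 50.0),
]
summary = data_quality_assessment(batch)
print(summary)  # ['LCS recovery (%), analyte A: 10.0 outside [70.0, 130.0]']
```

The output of a step like this is the summary of QC nonconformances; deciding whether those nonconformances matter for the intended use is the separate DUE step.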


4. Procedures

The process of obtaining analytical data of sufficient quality for the intended purpose and evaluating the quality of analytical data in relation to project-specific DQOs and the CSM occurs throughout the course of a project. This process includes the following:

• Development of project-specific DQOs using professional judgment and taking into account applicable published rules and guidance documents;

• Communication with the laboratory regarding project-specific DQOs and the selection of appropriate analytical methods with the appropriate analytical sensitivity;

• Performance of QA and QC activities during the analysis of the samples, and reporting of QC results, by the laboratory;

• Performance of a DQA by the investigator when analytical results are received from the laboratory to identify QC nonconformances; and

• Performance of a DUE by the investigator to determine if the analytical data are of sufficient quality for the intended purpose. The DUE uses the results of the DQA and evaluates the quality of the analytical data in relation to the project-specific DQOs and the CSM.

This process is described in Figure 1: DQA and DUE Flow Chart.


Figure 1: DQA and DUE Flow Chart**

[Flow chart not reproduced. Elements shown: Start; CSM; Sampling Plan, Field QA/QC, and Method Selection; Analytical Data, Field Observations, Hydrogeological and Physical Data; DQA - Identify Non-Conformances; DUE - Are the Analytical Data Adequate for the Intended Purpose Based on a Review of QC Nonconformances and Information? (YES/NO); Representativeness Evaluation - Does the Information/Data Represent the Site and Support the CSM? (YES/NO); Collect Additional Lab or Field Data; Modify/Expand Investigation; Modify/Expand Investigation/Remediate; Data is Representative and of Adequate Quality to Support Environmental Professional’s Opinion.]

** State of Connecticut, Department of Environmental Protection, Laboratory Quality Assurance and Quality Control, Data Quality Assessment and Data Usability Evaluation Guidance Document, May 2009, Revised December 2010.

4.1 Data Quality Objectives

DQOs are developed by the investigator to ensure that a sufficient quantity and quality of analytical data are generated to meet the goals of the project and support defensible conclusions that protect human health and the environment. DQOs should be developed at the beginning of a project and revisited and modified as needed as the project progresses. Similarly, the quality of analytical data is evaluated in relation to the DQOs throughout the course of a project.

It is important to document the DQOs for a project in the context of the CSM so that there is a roadmap to follow during the project and documentation that the DQOs were met after the project is finished. The DQOs for a project can be documented in a project work plan, a QAPP, an environmental investigation report, or another document. DQOs are a required QAPP element per N.J.A.C. 7:26E-2.2. Sources of detailed information regarding the development of DQOs and QAPPs are listed in Appendix A of this document.

Typical analytical DQOs include, but are not limited to, the following:

• The QA/QC criteria specified in the DKQPs or in other analytical methods with a degree of QA/QC equivalent to that of the DKQPs;

• The applicable regulatory criteria, for example, the Appendix Table 1 - Specific Ground Water Quality Criteria in the Ground Water Quality Standards, N.J.A.C. 7:9C; and

• The target reporting limit (RL) for a specific substance when determining the extent and degree of contamination.
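One common way to express the target-RL objective is that the RL for each analyte should be at or below the applicable regulatory criterion, so that a not-detected result can be interpreted against that criterion. A minimal Python sketch of that check follows; the analyte names and numbers are hypothetical, and the RLs and criteria are assumed to be in the same units.

```python
from typing import Dict, List, Tuple


def analytes_failing_target_rl(limits: Dict[str, Tuple[float, float]]) -> List[str]:
    """Return analytes whose reporting limit (RL) exceeds the criterion.

    `limits` maps analyte name -> (reporting_limit, criterion), both in the
    same units (e.g. mg/kg). The values used below are hypothetical.
    """
    return sorted(
        analyte
        for analyte, (reporting_limit, criterion) in limits.items()
        if reporting_limit > criterion
    )


project_limits = {
    "analyte A": (0.5, 1.0),  # RL below criterion: target-RL objective met
    "analyte B": (5.0, 2.0),  # RL above criterion: an ND result cannot show compliance
}
print(analytes_failing_target_rl(project_limits))  # ['analyte B']
```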

The DQOs, which are based on the intended use of the analytical data, define how reliable the analytical data must be to make sound, rational decisions regarding data usability. For example, analytical data can be used by an investigator to determine if a discharge took place, evaluate the nature and extent of a discharge, confirm that remediation is complete, or determine compliance with an applicable standard/screening level as described in the “Definition of Terms” above.


4.2 Uncertainty in Analytical Data

Uncertainty exists in every aspect of sample collection and analysis, for example:

• Sample collection and homogeneity;

• Sample aliquoting;

• Sample preservation;

• Sample preparation; and

• Sample analysis.

The overall measurement error is the combined effect of the errors associated with all aspects of sample collection and analysis. The investigator needs to understand the impact of these uncertainties in order to establish data of known quality.
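When the individual sources of random error are independent, a standard way to combine them (propagation of uncertainty) is to add their variances, so relative standard deviations combine as a root sum of squares rather than as a simple sum. The Python sketch below applies that rule; the stage-by-stage percentages are hypothetical, and systematic errors (bias) are not captured this way.

```python
import math
from typing import Dict


def combined_rsd(stage_rsds: Dict[str, float]) -> float:
    """Combine independent relative standard deviations (%) in quadrature."""
    return math.sqrt(sum(rsd ** 2 for rsd in stage_rsds.values()))


# Hypothetical relative standard deviations (%) for each stage
stages = {
    "sample collection and homogeneity": 30.0,
    "sample preservation": 5.0,
    "sample preparation": 10.0,
    "sample analysis": 10.0,
}
total = combined_rsd(stages)
print(round(total, 1))  # 33.5
```

In this illustration the combined value (about 33.5%) is only slightly larger than the sample-collection term alone, which shows why uncertainty from sampling can outweigh the uncertainty contributed by laboratory analysis.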

It is important to understand this uncertainty because analytical data with an unknown amount of uncertainty may be difficult to use. However, it may still be possible to use the analytical data if the investigator understands the degree of uncertainty, which is assessed using the DQA/DUE process. The intended use of the analytical data determines how much uncertainty is acceptable and how dependable the analytical data must be.

For example, when analytical data will be used to determine if a site meets the Residential Direct Contact Soil Remediation Standards with a goal of obtaining an unrestricted Remedial Action Outcome (RAO), the investigator must have a greater degree of confidence in those data and must understand whether or not the degree of uncertainty will affect the usability of the data for their intended purpose. Conversely, in cases where contaminants are known to be present at concentrations significantly greater than the Non-Residential Direct Contact Soil Remediation Standards and further investigation and remediation will be conducted, the amount of uncertainty associated with those analytical data can be greater.
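The effect of uncertainty on a decision depends on how close a result is to the standard. The following Python sketch treats a result as plus or minus a relative uncertainty and reports whether the whole interval lies above the standard, below it, or straddles it. The uncertainty value and the standard are hypothetical, and this is an illustration rather than an NJDEP-prescribed procedure.

```python
def compare_to_standard(result: float, relative_uncertainty_pct: float,
                        standard: float) -> str:
    """Classify a result against a standard, allowing for +/- uncertainty."""
    low = result * (1 - relative_uncertainty_pct / 100)
    high = result * (1 + relative_uncertainty_pct / 100)
    if low > standard:
        return "above standard"
    if high < standard:
        return "below standard"
    return "indeterminate"  # the uncertainty interval straddles the standard


# Hypothetical standard of 100 mg/kg and +/-30% uncertainty
print(compare_to_standard(90.0, 30.0, 100.0))    # indeterminate
print(compare_to_standard(1000.0, 30.0, 100.0))  # above standard
```

With the same uncertainty, a result near the standard cannot be classified, whereas a result ten times the standard clearly exceeds it, mirroring the two cases described above.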

4.3 Types of Analytical Data

For the purposes of this guidance, there are two types of analytical data: data that are generated using the DKQPs and data that are not. Data generated using the DKQPs need a lesser degree of scrutiny because their uncertainty is better understood. Data not generated using the DKQPs may require a higher degree of scrutiny because they may have greater uncertainty. The type of data will usually determine the level of effort required for the DQA and DUE. For data generated using the DKQPs, an example of the information that should be submitted in a conformance/nonconformance summary is included in the DKQP Guidance (“Data of Known Quality Conformance/Nonconformance Summary Questionnaire”). Because many environmental investigation and remediation projects were ongoing before the DKQPs were developed, and because DKQPs are not published for all methods of analysis, many investigators will likely need to integrate data generated by methods other than the DKQPs with data generated in accordance with the DKQPs. This evaluation should be performed on a site-specific basis relative to the CSM and DQOs, but the basic principles should be similar in each situation. Section 4 of the DKQP Guidance presents information on the types of laboratory QC information that are needed to demonstrate equivalency with the DKQPs.

4.4 PARCCs Parameters

The PARCCS parameters are used to describe the quality of analytical data in quantitative and qualitative terms using the laboratory quality control information. The PARCCS parameters (precision, accuracy, representativeness, comparability, completeness, and sensitivity) are described below. The types of QC information that can be used to evaluate the quality of analytical data using the PARCCS parameters are provided in Appendix B of this document. Appendix B also contains a table that summarizes DKQ performance parameters and the recommended frequency for the various types of QC elements. Acceptance criteria associated with the PARCCS parameters are included in any site-specific QAPP and are also discussed in the “SRP Technical Guidance for Quality Assurance Project Plans” at

http://www.nj.gov/dep/srp/guidance/index.html#analytic_methods

4.4.1 Precision

Precision expresses the closeness of agreement, or degree of dispersion, between a series of measurements. Precision is a measure of the reproducibility of sample results.

The goal is to maintain a level of analytical precision consistent with the DQOs. As a conservative approach, it would be appropriate to compare the greatest numeric result from a series of measurements to the applicable regulatory criteria.

Precision is measured by calculating the relative percent difference (RPD) between two results generated from a similar source, or the percent relative standard deviation (%RSD) of multiple results. The formula for RPD is presented in the definition for precision in the Definition of Terms section of this document. For example, the analytical results for two field duplicates are 50 milligrams per kilogram (mg/kg) and 350 mg/kg for a specific analyte. The RPD for these results is 150%. Although the RPD is not itself a numerical measure of heterogeneity, this value suggests a high degree of heterogeneity in the sample matrix and a low degree of precision in the analytical results. Duplicate results that vary by this amount may require additional scrutiny, including qualification and/or resampling. When using duplicate results that have met DKQP acceptance criteria, the QAPP should state whether the average or the higher of the two values will be used for making data usability decisions.
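The RPD and %RSD calculations can be sketched in Python as follows. The sketch assumes the common definitions, RPD = |x1 - x2| / ((x1 + x2) / 2) x 100 and %RSD = (sample standard deviation / mean) x 100; these reproduce the 150% in the example above, but the formula in the Definition of Terms governs if it differs. The triplicate values are hypothetical.

```python
import statistics
from typing import Sequence


def relative_percent_difference(x1: float, x2: float) -> float:
    """RPD (%) between two results, relative to their mean."""
    mean = (x1 + x2) / 2
    if mean == 0:
        raise ValueError("RPD is undefined when both results are zero")
    return abs(x1 - x2) / mean * 100


def percent_rsd(values: Sequence[float]) -> float:
    """%RSD using the sample standard deviation (n - 1 denominator)."""
    return statistics.stdev(values) / statistics.mean(values) * 100


dup1, dup2 = 50.0, 350.0  # field duplicate results from the example, mg/kg
print(relative_percent_difference(dup1, dup2))         # 150.0
print(statistics.mean([dup1, dup2]), max(dup1, dup2))  # 200.0 350.0 (average, higher value)
print(round(percent_rsd([10.0, 12.0, 14.0]), 1))       # 16.7 (hypothetical triplicate)
```

The second line shows why the QAPP choice matters: the average (200 mg/kg) and the higher value (350 mg/kg) can lead to different conclusions when compared with a criterion.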

4.4.2 Accuracy

Accuracy describes the agreement between an observed value and an accepted reference or true value. The goal is to maintain a level of accuracy consistent with the DQOs. Accuracy is usually reported as a percent recovery, calculated using the formula in the definition for accuracy in the Definition of Terms section of this document. For example, the analytical result for a Laboratory Control Sample (LCS) is 5 mg/kg, and the LCS was known to contain 50 mg/kg of the analyte. The percent recovery for this analyte is therefore 10%, which indicates a low degree of accuracy for the analyte and a potential low bias for that analyte in any associated field sample in that analytical batch. Therefore, the actual concentration of the analyte in those samples is likely to be higher than reported. All of the possible field sample collection and analytical issues that may affect accuracy should be evaluated to determine the overall accuracy of a specific reported result. These data may require additional scrutiny, with the possibility of qualification or rejection based upon the DQOs. A list of common qualifiers has been included in Appendix D of this guidance document.
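The LCS example above can be sketched in Python. Percent recovery here is the measured value divided by the known value, times 100. The 70 to 130% window used in the sketch is a hypothetical placeholder, since actual acceptance limits are analyte- and method-specific and come from the DKQPs or the QAPP. For matrix spikes, the unspiked sample result would also have to be subtracted, which this sketch does not do.

```python
def percent_recovery(measured: float, known: float) -> float:
    """Percent recovery of a QC sample with a known (true) concentration."""
    return 100.0 * measured / known


def bias_indication(recovery_pct: float, lower: float = 70.0,
                    upper: float = 130.0) -> str:
    """Interpret a recovery against acceptance limits (placeholder defaults)."""
    if recovery_pct < lower:
        return "low bias: sample concentrations likely higher than reported"
    if recovery_pct > upper:
        return "high bias: sample concentrations likely lower than reported"
    return "within limits"


recovery = percent_recovery(measured=5.0, known=50.0)  # LCS example, mg/kg
print(recovery)                   # 10.0
print(bias_indication(recovery))  # low bias: sample concentrations likely higher than reported
```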


4.4.3 Representativeness

Representativeness is a qualitative parameter that describes how well the analytical data characterize an area of concern. Many factors can influence how representative the analytical results are of the area sampled. These factors include the selection of appropriate analytical procedures, the sampling plan, matrix heterogeneity, and the procedures and protocols used to collect, preserve, and transport samples. Information to be considered when evaluating how well the analytical data characterize an area of concern is presented in various SRP technical guidance documents and manuals.

For example, as part of a sampling plan, an investigator collected soil samples at

locations of stained soil near the base of several above-ground petroleum storage tanks

known to be more than seventy years old and observed to be in deteriorated condition.

The samples were analyzed for extractable petroleum hydrocarbons (EPH). All EPH results were reported as not detected (ND), i.e., below the method RL. The

investigator evaluated these results in relation to visual field observations that indicated

that petroleum-stained soil was present. The investigator questioned how well the

analytical results characterized the locations where stained soil was observed and

collected several additional samples for EPH analysis to confirm the results. The results of

the second set of samples collected from locations of stained soil indicated the presence

of EPH at concentrations of approximately 5,000 mg/kg. Therefore, the investigator

concluded that the original samples for which the analytical results were reported as ND

for EPH were not representative of the stained soil and that the second set of samples was representative of the stained soil.

4.4.4 Comparability

Comparability refers to the equivalency of sets of data. This goal is achieved through the

use of standard or similar techniques to collect and analyze representative samples.

Comparable data sets must contain the same variables of interest and must possess

values that can be converted to a common unit of measurement. Comparability is

primarily a qualitative parameter that is dependent upon the other data quality elements.

For example, if the RLs for a target analyte were significantly different for two different methods, the two methods may not be comparable and, more importantly, it may be difficult to use those data to draw inferences and/or make comparisons. Use caution when combining data sets, especially if the quality of the data is uncertain.

4.4.5 Completeness

Completeness is a quantitative measure that is used to evaluate how much valid analytical data was obtained in comparison to the amount that was planned.

Completeness is usually expressed as a percentage of usable analytical data.

Completeness goals are specified for the various types of samples that will be collected

during the course of an investigation. Completeness goals are used to estimate the

minimum amount of analytical data required to support the conclusions of the investigator.

If the completeness goal is 100% for samples that will be used to determine compliance

with the applicable regulations, all of the samples must be collected, analyzed and yield

analytical data that are usable for the intended purpose. Critical samples include those

samples that are relied upon to determine the presence, nature, and extent of a release or

determine compliance with applicable regulations. The completeness goal for critical

samples is generally 100%. Overall project completeness goals are generally below 100%

(e.g., QAPP DQO for overall project completeness may be 90%) to account for losses due

to unintended issues with sample collection (e.g., well will not purge properly or possible

breakage of sample in-transit to the laboratory) or to account for quality issues which

affect usability of sample data.
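Completeness reduces to simple ratios. The sketch below is hypothetical: it assumes completeness = usable results / planned results × 100 and checks an illustrative 90% overall goal and a 100% goal for critical samples. The planned counts include samples that were never analyzed (for example, a container broken in transit), so they are passed in separately from the list of results.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Result:
    sample_id: str
    critical: bool  # relied on for nature/extent or compliance decisions
    usable: bool    # usable for the intended purpose after the DUE


def completeness(usable: int, planned: int) -> float:
    """Completeness as a percentage of the planned number of results."""
    if planned <= 0:
        raise ValueError("planned must be positive")
    return 100.0 * usable / planned


def check_completeness(results: List[Result], planned_total: int,
                       planned_critical: int, overall_goal: float = 90.0,
                       critical_goal: float = 100.0) -> Tuple[bool, float, float]:
    """Return (goals met, overall %, critical %)."""
    overall = completeness(sum(r.usable for r in results), planned_total)
    critical = completeness(
        sum(r.usable for r in results if r.critical), planned_critical)
    return overall >= overall_goal and critical >= critical_goal, overall, critical


# Ten samples planned (four critical); one non-critical sample broke in transit.
results = [Result(f"S-{i}", critical=i < 4, usable=True) for i in range(9)]
print(check_completeness(results, planned_total=10, planned_critical=4))
# (True, 90.0, 100.0)
```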

4.4.6 Sensitivity

Sensitivity is related to the RL. In this context, sensitivity refers to the capability of a

method or instrument to detect a given analyte at a given concentration and reliably

quantitate the analyte at that concentration. The investigator should confirm that the instrument or method can detect and accurately quantitate the analyte at concentrations at or below the applicable standard and/or screening level. In general, RLs should be less than the applicable standard and/or screening level. Non-detect results with RLs greater than the applicable standards and/or screening levels cannot be used to demonstrate compliance with those standards and/or screening levels.
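Screening non-detects against standards can be sketched as follows. The function name and example values are hypothetical; the sketch assumes each RL and its standard/screening level are in the same units, and it treats an RL equal to the standard as acceptable, since only RLs greater than the standard are excluded above.

```python
from typing import Iterable, List, Tuple


def screen_nondetects(
    nondetects: Iterable[Tuple[str, float, float]]
) -> Tuple[List[str], List[str]]:
    """Split non-detects by whether the RL permits a compliance decision.

    Each item is (analyte, rl, standard) with rl and standard in the same units.
    """
    usable: List[str] = []
    not_usable: List[str] = []
    for analyte, rl, standard in nondetects:
        # An RL above the standard cannot show the analyte is below it.
        (usable if rl <= standard else not_usable).append(analyte)
    return usable, not_usable


# Hypothetical analytes and values (ug/L).
print(screen_nondetects([("analyte A", 0.5, 1.0), ("analyte B", 2.0, 1.0)]))
# (['analyte A'], ['analyte B'])
```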


The issue of analytical sensitivity may be one of the most difficult to address in data usability evaluations. Dilution of samples that contain high concentrations of contaminants is a leading cause of RLs exceeding applicable criteria. However, there may be instances where such exceedances are insignificant relative to the site-specific DQOs. For example, the project may be ongoing and/or other compounds may be “driving” the cleanup, such that not meeting applicable criteria for all compounds at that particular juncture is not an issue.
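The link between dilution and sensitivity is arithmetic. Assuming the common laboratory convention that the reported RL equals the undiluted RL multiplied by the dilution factor, the hypothetical sketch below shows how a dilution can push an RL above a standard, and the largest dilution factor that keeps the RL at or below it. The values are illustrative only.

```python
def diluted_rl(base_rl: float, dilution_factor: float) -> float:
    """Reported RL after dilution, assuming the RL scales with the dilution factor."""
    if dilution_factor < 1:
        raise ValueError("dilution factor must be >= 1")
    return base_rl * dilution_factor


def max_dilution_meeting(base_rl: float, standard: float) -> float:
    """Largest dilution factor that keeps the RL at or below the standard."""
    if base_rl <= 0:
        raise ValueError("base_rl must be positive")
    return standard / base_rl


# Hypothetical: undiluted RL of 0.5 ug/L, standard of 1 ug/L, 20x dilution.
print(diluted_rl(0.5, 20))             # 10.0, ten times the standard
print(max_dilution_meeting(0.5, 1.0))  # 2.0
```

A result below 1 from `max_dilution_meeting` would mean that even the undiluted RL does not meet the standard.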

4.5 Data Quality Assessment

A DQA is the process of identifying and summarizing QC nonconformances. The DQA

process should occur throughout the course of a project. The DKQP Guidance “Data of

Known Quality Conformance/Nonconformance Summary Questionnaire”, laboratory

narrative, and analytical data package should be reviewed by the investigator soon after they are received so that the laboratory can be contacted regarding any questions and issues can be

resolved in a timely manner. The DQA is to be performed prior to the DUE. The level of effort

necessary to complete this task depends on the type of analytical data described above in

Section 2.3 of this guidance document. The types of QC information that are to be reviewed

as part of the DQA are described in Appendix C of this document. Results from the DQA are

used during the DUE to evaluate whether the analytical data for the samples associated with

the specific QA/QC information are usable for the intended purpose.

Appendix B of this Guidance document includes a table that summarizes the DKQ

parameters and the recommended frequencies for various types of QC information. The

actual QC checks, target acceptance criteria and information required to be reported under

the DKQPs are provided in Appendix B of the DKQ Guidance.

The DQA is usually most efficiently completed by summarizing QC nonconformances on a DQA worksheet or in another format that documents the thought process and findings of the DQA (e.g., NJDEP Full Laboratory Data Deliverable Form available at: http://www.nj.gov/dep/srp/srra/forms/).

Sample DQA worksheets are included in Appendix D of this document. These worksheets

may be modified by the user. Appendix D also presents a summary of selected DKQ

acceptance criteria which may be useful during the completion of DQA worksheets.


4.5.1 Batch Quality Control versus Site Specific Quality Control

Laboratory QC is performed on a group or “batch” of samples. Laboratory QC procedures

require a certain number of samples be spiked and/or analyzed in duplicate. Since a

laboratory batch may include samples from several different sites, the accuracy and

precision assessment for organic samples will not be germane to any site in the batch

except for the site from which the QC samples originated. QC samples from a specific site

are referred to as site specific QC. Since batch QC for organic samples may include

samples from different sites, it may be of limited value when evaluating precision and

accuracy for a site. For inorganic samples, the sample chosen for the QC sample pertains

to all inorganic samples in the batch because the inorganic methods themselves include

little sample-specific quality control. Typically, organic analyses require an MS/MSD pair

for every twenty samples of similar matrix (e.g., soil, water, etc.). Inorganic analyses

usually have a matrix spike and a matrix duplicate (MD) for every twenty samples;

however, an MS/MSD pair for inorganic analyses is acceptable. Information regarding

MS/MSDs is presented in Section 4.6.3.7 of this document. The MS results can be used to evaluate accuracy, while the results of the MS and MSD analyses (or the sample and MD) can be used to assess precision. Similarly, LCSs and LCS/LCSDs are used by laboratories as a substitute for, or in addition to, MS/MSDs; a single LCS is used to evaluate method accuracy, while an LCS/LCSD pair can be used to evaluate both precision and accuracy. Information regarding LCS/LCSD is presented in Section 4.6.3.6 of this document.
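The MS/MSD calculations referred to above can be sketched as follows, assuming the commonly used forms: MS recovery = (spiked result − unspiked sample result) / amount spiked × 100, and RPD = |x1 − x2| / mean(x1, x2) × 100. Some laboratories compute RPD on the two recoveries rather than on the concentrations, so check the definitions in the method and in this document before relying on either. The example values are hypothetical.

```python
def ms_recovery(spiked_result: float, sample_result: float,
                spike_added: float) -> float:
    """Matrix spike percent recovery, correcting for analyte already in the sample."""
    if spike_added <= 0:
        raise ValueError("spike_added must be positive")
    return 100.0 * (spiked_result - sample_result) / spike_added


def rpd(x1: float, x2: float) -> float:
    """Relative percent difference between duplicate measurements."""
    mean = (x1 + x2) / 2.0
    if mean == 0:
        return 0.0  # two zero results agree exactly
    return 100.0 * abs(x1 - x2) / mean


# Hypothetical: native sample 10 mg/kg, 50 mg/kg spiked, MS 55 and MSD 50 mg/kg.
print(ms_recovery(55.0, 10.0, 50.0))  # 90.0
print(ms_recovery(50.0, 10.0, 50.0))  # 80.0
print(round(rpd(55.0, 50.0), 1))      # 9.5
```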

There may be instances where the investigator incorporates site or project specific QC

samples as part of the DQO. Examples of where this may be appropriate are:

• Complex or unique matrix;

• Contract specific requirements;

• High profile cases;

• Sites containing contaminants such as dioxins or hexavalent chromium.

If project-specific QC samples are required to meet the DQO, then the investigator should supply sufficient sample volume for the analyses.


4.5.2 Evaluating Significant Quality Control Variances

Some QC nonconformances are so significant that they must be thoroughly evaluated.

Some examples are the absence of QC analyses, gross exceedance of holding time, and

extremely low recoveries of spikes and/or surrogates. Appendix E of this document

presents a summary of significant QC variances or gross QC failures.

If the DQA is performed when the laboratory deliverable is received, it may be possible for the investigator to request that the laboratory reanalyze the sample or sample extract within the holding time. During the DUE, data with gross QC failures will in most cases be deemed unusable, unless the investigator provides adequate justification for their use. However, data for samples with significant QC variances could be used if the results are significantly above remedial standards/screening levels.

4.5.3 Poorly Performing Compounds

Not all compounds of interest perform equally well for a given analytical method or

instrument. Typically, this is due to the chemical properties of these compounds and/or

the limitations of the methods and instrumentation, as opposed to laboratory error. These

compounds are commonly referred to as "poor performers," and the majority of QC

nonconformances are usually attributed to these compounds. Appendix F of this

document presents a summary of compounds that are typically poorly performing

compounds. Each method specific DKQ acceptance criteria table (QAPP Worksheet)

notes the method-specific poor performers. A laboratory’s list of poorly performing

compounds should not be substantially different from this list. The investigator should,

through the QAPP, have the laboratory confirm which compounds are poor performers for

the methods used prior to the analysis of samples. This information should be used during

the DUE. The investigator may decide to reject the entire data set if “too many” compounds fail to meet acceptance criteria, as this may indicate general and significant instrumental difficulties. For example, the investigator may decide that if QC results for more than 10% of the compounds fail to meet acceptance criteria for DKQ Method 8260, or more than 20% fail to meet criteria for DKQ Method 8270, the data may not be usable to demonstrate that concentrations are less than applicable standards without additional lines of evidence to support such a decision.
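The example thresholds above (more than 10% of compounds failing for DKQ Method 8260, more than 20% for Method 8270) can be expressed as a simple check. This is a hypothetical sketch: the thresholds are the illustrative ones from the text, not fixed criteria, and any thresholds actually used should be documented by the investigator.

```python
from typing import Dict

# Illustrative thresholds taken from the example in the text.
FAILURE_THRESHOLDS = {"8260": 0.10, "8270": 0.20}


def fraction_failing(qc_passed: Dict[str, bool]) -> float:
    """Fraction of compounds whose QC results failed acceptance criteria."""
    if not qc_passed:
        raise ValueError("no compounds supplied")
    return sum(not ok for ok in qc_passed.values()) / len(qc_passed)


def needs_more_evidence(method: str, qc_passed: Dict[str, bool]) -> bool:
    """True if failures exceed the threshold, so additional lines of evidence
    would be needed before using the data to show compliance."""
    return fraction_failing(qc_passed) > FAILURE_THRESHOLDS[method]


# Hypothetical batch: 7 of 60 compounds fail (about 11.7%).
batch = {f"compound {i}": i >= 7 for i in range(60)}
print(needs_more_evidence("8260", batch), needs_more_evidence("8270", batch))
# True False
```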


4.5.4 Common Laboratory Contaminants

During the course of the analysis of samples, substances at the laboratory may

contaminate the samples. The contamination in the sample may come from contaminated

reagents, gases, and/or glassware; ambient contamination; poor laboratory technique; et

cetera. A list of common laboratory contaminants can be found in Appendix G of this

document. However, not all sample contamination can be attributed to the compounds on

the laboratory contaminant list. During the DUE, the investigator must take the CSM and

site-specific information into account to support a hypothesis that the detection of common

laboratory contaminants in environmental samples is actually due to laboratory

contamination and not due to releases at the site or due to sampling efforts.

4.5.5 Bias

When QC data for analytical results indicate that low or high bias is present, this means that the true values of the target analytes are likely higher or lower than the reported concentrations, respectively. Bias can also be indeterminate, which means that the analytical results have poor analytical precision or that the data show conflicting bias. Because bias can ultimately affect the concentration reported, all bias has the potential to affect accuracy. Bias is evaluated by the investigator as part of the DUE.

Bias can be caused by many factors, including improper sample collection and

preservation, exceedances of the holding times, the nature of sample matrix, and method

performance. The sample matrix can cause matrix interferences. Typically, matrices such

as peat, coal, coal ash, clay, and silt can exhibit significant matrix interferences by binding

contaminants or reacting with analytes of concern. The investigator should contact the

laboratory to determine the appropriate laboratory methods to address these difficult

matrices. The evaluation of bias is further discussed in Section 4.6.1 of this document.

4.6 Data Usability Evaluation

The DUE is an evaluation by the investigator to determine if the analytical data are of

sufficient quality for the intended purpose and can be relied upon by the investigator with the

appropriate degree of confidence to support the conclusions that will be made using the data.


The investigator uses the results of the DQA to evaluate the usability of the analytical data

during the DUE in the context of project-specific DQOs and the CSM.

One of the primary purposes of the DUE is to determine if any bias that might be present in

the analytical results, as identified during the DQA, affects the usability of the data for the

intended purpose. The DUE can use multiple lines of evidence from different types of

laboratory QC information or from site-specific conditions described in the CSM to evaluate

the usability of the analytical data.

The initial DUE should evaluate precision, accuracy, and sensitivity of the analytical data

compared to DQOs. Representativeness, completeness, and comparability should be

evaluated as part of a DUE and should be considered when incorporating analytical data into

the CSM.

More scrutiny regarding the quality of analytical data may be necessary when the

investigator intends to use the data to demonstrate compliance with an applicable

standard/screening level than when the data are used to design additional data collection

activities or when remediation will be conducted. Data that may not be deemed to be of

sufficient quality to demonstrate compliance with applicable standard/screening level may be

useful for determining that a discharge has occurred in cases when remediation will be

conducted or to guide further data collection activities.

Typically, the most challenging DUE decisions are for situations when the analytical results

are close to, or at, the applicable standard/screening level and there are QC

nonconformances that might affect the usability of the data. In situations such as this, the

NJDEP expects that the investigator will use an approach that is protective of human health

and the environment. Coordination with the laboratory to understand QC information,

additional investigation, and re-analysis of samples may be necessary in some cases. If the

DQA is performed when the laboratory deliverable is received and issues are raised, it may

be possible to perform re-analysis of the sample extract within the holding time and still use

the sample data.

To help expedite the DUE, it may be useful to determine whether the QC nonconformances identified in the DQA are significant for a particular project. The types of questions listed below are not all-inclusive. They are intended to give the investigator examples to help evaluate QC nonconformances for a particular project. See the DUE Worksheet provided in Appendix I of this document for additional examples.

• Will remediation be conducted at the area of concern? If remediation will be conducted,

the investigator should use the QC information supplied by the laboratory (or request

additional assistance from the laboratory when necessary) to minimize QC issues for

the samples to be collected to evaluate the effectiveness of remediation. Alternatively,

if remediation will not be conducted, the analytical data should be of sufficient quality

to demonstrate compliance with an appropriate and applicable standard/screening

level.

• Were significant QC variances reported? Analytical data with gross QC failures are

usually deemed unusable (rejected) unless the investigator provides adequate

justification for its use. Significant QC variances are discussed in Appendix E of this

document.

• Were QC nonconformances noted for substances that are not constituents of concern

at the site as supported by the CSM? QC nonconformance assessments for

contaminants that are not of concern may not be critical to meeting project DQOs.

However, limiting the list of contaminants of concern without appropriate investigation

and analytical testing (i.e., incomplete CSM) can inadvertently overlook substances

that should be identified as contaminants of concern.

• Were QC nonconformances reported for poorly performing compounds? If the nonconformances are noted for poorly performing compounds that are not contaminants of concern for the site, then they have little or no impact on data usability. However, if the nonconformances are noted for poorly performing compounds that are contaminants of concern for the site, then the investigator may have to address these issues through measures including, but not limited to, re-sampling and/or re-analysis. Poorly performing compounds are discussed in Section 4.5.3 and Appendix F of this document.

The DUE process is discussed in detail using examples in the sections that follow. The examples presented below are for illustrative purposes only and are not meant to be a strict or comprehensive evaluation of all types of laboratory QC information or all the possible outcomes of data quality evaluations. The discussion begins with examples of less complex QC information and concludes with the use of multiple lines of evidence to evaluate more complicated DUE issues using more than one type of laboratory QC information and information from the CSM for a hypothetical site. The standards/screening levels identified in the examples are for illustrative purposes and may not be consistent with actual levels.

Appendix H of this document illustrates many common QC issues and a range of potential DUE outcomes for each issue. The DUE is usually most efficiently completed by using a worksheet or another format that documents the thought process and findings of the DUE. Appendix I of this document presents a DUE Worksheet that can be used and modified as needed to summarize the types of issues that should be discussed in the investigator's written opinion regarding data usability.

4.6.1 Evaluation of Bias

The types of bias are discussed in Section 4.5.5 of this document. Bias can be low, high

or indeterminate.

High or low bias can be caused by many factors. Investigators are cautioned that it is never acceptable to adjust laboratory-reported compound concentrations or RLs based on percent recovery.

Indeterminate or non-directional bias means that the analytical results exhibit a poor

degree of precision (e.g., as demonstrated by high RPD in sample/MD measurements) or

there are cumulative conflicting biases in the data set (e.g., surrogate recoveries for a

sample are low but LCS recoveries are high). Duplicate sample results are used to

evaluate the degree of precision between the measurements. Indeterminate bias may

occur when heterogeneous matrices, such as contaminated soil or soil containing wastes

such as slag, are sampled. The heterogeneity of the matrix causes the analytical results to

vary and may cause a large RPD between the sample results. The degree to which the

analytical results represent the environmental conditions is related to the number of

samples taken to characterize the heterogeneous matrix and how those samples are

selected and collected. In general, as more samples are analyzed, the analytical results tend to better represent the concentrations of the analytes present in the environment.


Bias for a particular result should not be evaluated until all sources of possible bias in a

sample analysis have been evaluated. Evaluating the impact of bias on one’s data set is

not always straightforward. For example, judging bias only on surrogate recovery and

ignoring LCS recovery results may lead to erroneous conclusions. Therefore, overall bias

for a result must be judged by the cumulative effects of the QC results.
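One way to record this cumulative judgment is to combine the direction indicated by each QC element. The hypothetical sketch below treats conflicting directions (for example, low surrogate recovery with high LCS recovery) or poor precision as indeterminate, consistent with the definition above; it is a bookkeeping aid, not a substitute for the investigator's evaluation of every source of bias.

```python
from typing import Iterable, Optional

VALID = {"L", "H", "I"}


def overall_bias(indicators: Iterable[Optional[str]],
                 precision_ok: bool = True) -> str:
    """Combine per-QC-element bias flags into an overall bias for one result.

    Each indicator is "L", "H", "I", or None (QC element within limits).
    """
    found = {d for d in indicators if d is not None}
    unknown = found - VALID
    if unknown:
        raise ValueError(f"unrecognized bias flags: {sorted(unknown)}")
    # Poor precision or conflicting directions make the bias indeterminate.
    if not precision_ok or "I" in found or {"L", "H"} <= found:
        return "I"
    if found == {"L"}:
        return "L"
    if found == {"H"}:
        return "H"
    return "within limits"


# Surrogate recovery low, LCS recovery high: the conflicting case described above.
print(overall_bias(["L", "H"]))  # I
```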

21

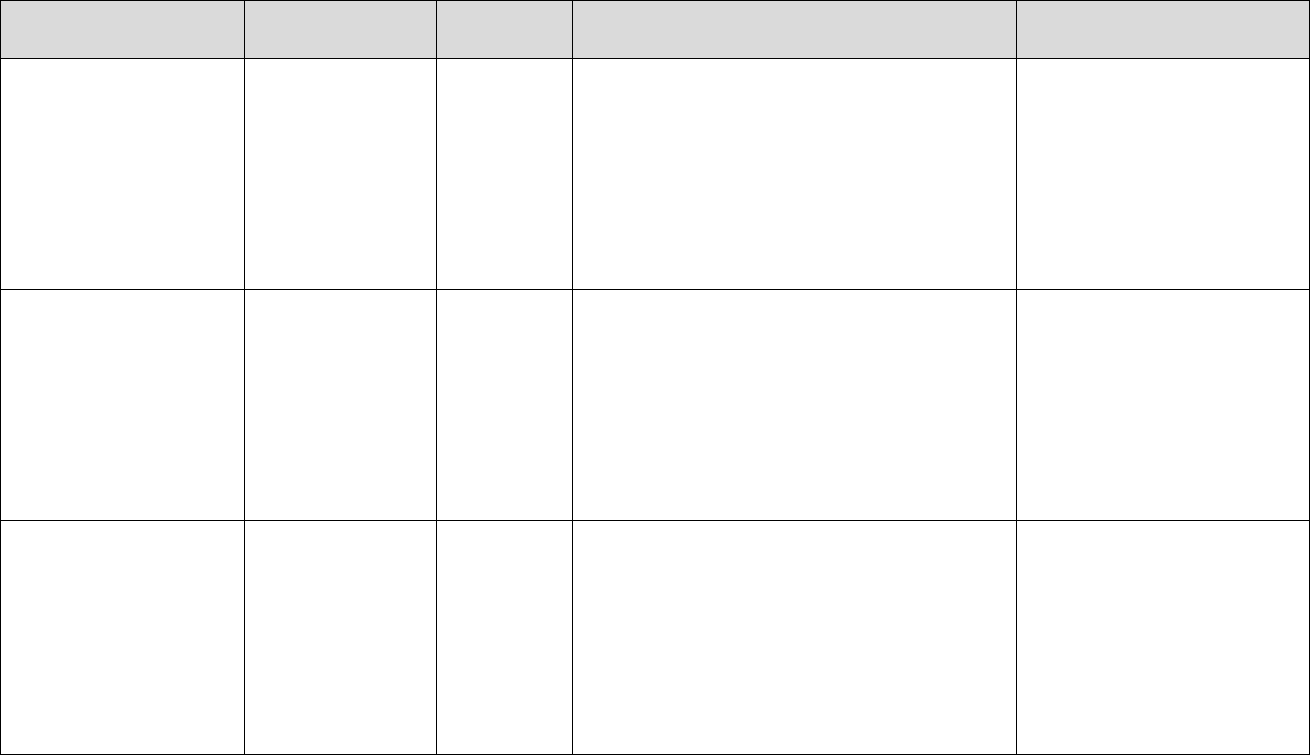

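Examples of the actions suggested, based on the type of bias observed (L = low; I = indeterminate; H = high; within limits = QC results within limits), for non-detect (ND) data are shown below. For the purposes of the table, bias refers to agreement with method-defined QA/QC limits. "Reg Lev" is the applicable standard/screening level, the ND rows compare the RL of the non-detect result with that level, and "None" means that no further action is indicated.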

Table 1: Summary Actions Due to Bias

| Concentration | Bias: L | Bias: I | Bias: H | Bias: within limits |
|---|---|---|---|---|
| ND < Reg Lev | Further | Further | None | None |
| ND = Reg Lev | Further | Further | None | None |
| ND > Reg Lev | Not usable to determine clean areas | Not usable to determine clean areas | Not usable to determine clean areas | Not usable to determine clean areas |

Further = Look at site; evaluate complete data set; reanalyze; speak to lab; resample if necessary.

Ultimately, it is the investigator's responsibility to use professional judgment when determining the use of any data.

• If the detected concentrations of analytes are below the applicable

standard/screening level, the bias may have limited impact on the usability of the

data. If the concentration is just below the regulatory limit, evaluation of bias can be

critical, especially when data are being used to demonstrate compliance (i.e.,

issuance of a RAO).

• If the detected concentrations of analytes are above the applicable standard/screening

level, the bias may have limited impact on the usability of the data unless these data

are being used to demonstrate compliance (i.e., issuance of a RAO).
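The non-detect actions in Table 1 can be encoded as a small lookup. This is a hypothetical sketch that assumes the ND row is chosen by comparing the RL of the non-detect with the regulatory level (the applicable standard/screening level) in the same units; the returned text is only a starting point for the professional judgment described above.

```python
def nd_action(rl: float, reg_level: float, bias: str) -> str:
    """Suggested action for a non-detect result, following Table 1.

    bias is "L", "I", "H", or "within limits".
    """
    if bias not in {"L", "I", "H", "within limits"}:
        raise ValueError(f"unrecognized bias: {bias!r}")
    if rl > reg_level:
        # ND > Reg Lev row: the same outcome for every type of bias.
        return "Not usable to determine clean areas"
    if bias in {"L", "I"}:
        # The ND < Reg Lev and ND = Reg Lev rows share the same actions.
        return ("Further: look at site; evaluate complete data set; reanalyze; "
                "speak to lab; resample if necessary")
    return "None"


print(nd_action(0.5, 1.0, "H"))  # None
```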


4.6.2 General Quality Control Information

The following subsections discuss issues associated with QC information related to

sample management, preservation, holding times, and field QC samples.

4.6.2.1 Chain of Custody Forms

Chain of Custody (COC) forms are used to document the history of sample possession

from the time the sample containers leave their point of origin (usually the laboratory

performing the analyses) to the time the samples are received by the laboratory. COCs

are considered legal documents. Sometimes a COC form contains incorrect information, such as incorrect dates, sample identification numbers, or analyses requested.

Usually these errors are found through the course of the project. However, simply

correcting this information without documentation of the problem and the resolution

may amount to falsification of the chain of custody or cause confusion. The error may

be corrected by the investigator with a single-line cross-out of the error,

initialing/signing, dating of the correction, and an explanation for the correction. If the

laboratory notices an error on the COC, this should be noted in their sample receiving

documentation and in the laboratory narrative and the laboratory should contact the

investigator. Any changes to the COC should be approved by the investigator and

documented by the laboratory.

4.6.2.2 Sample Preservation, Holding Times and Handling Time

Once a sample is collected, changes in the concentrations of analytes in the sample can occur. To minimize these changes, the sample must be collected, stored, and preserved as specified in the analytical method and, for non-aqueous samples collected for analysis of volatile organic compounds, as specified in N.J.A.C. 7:26E-2.1(a)8. The sample must also be analyzed within the specified holding and handling times. The holding time for a sample has two components. The first component is the time from when a sample is collected to when it is prepared for analysis or, if no preparation step is required, the time from when the sample is collected to when it is analyzed. (For environmental samples, handling time is included in this first component.) If a test requires a preparation step, such as solvent extraction for determination of polychlorinated biphenyls (PCBs) or acid digestion for determination of metals, there is a second holding-time component, referred to as the extract holding time. This is the time between when the sample is prepared and when the resultant extract or digestate is analyzed. Failure to analyze a sample within the prescribed holding time could render the data unusable. The laboratory should be made aware (usually in the QAPP) that if holding times are not going to be met, the laboratory should contact the investigator to check whether the samples should still be analyzed. The usability of laboratory data from a sample with a failed holding time must be evaluated. The investigator's determination whether to use data with failed holding times is based on the critical nature of the sample, the type of sample, and the analytical results. The conventional conclusion for organic samples that exceeded holding times is that there has been a loss of analyte and the concentration may be biased low.

It should be noted that certain constituents are not necessarily adversely affected by holding time exceedances, provided the samples are preserved and stored properly. If the contaminants of concern are PCBs, PCDDs/PCDFs, and/or metals, a holding time exceedance may not adversely affect usability. In these situations, the data should be qualified and discussed by the investigator. However, attempts should be made to meet the method-required holding times.
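The two holding-time components described above can be checked with date arithmetic. The sketch below is hypothetical: the function name is illustrative, and the 14-day holding time in the example is a placeholder (actual holding times depend on the method, matrix and preservation). It reports how far each component was exceeded, the quantity discussed in the examples that follow.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional

ZERO = timedelta(0)


def holding_time_exceedance(collected: datetime, analyzed: datetime,
                            sample_ht: timedelta,
                            prepared: Optional[datetime] = None,
                            extract_ht: Optional[timedelta] = None
                            ) -> Dict[str, timedelta]:
    """Exceedance of each holding-time component (zero when it was met).

    Without a preparation step, the first component runs from collection to
    analysis; with one, it runs to preparation, and the extract holding time
    runs from preparation to analysis.
    """
    if prepared is None:
        return {"sample": max(analyzed - collected - sample_ht, ZERO),
                "extract": ZERO}
    if extract_ht is None:
        raise ValueError("extract_ht is required when a preparation step is used")
    return {"sample": max(prepared - collected - sample_ht, ZERO),
            "extract": max(analyzed - prepared - extract_ht, ZERO)}


# Placeholder 14-day holding time; analysis 18 days after collection.
result = holding_time_exceedance(datetime(2014, 3, 1, 9, 0),
                                 datetime(2014, 3, 19, 9, 0),
                                 sample_ht=timedelta(days=14))
print(result["sample"].days)  # 4
```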

Example 1: Meeting standards – exceeded holding times

Benzene and 1,2-dichloroethane were found in a water sample at concentrations of 0.9 ug/L and 1 ug/L, respectively. This sample was to be the last round of sampling prior to the intended issuance of an RAO. Applicable ground water quality criteria for benzene and 1,2-dichloroethane are 1 and 2 ug/L, respectively. However, the data were obtained from samples that exceeded the holding time to analysis by 4 days. Because the overriding consensus with a holding time exceedance is that data are biased low, and because the concentrations are close to the applicable criteria, the investigator should probably not use these data.


Example 2 – Holding time exceedance – ground water monitoring

Trichloroethene, tetrachloroethene and 1,1,1-trichloroethane are present in a water

sample at concentrations of 80 ug/L, 140 ug/L and 125 ug/L, respectively. Compound-

specific ground water criteria apply in this situation for the trichloroethene,

tetrachloroethene and 1,1,1-trichloroethane at concentrations of 1 ug/L, 1 ug/L and 30

ug/L, respectively. The sample is part of a routine, quarterly monitoring program of a

contaminated ground water aquifer and the sample results are similar to those

determined from previous rounds of sampling and analyses. It is expected that

quarterly monitoring will continue for a minimum of three additional years. However, the

data were from a sample that exceeded the holding time to analysis by 3 days. Based

on this information, the data would most likely be used because there will be additional

rounds of sampling prior to terminating the remedial activities, data are consistent with

previous results and the concentrations reported were significantly above the

applicable standards such that the effect of a holding time exceedance on the accuracy

of the numbers reported would probably be negligible.

Sample preservation can be either physical or chemical. Physical preservation might

be cooling, freezing, or storage in a hermetically sealed container. Chemical

preservation refers to addition of a chemical, usually a solvent, acid, or base to prevent

loss of any analyte in the sample. An example of physical preservation is the freezing of soil

samples for determination of volatile organic compounds (VOCs). This procedure and

other procedures for preserving soil samples for the determination of VOCs can be

found in the NJDEP Field Sampling Procedures Manual.

NJDEP expects that all non-aqueous samples collected for the purpose of laboratory

analysis for VOCs be collected and preserved in accordance with the procedures

described in N.J.A.C. 7:26E-2.1(a)8 and all appropriate analytical methods and

technical guidance. If proper preservation of soils sampled for volatiles is not

performed, VOCs may be biased low and may be unusable. Based on this evaluation,

additional investigation and/or remediation may be warranted. Improperly preserved

samples should not be used to determine compliance with regulatory standards and/or

criteria.


4.6.2.3 Equipment, Trip and Field Blanks

Equipment-rinsate, trip, and field blank samples can be used to evaluate contamination in a sample resulting from improperly decontaminated field equipment or introduced during transportation or collection of the sample. Trip and field blanks (including laboratory analyte-free water, which may be used to produce an equipment-rinsate blank) must be transported to the site with the sample containers and must be received at the site within one day of preparation in the laboratory. Blanks may be held on-site for no more than two calendar days and must arrive back at the laboratory within one day of shipment from the field. If these handling times are not met, the field blanks may not represent site conditions. Handling times are established more for logistical than for scientific reasons. However, sample containers kept on-site or in construction trailers on site may have a greater chance of picking up contamination the longer they are stored. These limits may present a challenge to the investigator in scheduling sample collection activities, especially following weekends and holidays.
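The blank handling-time limits above can be checked from four dates. The function below is a hypothetical sketch that interprets each limit as a difference in calendar dates (one day to reach the site, at most two calendar days on-site, one day back to the laboratory); the interpretation should be confirmed against the applicable requirements.

```python
from datetime import date
from typing import List


def blank_handling_issues(prepared: date, received_on_site: date,
                          shipped_from_site: date,
                          received_at_lab: date) -> List[str]:
    """List the handling-time limits that a trip or field blank failed to meet."""
    issues = []
    if (received_on_site - prepared).days > 1:
        issues.append("received on-site more than one day after preparation")
    if (shipped_from_site - received_on_site).days > 2:
        issues.append("held on-site more than two calendar days")
    if (received_at_lab - shipped_from_site).days > 1:
        issues.append("returned to the lab more than one day after shipment")
    return issues


# Blanks prepared on a Friday but not received on-site until the Monday.
print(blank_handling_issues(date(2014, 3, 7), date(2014, 3, 10),
                            date(2014, 3, 11), date(2014, 3, 12)))
# ['received on-site more than one day after preparation']
```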

The investigator should be aware that laboratories have a limited amount of time to prepare/extract and analyze samples, and some samples may require additional effort, such as reanalysis. As such, the sooner samples get to the laboratory, the better it is for all parties concerned.

Low concentrations of contaminants may be detected in samples as a result of non-site-related contamination. Organic compounds typically found include, but are not limited to, methyl ethyl ketone (MEK), acetone, and methylene chloride, which are commonly used as laboratory solvents. Bis(2-ethylhexyl) phthalate is also a common laboratory contaminant; however, it can also be introduced during field sample collection activities, such as through the use of plastics. Additional scrutiny is warranted if these compounds are contaminants of concern at the site.

The presence of any analytes in any blanks is noted in the DQA review of the data. The concentrations of the analytes in the blanks are compared to any detected analyte concentrations in the associated samples, taking into account any dilution factors. Analytes that are detected in the blanks but ND in the sample can be ignored. Analytes detected in the laboratory method blank (not the field and/or trip blank) and detected in any associated sample should be flagged by the laboratory with a "B" suffix to draw the data user's attention.

SRP has been using a 3 times to 10 times policy to evaluate the potential presence of

compounds in an environmental sample when the same compounds are also found in

a blank sample. The specific policy is as follows.

If the concentration of a given compound in a sample is less than or equal to three (3) times the concentration of that compound in the associated equipment, trip or field blank, then it is unlikely that the compound is present in the sample. If the concentration is greater than 3 times but less than or equal to 10 times the concentration in the corresponding blank, the presence of the compound in the site sample is considered real, even though the compound is also present in the blank. If the concentration is greater than 10 times the concentration in the corresponding blank, the impact of the blank on the sample result is considered negligible.

All compounds that are present in a sample at a concentration of less than or equal to

10 times the concentration in the corresponding blank should be qualified

(conventionally, a “B” qualifier is added next to the concentration of the affected

compound) to indicate possible blank contamination.¹
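The 3 times to 10 times policy can be expressed as a simple screening function. The sketch below is illustrative only and is not an NJDEP tool: the function name `screen_against_blank` is hypothetical, it assumes the sample and blank results are in the same units with the sample result already adjusted for any dilution, and it treats `None` as ND. It returns an interpretation and whether the "B" qualifier applies.

```python
from typing import Optional, Tuple


def screen_against_blank(sample_conc: Optional[float],
                         blank_conc: Optional[float]) -> Tuple[str, str]:
    """Apply the 3x/10x blank policy to one analyte (hypothetical helper).

    sample_conc, blank_conc: same units; None means not detected (ND).
    Returns (interpretation, qualifier), where qualifier is "B" or "".
    """
    if sample_conc is None:
        # Detected in the blank but ND in the sample: the blank can be ignored.
        return ("ND in sample; blank detection can be ignored", "")
    if blank_conc is None or blank_conc <= 0:
        # Nothing in the blank, so the 3x/10x screen does not apply.
        return ("not detected in blank; screen not applicable", "")
    ratio = sample_conc / blank_conc
    if ratio <= 3:
        # <= 3x: compound unlikely to be present; still qualified B (<= 10x).
        return ("unlikely to be present in the sample", "B")
    if ratio <= 10:
        # > 3x and <= 10x: considered real, but qualified B.
        return ("considered real; possible quantitative uncertainty", "B")
    # > 10x: blank impact considered negligible; no blank qualifier.
    return ("considered real; blank impact negligible", "")
```

Applied to the benzene results in Examples 3 through 5, the sample-to-blank ratios are 2, 8, and 40, giving "unlikely to be present" (B), "considered real" (B), and "blank impact negligible" (no qualifier), respectively.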

Example 3: Application of 3x Rule

Benzene was found in a ground water sample collected at the site at a concentration of 2 μg/L. Benzene is also present at a concentration of 1.0 μg/L in the associated equipment blank. The concentration in the sample is less than 3 times the concentration in the blank. Therefore, the result may not be real; however, the result should be qualified B and discussed by the investigator.

¹ Strict validation protocols may have more robust procedures for blank qualification (e.g., in addition to the “B” qualifier, concentrations between 3 and 10 times the associated blank should also be reported with the “J” qualifier). However, for the purpose of the DUE, addition of the “B” qualifier (or other user-defined qualifier) will suffice to denote corresponding blank contamination.

Example 4: Application of 10x Rule

Benzene was found in a ground water sample collected at the site at a concentration of 4 μg/L. Benzene is also present at a concentration of 0.5 μg/L in the associated equipment blank. The concentration in the sample is greater than 3 times but less than 10 times the concentration in the blank. Therefore, the result is considered real but should be qualified B, which indicates possible quantitative uncertainty. Additional site investigation, including an evaluation of the sampling protocol, may be warranted.

Example 5: Application of 10x Rule

Benzene was found in a ground water sample collected at the site at a concentration of 20 μg/L. Benzene is also present at a concentration of 0.5 μg/L in the associated equipment blank. The concentration in the sample is greater than 10 times the concentration in the blank. Therefore, the sample result is considered real and may be used.

The investigator should review all blank-related results in relation to the CSM for the site, including results for other samples in the vicinity, to determine whether this evaluation is reasonable before concluding that a compound is or is not site-related. This policy cannot be used to eliminate detections of analytes that can be attributed to a release or a potential release. Special attention should be paid to potentially blank-related concentrations that are at or near regulatory/screening levels.

4.6.2.4 Field Duplicates

Field duplicates are replicate or split samples collected in the field and submitted to the laboratory as two separate samples. Field duplicates measure both field and laboratory precision. Blind duplicates are field duplicate samples submitted to the laboratory without being identified as duplicates. Duplicate samples are used to evaluate the sampling technique and the homogeneity/heterogeneity of the sample matrix. Field duplicate precision is expressed as the RPD between the sample and duplicate results. As a conservative approach, the higher of the two field duplicate results is compared to the applicable regulatory criteria.

In general, solid matrices are more heterogeneous than liquid matrices. When the RPD for detected constituents (concentrations greater than the RL) is greater than or equal to 50 percent for nonaqueous matrices or greater than or equal to 30 percent for aqueous matrices, the investigator is advised to consider the representativeness of the sample results in relation to the CSM. If field duplicates are not collected and analyzed for the site, then field duplicate precision cannot be evaluated as part of the DUE.
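The RPD screen described above can be sketched as follows. This is a minimal illustration, not a prescribed procedure: the names `rpd`, `evaluate_field_duplicate`, and `RPD_GUIDELINES` are hypothetical, `None` stands for ND, and the choice to skip the RPD whenever either result is ND or at/below the RL is an assumption based on the "detected constituents (concentrations greater than the RL)" wording. The sketch also reports the higher of the two results as the conservative value for comparison with regulatory criteria.

```python
from typing import Optional

# Guideline RPD values (percent) from this section; an RPD at or above the
# value for the matrix prompts a review of representativeness against the CSM.
RPD_GUIDELINES = {"aqueous": 30.0, "nonaqueous": 50.0}


def rpd(a: float, b: float) -> float:
    """Relative percent difference: |a - b| divided by the mean, times 100."""
    mean = (a + b) / 2.0
    if mean == 0:
        raise ValueError("RPD is undefined when the mean of the results is zero")
    return abs(a - b) / mean * 100.0


def evaluate_field_duplicate(result: Optional[float],
                             duplicate: Optional[float],
                             rl: float,
                             matrix: str) -> dict:
    """Screen one analyte's field duplicate pair (hypothetical helper).

    None means ND. Concentrations and the RL must share units.
    """
    detected = [x for x in (result, duplicate) if x is not None]
    # Conservative value to compare with regulatory criteria: the higher result.
    compare_value = max(detected) if detected else None
    # Assumption: RPD is calculated only when both results exceed the RL.
    if result is None or duplicate is None or result <= rl or duplicate <= rl:
        return {"rpd": None, "review_representativeness": False,
                "compare_value": compare_value}
    value = rpd(result, duplicate)
    return {"rpd": value,
            "review_representativeness": value >= RPD_GUIDELINES[matrix],
            "compare_value": compare_value}
```

For instance, `evaluate_field_duplicate(500.0, 1050.0, 1.0, "nonaqueous")` yields an RPD of about 71 percent, flags the pair for a representativeness review, and carries 1,050 forward as the comparison value.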

Field duplicate results should be evaluated along with any available laboratory duplicate results to help identify whether a lack of precision is related to the sample matrix, the collection technique, or the laboratory analysis of the sample. (Laboratory duplicates are obtained from one environmental sample in one sample container that is extracted and analyzed twice. Refer to Section 4.3.4 of this guidance document for additional information.) If the laboratory duplicates are acceptable but the field duplicates are not, the likely source of this lack of reproducibility is heterogeneity of the matrix or the sampling or compositing technique. If the laboratory duplicates are not acceptable, laboratory method performance may be the source of the lack of reproducibility. The RL for the analyte in question must be considered in this evaluation because analytical precision typically decreases as results approach the RL.
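The comparison of field and laboratory duplicates in the preceding paragraph amounts to a small decision table. The sketch below encodes it under stated assumptions: the function name `likely_precision_source` is hypothetical, "acceptable" means the duplicate met whatever precision criterion the investigator applies, and `None` means no laboratory duplicate was available. It points to a likely source for follow-up; it does not replace professional judgment.

```python
from typing import Optional


def likely_precision_source(field_dup_acceptable: bool,
                            lab_dup_acceptable: Optional[bool],
                            near_rl: bool = False) -> str:
    """Suggest a likely source of poor duplicate precision (hypothetical helper)."""
    if lab_dup_acceptable is False:
        # Laboratory duplicates not acceptable: suspect method performance.
        source = "laboratory method performance"
    elif field_dup_acceptable:
        source = "no precision problem indicated"
    elif lab_dup_acceptable is None:
        # Without a laboratory duplicate, field and laboratory causes
        # cannot be separated.
        source = "undetermined (no laboratory duplicate available)"
    else:
        # Laboratory duplicates acceptable, field duplicates not.
        source = "matrix heterogeneity or sampling/compositing technique"
    if near_rl:
        # Precision typically degrades as results approach the RL.
        source += "; results near the RL, where precision typically decreases"
    return source
```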

Precision can also be evaluated by comparing a sample result to a sample duplicate result (no spiking is performed), although the representativeness of the samples could be a factor when evaluating the results of duplicate analyses. Furthermore, if the results for a specific analyte are ND in both samples, precision cannot be evaluated through calculation of the RPD.

Example 6: Duplicate Sample Results – Heterogeneity

The lead results for a pair of duplicate soil samples were 500 mg/kg and 1,050 mg/kg. The RL was 1 mg/kg. The RPD for these samples is approximately 71 percent, which is greater than the guideline of 50 percent. This lack of precision indicates that the samples are heterogeneous and may not be representative of lead concentrations at that location. The investigator is advised to consider the representativeness of the sample results in relation to the CSM. Additional investigation and analysis are needed to evaluate the actual concentrations and distribution of lead at the site.
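For reference, the approximately 71 percent RPD in Example 6 follows from the usual RPD definition, where C_1 and C_2 (symbols introduced here for illustration) are the two duplicate results in mg/kg:

```latex
\mathrm{RPD} = \frac{\lvert C_1 - C_2 \rvert}{(C_1 + C_2)/2} \times 100
             = \frac{\lvert 500 - 1050 \rvert}{(500 + 1050)/2} \times 100
             = \frac{550}{775} \times 100 \approx 71\%
```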


4.6.3 Laboratory Quality Control Information

The DKQPs and commonly used analytical methods for environmental samples have been verified to produce reliable data for most matrices encountered. Whether the results reliably represent environmental conditions depends on many factors, including:

• The sample must be representative of field conditions;

• The sample must be properly preserved and analyzed within handling and holding

times;

• The preparation steps used to isolate the analytes from the sample matrix must be

such that no significant amounts of the analytes are lost;

• The analytical system should not have contamination above the RL;

• The analytical system must be calibrated and the calibration verified prior to sample

analysis; and

• No significant sample matrix interferences are present which would affect the

analysis.

With the exception of the first bullet (sample representativeness), the laboratory can provide the data user with laboratory QC information that provides insight into these key indicators. The determination that a sample is representative of field conditions is based on reviewing the CSM, the sampling plan, the field team’s SOPs and field logs, and the results for other samples, including field and laboratory duplicates.

The primary laboratory QC information that the investigator considers during the DQA includes the DKQP “Data of Known Quality Conformance/Nonconformance Summary Questionnaire”, the chain of custody form, sample preservation, handling and holding times, RLs, laboratory and field duplicates, surrogates, MSs and MSDs (when requested by the investigator), method blanks, and laboratory control samples. The DKQPs also require the reporting of other, non-standard types of QC information (e.g., regulator pressure from a canister), which are described in Appendix B of the DKQP Guidance.

4.6.3.1 Data of Known Quality Conformance/Nonconformance Summary Questionnaire

The DKQP “Data of Known Quality Conformance/Nonconformance Summary Questionnaire” is used by the laboratory to certify whether the data meet the requirements for “Data of Known Quality.” The questionnaire is presented in Appendix A of the DKQP Guidance and can be found on the NJDEP website at http://www.nj.gov/dep/srp/guidance/index.html#analytic_methods. All of the questions on the questionnaire should be answered, the questionnaire should be signed, and a narrative of nonconformances should be included with the analytical data package. If any question is not answered, the questionnaire is not signed, or a narrative of nonconformances is not included with the data package, the investigator should contact the laboratory to obtain a properly completed questionnaire and/or the missing narrative. If the laboratory cannot supply the requested information, the investigator should demonstrate equivalency with the DKQPs for the data set by following the guidance presented in Sections 5 and 6 of the DKQP Guidance.
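The questionnaire review described above is essentially a three-item completeness check followed by an escalation path. The sketch below is one way to record it; the class `QuestionnaireCheck`, the function `next_step`, and the `lab_can_supply` flag are hypothetical names for illustration, where `lab_can_supply=None` means the laboratory has not yet been asked.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class QuestionnaireCheck:
    """Hypothetical record of a reviewer's check of the DKQP questionnaire."""
    all_questions_answered: bool
    signed: bool
    narrative_included: bool

    def missing_items(self) -> List[str]:
        # List each completeness requirement that is not met.
        missing = []
        if not self.all_questions_answered:
            missing.append("answers to all questions")
        if not self.signed:
            missing.append("signature")
        if not self.narrative_included:
            missing.append("narrative of nonconformances")
        return missing


def next_step(check: QuestionnaireCheck,
              lab_can_supply: Optional[bool] = None) -> str:
    """Return the follow-up action for the investigator (illustrative only)."""
    missing = check.missing_items()
    if not missing:
        return "questionnaire complete"
    if lab_can_supply is False:
        # Laboratory cannot provide the missing items.
        return "demonstrate equivalency per Sections 5 and 6 of the DKQP Guidance"
    # Not yet requested, or the laboratory can provide the items.
    return "request from laboratory: " + ", ".join(missing)
```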

4.6.3.2 Reporting Limits

The RL is the lowest concentration at which a method can measure a target analyte with the necessary degree of accuracy and precision. As defined in N.J.A.C. 7:26E-2.1(a)3, the RL for an organic compound is derived from the lowest concentration standard for that compound used in the calibration of the method, as adjusted by sample-specific preparation and analysis factors (for example, sample dilutions and percent solids). The RL for an inorganic analyte is derived from the concentration of that analyte in the lowest level check standard (which could be the lowest calibration standard in a multi-point calibration curve). RLs are method- and laboratory-specific. Laboratories are required to report the RLs for all compounds for all samples per Appendix A of N.J.A.C. 7:26E.
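To illustrate how sample-specific factors raise an RL, the sketch below scales a nominal RL by the dilution factor and, for solids reported on a dry-weight basis, divides by the solids fraction. This is an assumption about a common convention, not a laboratory's actual procedure: `sample_specific_rl` and `base_rl` are hypothetical, and `base_rl` is assumed to already reflect the lowest calibration standard at the method's nominal sample size and final volume. Individual laboratories may apply additional factors.

```python
from typing import Optional


def sample_specific_rl(base_rl: float,
                       dilution_factor: float = 1.0,
                       percent_solids: Optional[float] = None) -> float:
    """Adjust a nominal RL for sample-specific factors (illustrative sketch).

    base_rl: hypothetical nominal RL in sample units.
    dilution_factor: analytical dilution applied to the sample (1.0 = none).
    percent_solids: for solids reported on a dry-weight basis; None for
        aqueous samples.
    """
    if dilution_factor < 1.0:
        raise ValueError("dilution_factor must be >= 1")
    rl = base_rl * dilution_factor
    if percent_solids is not None:
        if not 0.0 < percent_solids <= 100.0:
            raise ValueError("percent_solids must be in (0, 100]")
        # Dry-weight correction: a lower solids content raises the RL.
        rl /= percent_solids / 100.0
    return rl
```

For example, a hypothetical nominal RL of 5 μg/kg with a tenfold dilution and 80 percent solids gives 5 × 10 / 0.80 = 62.5 μg/kg, which shows how a dilution alone can push an RL above a low standard.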


RLs and their relationship to applicable standards and/or screening levels present one of the most significant challenges to laboratories and investigators. A commonly occurring scenario involves volatile analyses and the default impact to ground water standards: when multiple aliphatic compounds are present, the sample is diluted because of the presence of one analyte with a very high standard, resulting in an inability to “see down to the standard” for another compound. This frequently