
Lecture Notes

on

Compositional Data Analysis

V. Pawlowsky-Glahn

J. J. Egozcue

R. Tolosana-Delgado

2007

Prof. Dr. Vera Pawlowsky-Glahn

Catedrática de Universidad (Full professor)

University of Girona

Dept. of Computer Science and Applied Mathematics

Campus Montilivi — P-1, E-17071 Girona, Spain


Prof. Dr. Juan José Egozcue

Catedrático de Universidad (Full professor)

Technical University of Catalonia

Dept. of Applied Mathematics III

Campus Nord — C-2, E-08034 Barcelona, Spain

Dr. Raimon Tolosana-Delgado

Wissenschaftlicher Mitarbeiter (fellow scientist)

Georg-August-Universität Göttingen

Dept. of Sedimentology and Environmental Geology

Goldschmidtstr. 3, D-37077 Göttingen, Germany


Preface

These notes have been prepared as support to a short course on compositional data

analysis. Their aim is to transmit the basic concepts and skills for simple applications,

thus setting the premises for more advanced projects. One should be aware that frequent

updates will be required in the near future, as the theory presented here is a field of

active research.

The notes are based both on the monograph by John Aitchison, Statistical analysis of compositional data (1986), and on recent developments that complement the theory developed there, mainly those by Aitchison, 1997; Barceló-Vidal et al., 2001; Billheimer et al., 2001; Pawlowsky-Glahn and Egozcue, 2001, 2002; Aitchison et al., 2002; Egozcue et al., 2003; Pawlowsky-Glahn, 2003; Egozcue and Pawlowsky-Glahn, 2005. To avoid constant references to these documents, only complementary references will be given within the text.

Readers should be aware that a thorough understanding of compositional data analysis requires a good knowledge of standard univariate statistics, basic linear algebra and calculus, complemented with an introduction to applied multivariate statistical analysis. The specific subjects of interest in multivariate statistics in real space can be learned in parallel from standard textbooks, for instance Krzanowski (1988) and Krzanowski and Marriott (1994) (in English), Fahrmeir and Hamerle (1984) (in German), or Peña (2002) (in Spanish). Thus, the intended audience ranges from advanced students in applied sciences to practitioners.

Concerning notation, it is important to note that, to conform to the standard practice of registering samples as a matrix where each row is a sample and each column is a variate, vectors will be considered as row vectors, which makes the transfer from theoretical concepts to practical computations easier.

Most chapters end with a list of exercises. They are formulated in such a way that they have to be solved using appropriate software. User-friendly, MS-Excel based freeware to facilitate this task can be downloaded from the web at the following address:

http://ima.udg.edu/Recerca/EIO/inici

eng.html

Details about this package can be found in Thió-Henestrosa and Martín-Fernández (2005) or Thió-Henestrosa et al. (2005). A whole library of subroutines for Matlab is also available, developed mainly by John Aitchison, which can be obtained from John Aitchison himself or from any member of the compositional data analysis group at the University of Girona. Finally, those interested in working with R (or S-plus) may either use the set of functions “mixeR” by Bren (2003), or the full-fledged package “compositions” by van den Boogaart and Tolosana-Delgado (2005).

Contents

Preface

1 Introduction

2 Compositional data and their sample space
2.1 Basic concepts
2.2 Principles of compositional analysis
2.2.1 Scale invariance
2.2.2 Permutation invariance
2.2.3 Subcompositional coherence
2.3 Exercises

3 The Aitchison geometry
3.1 General comments
3.2 Vector space structure
3.3 Inner product, norm and distance
3.4 Geometric figures
3.5 Exercises

4 Coordinate representation
4.1 Introduction
4.2 Compositional observations in real space
4.3 Generating systems
4.4 Orthonormal coordinates
4.5 Working in coordinates
4.6 Additive log-ratio coordinates
4.7 Simplicial matrix notation
4.8 Exercises

5 Exploratory data analysis
5.1 General remarks
5.2 Centre, total variance and variation matrix
5.3 Centring and scaling
5.4 The biplot: a graphical display
5.4.1 Construction of a biplot
5.4.2 Interpretation of a compositional biplot
5.5 Exploratory analysis of coordinates
5.6 Illustration
5.7 Exercises

6 Distributions on the simplex
6.1 The normal distribution on $\mathcal{S}^D$
6.2 Other distributions
6.3 Tests of normality on $\mathcal{S}^D$
6.3.1 Marginal univariate distributions
6.3.2 Bivariate angle distribution
6.3.3 Radius test
6.4 Exercises

7 Statistical inference
7.1 Testing hypothesis about two groups
7.2 Probability and confidence regions for compositional data
7.3 Exercises

8 Compositional processes
8.1 Linear processes: exponential growth or decay of mass
8.2 Complementary processes
8.3 Mixture process
8.4 Linear regression with compositional response
8.5 Principal component analysis

References

Appendices
A. Plotting a ternary diagram
B. Parametrisation of an elliptic region

Chapter 1

Introduction

The awareness of problems related to the statistical analysis of compositional data dates back to a paper by Karl Pearson (1897), whose title began significantly with the words “On a form of spurious correlation ...”. Since then, as stated in Aitchison and Egozcue (2005), the way to deal with this type of data has gone through roughly four phases, which they describe as follows:

The pre-1960 phase rode on the crest of the developmental wave of standard multivariate statistical analysis, an appropriate form of analysis for the investigation of problems with real sample spaces. Despite the obvious fact that a compositional vector, with components the proportions of some whole, is subject to a constant-sum constraint, and so is entirely different from the unconstrained vector of standard unconstrained multivariate statistical analysis, scientists and statisticians alike seemed almost to delight in applying all the intricacies of standard multivariate analysis, in particular correlation analysis, to compositional vectors. We know that Karl Pearson, in his definitive 1897 paper on spurious correlations, had pointed out the pitfalls of interpretation of such activity, but it was not until around 1960 that specific condemnation of such an approach emerged.

In the second phase, the primary critic of the application of standard multivariate analysis to compositional data was the geologist Felix Chayes (1960), whose main criticism was in the interpretation of product-moment correlation between components of a geochemical composition, with negative bias the distorting factor from the viewpoint of any sensible interpretation. For this problem of negative bias, often referred to as the closure problem, Sarmanov and Vistelius (1959) supplemented the Chayes criticism in geological applications and Mosimann (1962) drew the attention of biologists to it. However, even conscious researchers, instead of working towards an appropriate methodology, adopted what can only be described as a pathological approach: distortion of standard multivariate techniques when applied to compositional data was the main goal of study.

The third phase was the realisation by Aitchison in the 1980’s that compositions provide information about relative, not absolute, values of components, that therefore every statement about a composition can be stated in terms of ratios of components (Aitchison, 1981, 1982, 1983, 1984). The facts that logratios are easier to handle mathematically than ratios and that a logratio transformation provides a one-to-one mapping on to a real space led to the advocacy of a methodology based on a variety of logratio transformations. These transformations allowed the use of standard unconstrained multivariate statistics applied to transformed data, with inferences translatable back into compositional statements.

The fourth phase arises from the realisation that the internal simplicial operation of perturbation, the external operation of powering, and the simplicial metric, define a metric vector space (indeed a Hilbert space) (Billheimer et al. 1997, 2001; Pawlowsky-Glahn and Egozcue, 2001). So, many compositional problems can be investigated within this space with its specific algebraic-geometric structure. There has thus arisen a staying-in-the-simplex approach to the solution of many compositional problems (Mateu-Figueras, 2003; Pawlowsky-Glahn, 2003). This staying-in-the-simplex point of view proposes to represent compositions by their coordinates, as they live in an Euclidean space, and to interpret them and their relationships from their representation in the simplex. Accordingly, the sample space of random compositions is identified to be the simplex with a simplicial metric and measure, different from the usual Euclidean metric and Lebesgue measure in real space.

The third phase, which mainly deals with (log-ratio) transformation of raw data, deserves special attention because these techniques have been very popular and successful over more than a century; from the Galton-McAlister introduction of such an idea in 1879 in their logarithmic transformation for positive data, through variance-stabilising transformations for sound analysis of variance, to the general Box-Cox transformation (Box and Cox, 1964) and the implied transformations in generalised linear modelling. The logratio transformation principle was based on the fact that there is a one-to-one correspondence between compositional vectors and associated logratio vectors, so that any statement about compositions can be reformulated in terms of logratios, and vice versa. The advantage of the transformation is that it removes the problem of a constrained sample space, the unit simplex, to one of an unconstrained space, multivariate real space, opening up all available standard multivariate techniques. The original transformations were principally the additive logratio transformation (Aitchison, 1986, p.113) and the centred logratio transformation (Aitchison, 1986, p.79). The logratio transformation methodology seemed to be accepted by the statistical community; see for example the discussion of Aitchison (1982). The logratio methodology, however, drew fierce opposition from other disciplines, in particular from sections of the geological community. The reader who is interested in following the arguments that have arisen should examine the Letters to the Editor of Mathematical Geology over the period 1988 through 2002.

The notes presented here correspond to the fourth phase. They aim to summarise the state of the art in the staying-in-the-simplex approach. Therefore, the first part will be devoted to the algebraic-geometric structure of the simplex, which we call Aitchison geometry.


Chapter 2

Compositional data and their sample space

2.1 Basic concepts

Definition 2.1. A row vector, $\mathbf{x} = [x_1, x_2, \ldots, x_D]$, is defined as a D-part composition when all its components are strictly positive real numbers and they carry only relative information.

Indeed, that compositional information is relative is implicitly stated in the units, as they are always parts of a whole, like weight or volume percent, ppm, ppb, or molar proportions. The most common examples have a constant sum κ and are known in the geological literature as closed data (Chayes, 1971). Frequently, κ = 1, which means that measurements have been made in, or transformed to, parts per unit, or κ = 100, for measurements in percent. Other units are possible, like ppm or ppb, which are typical examples for compositional data where only a part of the composition has been recorded; or, as recent studies have shown, even concentration units (mg/L, meq/L, molarities and molalities), where no constant sum can be feasibly defined (Buccianti and Pawlowsky-Glahn, 2005; Otero et al., 2005).

Definition 2.2. The sample space of compositional data is the simplex, defined as

$$\mathcal{S}^D = \left\{ \mathbf{x} = [x_1, x_2, \ldots, x_D] \;\middle|\; x_i > 0,\ i = 1, 2, \ldots, D;\ \sum_{i=1}^{D} x_i = \kappa \right\}. \tag{2.1}$$

However, this definition does not include compositions in e.g. meq/L. Therefore, a more

general definition, together with its interpretation, is given in Section 2.2.

Definition 2.3. For any vector of D real positive components

z = [z

1

, z

2

, . . . , z

D

] ∈ R

D

+

5

6 Chapter 2. Sample space

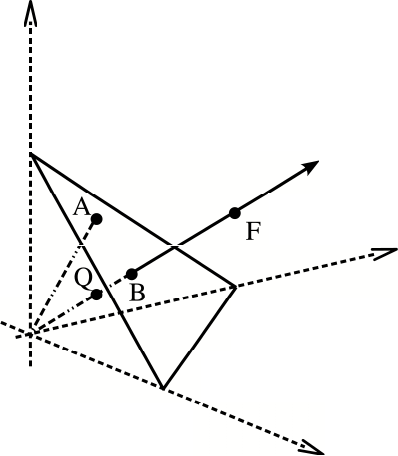

Figure 2.1: Left: Simplex imbedded in R

3

. Right: Ternary diagram.

(z

i

> 0 for a ll i = 1, 2, . . . , D), the closure of z is defind as

C(z) =

"

κ · z

1

P

D

i=1

z

i

,

κ · z

2

P

D

i=1

z

i

, ··· ,

κ · z

D

P

D

i=1

z

i

#

.

The result is the same vector rescaled so that the sum of its components is κ. This operation is required for a formal definition of subcomposition. Note that κ depends on the units of measurement: usual values are 1 (proportions), 100 (%), $10^6$ (ppm) and $10^9$ (ppb).
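The closure operation is simple enough to sketch in a few lines of code. The following is a minimal illustration, not part of the original notes; the function name `closure` and its `kappa` argument are names chosen here for convenience.

```python
def closure(z, kappa=1.0):
    """Rescale a vector of strictly positive parts so that it sums to kappa."""
    if any(part <= 0 for part in z):
        raise ValueError("all parts must be strictly positive")
    total = sum(z)
    return [kappa * part / total for part in z]


# The same raw vector expressed in parts per unit and in percent.
raw = [2.0, 3.0, 5.0]
print(closure(raw))             # proportions, summing to 1
print(closure(raw, kappa=100))  # percentages, summing to 100
```

Only the constant κ differs between the two calls; the ratios between parts are the same in both results.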

Definition 2.4. Given a composition $\mathbf{x}$, a subcomposition $\mathbf{x}_s$ with s parts is obtained by applying the closure operation to a subvector $[x_{i_1}, x_{i_2}, \ldots, x_{i_s}]$ of $\mathbf{x}$. The subindexes $i_1, \ldots, i_s$ tell us which parts are selected in the subcomposition, not necessarily the first s ones.
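As a sketch of Definition 2.4, a subcomposition can be computed by selecting parts and closing the result. The 4-part composition below is made up for illustration, and the helper names `closure` and `subcomposition` are assumptions of this example; note that Python indices are zero-based, unlike the subindexes in the definition.

```python
def closure(z, kappa=1.0):
    """Rescale a vector of strictly positive parts so that it sums to kappa."""
    total = sum(z)
    return [kappa * part / total for part in z]


def subcomposition(x, indices, kappa=1.0):
    """Select the parts at the given zero-based positions and close them."""
    return closure([x[i] for i in indices], kappa)


# Hypothetical 4-part composition in percent.
x = [10.0, 20.0, 30.0, 40.0]

# Subcomposition formed by the first and third parts, again in percent.
print(subcomposition(x, [0, 2], kappa=100))
```

The ratio between the two selected parts (1 to 3) is the same in the subcomposition as in the full composition.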

Very often, compositions contain many variables; e.g., the major oxide bulk composition of igneous rocks has around 10 elements, and they are but a few of the total possible. Nevertheless, one seldom represents the full sample. In fact, most of the applied literature on compositional data analysis (mainly in geology) restricts its figures to 3-part (sub)compositions. For 3 parts, the simplex is an equilateral triangle, as the one represented in Figure 2.1 left, with vertices at $A = [\kappa, 0, 0]$, $B = [0, \kappa, 0]$ and $C = [0, 0, \kappa]$. But this is commonly visualized in the form of a ternary diagram, which is an equivalent representation. A ternary diagram is an equilateral triangle such that a generic sample $\mathbf{p} = [p_a, p_b, p_c]$ will plot at a distance $p_a$ from the side opposite vertex A, at a distance $p_b$ from the side opposite vertex B, and at a distance $p_c$ from the side opposite vertex C, as shown in Figure 2.1 right. The triplet $[p_a, p_b, p_c]$ is commonly called the barycentric coordinates of $\mathbf{p}$, easily interpretable but useless in plotting (plotting them would yield the three-dimensional left-hand plot of Figure 2.1). What is needed (to get the right-hand plot of the same figure) is the expression of the coordinates of the vertices and of the samples in a 2-dimensional Cartesian coordinate system $[u, v]$, and this is given in Appendix A.

Finally, if only some parts of the composition are available, we may either define

a fill up or residual value, or simply close the observed subcomposition. Note that,

since we almost never analyse every possible part, in fact we are always working with

a subcomposition: the subcomposition of analysed parts. In any case, both methods

(fill-up or closure) should lead to identical results.

2.2 Principles of compositional analysis

Three conditions should be fulfilled by any statistical method to be applied to com-

positions: scale invariance, permutation invariance, and subcompositional coherence

(Aitchison, 1986).

2.2.1 Scale invariance

The most important characteristic of compositional data is that they carry only relative information. Let us explain this concept with an example. In a paper with the suggestive title of “unexpected trend in the compositional maturity of second-cycle sands”, Solano-Acosta and Dutta (2005) analysed the lithologic composition of a sandstone and of its derived recent sands, looking at the percentage of grains made up of only quartz, of only feldspar, or of rock fragments. For medium sized grains coming from the parent sandstone, they report an average composition [Q, F, R] = [53, 41, 6] %, whereas for the daughter sands the mean values are [37, 53, 10] %. One expects that feldspar and rock fragments decrease as the sediment matures, thus they should be less important in a second generation sand. “Unexpectedly” (or apparently), this does not happen in their example. To pass from the parent sandstone to the daughter sand, we may think of several different changes, yielding exactly the same final composition. Assume those values were weight percent (in gr/100 gr of bulk sediment). Then, one of the following might have happened:

• Q suffered no change passing from sandstone to sand, but 35 gr F and 8 gr R were added to the sand (for instance, due to comminution of coarser grains of F and R from the sandstone),

• F was unchanged, but 25 gr Q were depleted from the sandstone and at the same time 2 gr R were added (for instance, because Q was better cemented in the sandstone, thus it tends to form coarser grains),

• any combination of the former two extremes.

8 Chapter 2. Sample space

The first two cases yield final masses of [53, 76, 14] gr, resp ectively [28, 41, 8] gr. In a

purely compositional data set, we do not know if we added or subtracted mass from

the sandstone to the sand. Thus, we cannot decide which of these cases really oc-

curred. Without further (non-compositional) information, there is no way to distin-

guish between [5 3, 76, 14] gr and [28, 41, 8] gr, as we only have the value of the sand

composition after closure. Closure is a projection of any p oint in the positive orthant

of D-dimensional real space onto the simplex. All points on a ray starting at the

origin (e. g . , [53, 76, 14] and [28, 41, 8 ]) are projected onto the same point of S

D

(e.g.,

[37, 53, 10] %). We say that the ray is an equivalence class and the point on S

D

a rep-

resentant of the class: Figure 2.2 shows this relationship. Moreover, if we change the

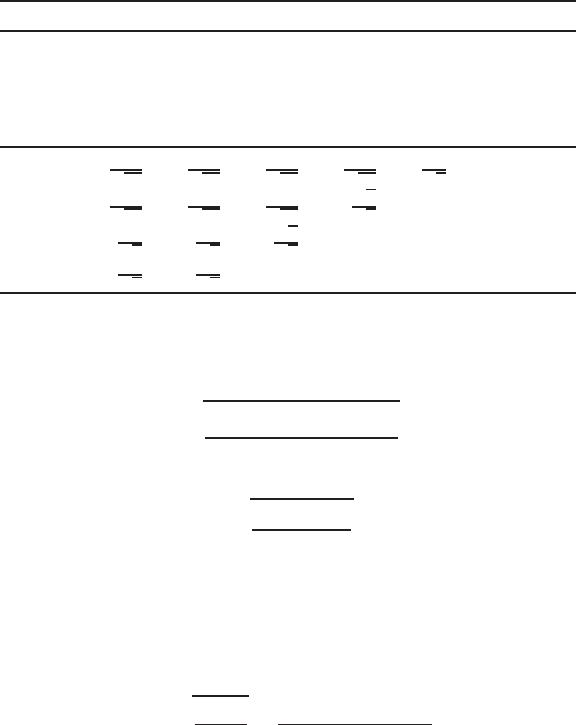

Figure 2 .2 : Repr e sentation of the compositional equivalence relationship. A represents the original

sandstone composition, B the final sand comp osition, F the amount of each part if feldspar was added

to the system (first hyp othesis), and Q the amount of each part if quartz was depleted from the system

(second hypothesis). Note that the po ints B, Q and F are compositionally equivalent.

units of our data (for instance, from % to ppm), we simply multiply all our points by

the constant of change of units, moving them along their rays to t he intersections with

another triangle, parallel to the plotted one.
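The sandstone example can be checked numerically. The sketch below closes the final masses of the two hypotheses to percent; the variable names are chosen here for illustration. Because the masses and the reported composition are rounded to whole numbers, the two hypotheses reproduce [37, 53, 10] % only to within rounding.

```python
def closure(z, kappa=1.0):
    """Rescale a vector of strictly positive parts so that it sums to kappa."""
    total = sum(z)
    return [kappa * part / total for part in z]


# Final masses [Q, F, R] in grams under the two hypotheses of the text.
feldspar_added = [53.0, 76.0, 14.0]   # first hypothesis
quartz_depleted = [28.0, 41.0, 8.0]   # second hypothesis

closed_f = closure(feldspar_added, kappa=100)
closed_q = closure(quartz_depleted, kappa=100)
print([round(v, 1) for v in closed_f])  # [37.1, 53.1, 9.8]
print([round(v, 1) for v in closed_q])  # [36.4, 53.2, 10.4]

# A change of units multiplies every part by the same constant,
# which moves the point along its ray and leaves the closure unchanged.
rescaled = [1.0e4 * v for v in feldspar_added]
same_point = all(
    abs(a - b) < 1e-9 for a, b in zip(closure(rescaled, kappa=100), closed_f)
)
print(same_point)  # True
```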

Definition 2.5. Two vectors of D positive real components $\mathbf{x}, \mathbf{y} \in \mathbb{R}^D_+$ ($x_i, y_i > 0$ for all $i = 1, 2, \ldots, D$) are compositionally equivalent if there exists a positive scalar $\lambda \in \mathbb{R}_+$ such that $\mathbf{x} = \lambda \cdot \mathbf{y}$ and, equivalently, $\mathcal{C}(\mathbf{x}) = \mathcal{C}(\mathbf{y})$.

It is highly reasonable to ask our analyses to yield the same result, independently of the value of λ. This is what Aitchison (1986) called scale invariance:


Definition 2.6. A function $f(\cdot)$ is scale-invariant if for any positive real value $\lambda \in \mathbb{R}_+$ and for any composition $\mathbf{x} \in \mathcal{S}^D$, the function satisfies $f(\lambda \mathbf{x}) = f(\mathbf{x})$, i.e. it yields the same result for all compositionally equivalent vectors.

This can only be achieved if $f(\cdot)$ is a function only of log-ratios of the parts in $\mathbf{x}$ (equivalently, of ratios of parts) (Aitchison, 1997; Barceló-Vidal et al., 2001).
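A quick numerical check makes the contrast concrete. In the sketch below, `log_ratio` and `first_part` are two hypothetical functions introduced only for this illustration: the first depends on the parts through a ratio and is scale-invariant, while the second reads off a raw part and is not.

```python
import math


def log_ratio(x, i, j):
    """Log-ratio of parts i and j (zero-based positions)."""
    return math.log(x[i] / x[j])


def first_part(x):
    """Raw value of the first part; depends on the scale of x."""
    return x[0]


x = [53.0, 76.0, 14.0]
lam = 0.01
scaled = [lam * part for part in x]

# The log-ratio is unaffected by the positive factor lam.
print(math.isclose(log_ratio(x, 0, 1), log_ratio(scaled, 0, 1)))  # True

# The raw part is not: it changes with the scale.
print(math.isclose(first_part(x), first_part(scaled)))  # False
```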

2.2.2 Permutation invariance

A function is permutation-invariant if it yields equivalent results when we change the ordering of our parts in the composition. Two examples might illustrate what “equivalent” means here. If we are computing the distance between our initial sandstone and our final sand compositions, this distance should be the same if we work with [Q, F, R] or if we work with [F, R, Q] (or any other permutation of the parts). On the other hand, if we are interested in the change that occurred from sandstone to sand, results should be equal after reordering. A classical way to get rid of the singularity of the classical covariance matrix of compositional data is to erase one component: this procedure is not permutation-invariant, as results will largely depend on which component is erased.

2.2.3 Subcompositional coherence

The final condition is subcompositional coherence: subcompositions should behave as orthogonal projections do in conventional real analysis. The size of a projected segment is less than or equal to the size of the segment itself. This general principle, though briefly stated, has several practical implications, explained in the next chapters. The most illustrative, however, are the following two.

• The distance measured between two full compositions must be greater than (or at least equal to) the distance between them when considering any subcomposition. This particular behaviour of the distance is called subcompositional dominance. Exercise 2.4 proves that the Euclidean distance between compositional vectors does not fulfill this condition, and is thus ill-suited to measure distance between compositions.

• If we erase a non-informative part, our results should not change; for instance, if we have available hydrogeochemical data from a source, and we are interested in classifying the kind of rocks that water washed, we will mostly use the relations between some major oxides and ions ($\mathrm{SO}_4^{2-}$, $\mathrm{HCO}_3^{-}$, $\mathrm{Cl}^{-}$, to mention a few), and we should get the same results working with meq/L (including implicitly water content), or in weight percent of the ions of interest.


2.3 Exercises

Exercise 2.1. If data have been measured in ppm, what is the value of the constant

κ?

Exercise 2.2. Plot a ternary diagram using different values for the constant sum κ.

Exercise 2.3. Verify that data in Table 2.1 satisfy the conditions for being compositional. Plot them in a ternary diagram.

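Table 2.1: Simulated data set.

| sample | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| $x_1$ | 79.07 | 31.74 | 18.61 | 49.51 | 29.22 | 21.99 | 11.74 | 24.47 | 5.14 | 15.54 |
| $x_2$ | 12.83 | 56.69 | 72.05 | 15.11 | 52.36 | 59.91 | 65.04 | 52.53 | 38.39 | 57.34 |
| $x_3$ | 8.10 | 11.57 | 9.34 | 35.38 | 18.42 | 18.10 | 23.22 | 23.00 | 56.47 | 27.11 |

| sample | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 |
|---|---|---|---|---|---|---|---|---|---|---|
| $x_1$ | 57.17 | 52.25 | 77.40 | 10.54 | 46.14 | 16.29 | 32.27 | 40.73 | 49.29 | 61.49 |
| $x_2$ | 3.81 | 23.73 | 9.13 | 20.34 | 15.97 | 69.18 | 36.20 | 47.41 | 42.74 | 7.63 |
| $x_3$ | 39.02 | 24.02 | 13.47 | 69.12 | 37.89 | 14.53 | 31.53 | 11.86 | 7.97 | 30.88 |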

Exercise 2.4. Compute the Euclidean distance between the first two vectors of Table 2.1. Imagine we originally measured a fourth variable $x_4$, which was constant for all samples, and equal to 5%. Take the first two vectors, close them to sum up to 95%, add the fourth variable to them (so that they sum up to 100%) and compute the Euclidean distance between the closed vectors. If the Euclidean distance is subcompositionally dominant, the distance measured in 4 parts must be greater than or equal to the distance measured in the 3-part subcomposition.

Chapter 3

The Aitchison geometry

3.1 General comments

In real space we are used to adding vectors, multiplying them by a constant or scalar value, looking for properties like orthogonality, or computing the distance between two points. All this, and much more, is possible because the real space is a linear vector space with a Euclidean metric structure. We are familiar with its geometric structure, the Euclidean geometry, and we represent our observations within this geometry. But this geometry is not a proper geometry for compositional data.

To illustrate this assertion, consider the compositions

[5, 65, 30], [10, 60, 30], [50, 20, 30], and [55, 15, 30].

Intuitively we would say that the difference between [5, 65, 30] and [10, 60, 30] is not the same as the difference between [50, 20, 30] and [55, 15, 30]. The Euclidean distance between them is certainly the same, as there is a difference of 5 units in both the first and the second components, but in the first case the proportion in the first component is doubled, while in the second case the relative increase is about 10%, and this relative difference seems more adequate to describe compositional variability.

This is not the only reason for discarding Euclidean geometry as a proper tool for analysing compositional data. Problems might appear in many situations, like those where results end up outside the sample space, e.g. when translating compositional vectors, or computing joint confidence regions for random compositions under assumptions of normality, or using hexagonal confidence regions. This last case is paradigmatic, as such hexagons are often naively cut when they lie partly outside the ternary diagram, and this without regard to any probability adjustment. Problems of this kind are not just theoretical: they are practical and interpretative.

What is needed is a sensible geometry to work with compositional data. In the simplex, things appear not as simple as we feel they are in real space, but it is possible to find a way of working in it that is completely analogous. First of all, we can define two operations which give the simplex a vector space structure. The first one is the perturbation operation, which is analogous to addition in real space; the second one is the power transformation, which is analogous to multiplication by a scalar in real space. Both require in their definition the closure operation; recall that closure is nothing else but the projection of a vector with positive components onto the simplex. Second, we can obtain a linear vector space structure, and thus a geometry, on the simplex. We just add an inner product, a norm and a distance to the previous definitions. With the inner product we can project compositions onto particular directions, check for orthogonality and determine angles between compositional vectors; with the norm we can compute the length of a composition; the possibilities of a distance should be clear. With all together we can operate in the simplex in the same way as we operate in real space.

3.2 Vector space structure

The basic operations required for a vector space structure of the simplex follow. They use the closure operation given in Definition 2.3.

Definition 3.1. Perturbation of a composition $\mathbf{x} \in \mathcal{S}^D$ by a composition $\mathbf{y} \in \mathcal{S}^D$,

$$\mathbf{x} \oplus \mathbf{y} = \mathcal{C}\left[x_1 y_1, x_2 y_2, \ldots, x_D y_D\right].$$

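Definition 3.2. Power transformation of a composition $\mathbf{x} \in \mathcal{S}^D$ by a constant $\alpha \in \mathbb{R}$,

$$\alpha \odot \mathbf{x} = \mathcal{C}\left[x_1^{\alpha}, x_2^{\alpha}, \ldots, x_D^{\alpha}\right].$$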

For an illustration of the effect of perturbation and power transformation on a set

of compositions, see Figure 3.1.
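Definitions 3.1 and 3.2 translate directly into code. The sketch below is a minimal illustration with κ = 1; the function names `perturb` and `power` are chosen here, the composition `x` is made up, and the perturbation vector and exponent are the ones quoted in the caption of Figure 3.1.

```python
def closure(z, kappa=1.0):
    """Rescale a vector of strictly positive parts so that it sums to kappa."""
    total = sum(z)
    return [kappa * part / total for part in z]


def perturb(x, y, kappa=1.0):
    """Perturbation: component-wise product followed by closure."""
    return closure([xi * yi for xi, yi in zip(x, y)], kappa)


def power(alpha, x, kappa=1.0):
    """Power transformation: component-wise power followed by closure."""
    return closure([xi ** alpha for xi in x], kappa)


x = [0.2, 0.3, 0.5]   # hypothetical composition
p = [0.1, 0.1, 0.8]   # perturbation used in Figure 3.1
alpha = 0.2           # exponent used in Figure 3.1

print(perturb(x, p))
print(power(alpha, x))
```

Both results are again compositions summing to 1, so the two operations never leave the simplex.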

The simplex, $(\mathcal{S}^D, \oplus, \odot)$, with the perturbation operation and the power transformation, is a vector space. This means the following properties hold, making them analogous to translation and scalar multiplication:

Property 3.1. $(\mathcal{S}^D, \oplus)$ has a commutative group structure; i.e., for $\mathbf{x}, \mathbf{y}, \mathbf{z} \in \mathcal{S}^D$ it holds

1. commutative property: $\mathbf{x} \oplus \mathbf{y} = \mathbf{y} \oplus \mathbf{x}$;

2. associative property: $(\mathbf{x} \oplus \mathbf{y}) \oplus \mathbf{z} = \mathbf{x} \oplus (\mathbf{y} \oplus \mathbf{z})$;

3. neutral element:

$$\mathbf{n} = \mathcal{C}[1, 1, \ldots, 1] = \left[\frac{1}{D}, \frac{1}{D}, \ldots, \frac{1}{D}\right];$$

$\mathbf{n}$ is the barycenter of the simplex and is unique;

4. inverse of $\mathbf{x}$: $\mathbf{x}^{-1} = \mathcal{C}\left[x_1^{-1}, x_2^{-1}, \ldots, x_D^{-1}\right]$; thus, $\mathbf{x} \oplus \mathbf{x}^{-1} = \mathbf{n}$. By analogy with standard operations in real space, we will write $\mathbf{x} \oplus \mathbf{y}^{-1} = \mathbf{x} \ominus \mathbf{y}$.

Figure 3.1: Left: Perturbation of initial compositions (◦) by p = [0.1, 0.1, 0.8] resulting in compositions (⋆). Right: Power transformation of compositions (⋆) by α = 0.2 resulting in compositions (◦).

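Property 3.2. The power transformation satisfies the properties of an external product. For $\mathbf{x}, \mathbf{y} \in \mathcal{S}^D$, $\alpha, \beta \in \mathbb{R}$ it holds

1. associative property: $\alpha \odot (\beta \odot \mathbf{x}) = (\alpha \cdot \beta) \odot \mathbf{x}$;

2. distributive property 1: $\alpha \odot (\mathbf{x} \oplus \mathbf{y}) = (\alpha \odot \mathbf{x}) \oplus (\alpha \odot \mathbf{y})$;

3. distributive property 2: $(\alpha + \beta) \odot \mathbf{x} = (\alpha \odot \mathbf{x}) \oplus (\beta \odot \mathbf{x})$;

4. neutral element: $1 \odot \mathbf{x} = \mathbf{x}$; the neutral element is unique.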
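The properties listed above can be spot-checked numerically. The following sketch is not a proof: it only verifies the identities for one arbitrary pair of compositions and one arbitrary pair of exponents, all chosen here for illustration, up to floating-point tolerance.

```python
def closure(z, kappa=1.0):
    """Rescale a vector of strictly positive parts so that it sums to kappa."""
    total = sum(z)
    return [kappa * part / total for part in z]


def perturb(x, y):
    """Perturbation of x by y."""
    return closure([xi * yi for xi, yi in zip(x, y)])


def power(alpha, x):
    """Power transformation of x by the real constant alpha."""
    return closure([xi ** alpha for xi in x])


def same(x, y, tol=1e-9):
    """Component-wise equality up to a small numerical tolerance."""
    return all(abs(xi - yi) <= tol for xi, yi in zip(x, y))


x = closure([1.0, 2.0, 7.0])
y = closure([3.0, 3.0, 4.0])
alpha, beta = 0.5, -2.0

neutral = closure([1.0] * len(x))   # barycenter [1/D, ..., 1/D]
inverse_x = power(-1.0, x)          # inverse of x under perturbation

checks = {
    "commutative": same(perturb(x, y), perturb(y, x)),
    "neutral element": same(perturb(x, neutral), x),
    "inverse": same(perturb(x, inverse_x), neutral),
    "associative (power)": same(power(alpha, power(beta, x)),
                                power(alpha * beta, x)),
    "distributive 1": same(power(alpha, perturb(x, y)),
                           perturb(power(alpha, x), power(alpha, y))),
    "distributive 2": same(power(alpha + beta, x),
                           perturb(power(alpha, x), power(beta, x))),
}
for name, holds in checks.items():
    print(name, holds)
```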

Note that the closure operation cancels out any constant and, thus, the closure constant itself is not important from a mathematical point of view. This fact allows us to omit the closure in intermediate steps of any computation without problem. It also has important practical implications, as shall be seen during simplicial principal component analysis. We can express this property for $\mathbf{z} \in \mathbb{R}^D_+$ and $\mathbf{x} \in \mathcal{S}^D$ as

$$\mathbf{x} \oplus (\alpha \odot \mathbf{z}) = \mathbf{x} \oplus (\alpha \odot \mathcal{C}(\mathbf{z})). \tag{3.1}$$

Nevertheless, one should always be aware that the closure constant is very important for the correct interpretation of the units of the problem at hand. Therefore, controlling for the right units should be the last step in any analysis.
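Equation (3.1) can be illustrated with a short numerical check. In this sketch the vectors and the exponent are arbitrary values chosen for the example; `z` is deliberately left unclosed on the left-hand side.

```python
def closure(z, kappa=1.0):
    """Rescale a vector of strictly positive parts so that it sums to kappa."""
    total = sum(z)
    return [kappa * part / total for part in z]


def perturb(x, y):
    """Perturbation of x by y."""
    return closure([xi * yi for xi, yi in zip(x, y)])


def power(alpha, x):
    """Power transformation of x by the real constant alpha."""
    return closure([xi ** alpha for xi in x])


x = closure([0.2, 0.3, 0.5])
z = [12.0, 30.0, 58.0]   # positive vector, not closed
alpha = 1.7

lhs = perturb(x, power(alpha, z))
rhs = perturb(x, power(alpha, closure(z)))
print(all(abs(a - b) < 1e-9 for a, b in zip(lhs, rhs)))  # True

# The closure constant only fixes the units of the final result.
print(closure(lhs, kappa=100))
```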


3.3 Inner product, norm and distance

To obtain a linear vector space structure, we take the following inner product, with

associated norm and distance:

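Definition 3.3. Inner product of $\mathbf{x}, \mathbf{y} \in \mathcal{S}^D$,

$$\langle \mathbf{x}, \mathbf{y} \rangle_a = \frac{1}{2D} \sum_{i=1}^{D} \sum_{j=1}^{D} \ln\frac{x_i}{x_j} \ln\frac{y_i}{y_j}.$$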

Definition 3.4. Norm of $\mathbf{x} \in \mathcal{S}^D$,

$$\|\mathbf{x}\|_a = \sqrt{\frac{1}{2D} \sum_{i=1}^{D} \sum_{j=1}^{D} \left(\ln\frac{x_i}{x_j}\right)^2}.$$

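Definition 3.5. Distance between $\mathbf{x}$ and $\mathbf{y} \in \mathcal{S}^D$,

$$d_a(\mathbf{x}, \mathbf{y}) = \|\mathbf{x} \ominus \mathbf{y}\|_a = \sqrt{\frac{1}{2D} \sum_{i=1}^{D} \sum_{j=1}^{D} \left( \ln\frac{x_i}{x_j} - \ln\frac{y_i}{y_j} \right)^2 } .$$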

In practice, alternative but equivalent expressions of the inner product, norm and distance may be useful. Two possible alternatives of the inner product follow:

$$\langle \mathbf{x}, \mathbf{y} \rangle_a = \frac{1}{D} \sum_{i<j} \ln\frac{x_i}{x_j} \, \ln\frac{y_i}{y_j} = \sum_{i=1}^{D} \ln x_i \ln y_i - \frac{1}{D} \left( \sum_{j=1}^{D} \ln x_j \right) \left( \sum_{k=1}^{D} \ln y_k \right) ,$$

where the notation $\sum_{i<j}$ means exactly $\sum_{i=1}^{D-1} \sum_{j=i+1}^{D}$.
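As a numerical illustration, the sketch below evaluates the inner product of Definition 3.3 in three ways: the double sum over all pairs, the sum over pairs $i<j$, and the expression in terms of logarithms of parts, $\sum_i \ln x_i \ln y_i - \frac{1}{D}(\sum_j \ln x_j)(\sum_k \ln y_k)$. It also checks that the distance of Definition 3.5 equals the norm of $\mathbf{x} \ominus \mathbf{y}$. All function names and the two test compositions are illustrative assumptions.

```python
import math

def inner_a(x, y):
    """Definition 3.3: double sum over all ordered pairs (i, j)."""
    D = len(x)
    total = sum(math.log(x[i] / x[j]) * math.log(y[i] / y[j])
                for i in range(D) for j in range(D))
    return total / (2 * D)

def inner_a_pairs(x, y):
    """Equivalent form: sum over pairs i < j, divided by D."""
    D = len(x)
    total = sum(math.log(x[i] / x[j]) * math.log(y[i] / y[j])
                for i in range(D) for j in range(i + 1, D))
    return total / D

def inner_a_logs(x, y):
    """Equivalent form written with logarithms of single parts."""
    D = len(x)
    lx = [math.log(v) for v in x]
    ly = [math.log(v) for v in y]
    return sum(a * b for a, b in zip(lx, ly)) - sum(lx) * sum(ly) / D

def norm_a(x):
    """Definition 3.4."""
    return math.sqrt(inner_a(x, x))

def dist_a(x, y):
    """Definition 3.5, written with differences of log-ratios."""
    D = len(x)
    total = sum((math.log(x[i] / x[j]) - math.log(y[i] / y[j])) ** 2
                for i in range(D) for j in range(D))
    return math.sqrt(total / (2 * D))

x = [0.1, 0.3, 0.6]
y = [0.5, 0.2, 0.3]
# x (-) y without the final closure; log-ratios do not depend on it.
x_minus_y = [xi / yi for xi, yi in zip(x, y)]

values = (inner_a(x, y), inner_a_pairs(x, y), inner_a_logs(x, y))
```

The closure is skipped for `x_minus_y` because every quantity here depends only on ratios of parts.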

To refer to the properties of $(\mathcal{S}^D, \oplus, \odot)$ as a Euclidean linear vector space, we shall talk globally about the Aitchison geometry on the simplex, and in particular about the Aitchison distance, norm and inner product. Note that in mathematical textbooks, such a linear vector space is called either real Euclidean space or finite dimensional real Hilbert space.

The algebraic-geometric structure of $\mathcal{S}^D$ satisfies standard properties, like compatibility of the distance with perturbation and power transformation, i.e.

$$d_a(\mathbf{p} \oplus \mathbf{x}, \mathbf{p} \oplus \mathbf{y}) = d_a(\mathbf{x}, \mathbf{y}), \qquad d_a(\alpha \odot \mathbf{x}, \alpha \odot \mathbf{y}) = |\alpha| \, d_a(\mathbf{x}, \mathbf{y}),$$

for any $\mathbf{x}, \mathbf{y}, \mathbf{p} \in \mathcal{S}^D$ and $\alpha \in \mathbb{R}$. For a discussion of these and other properties, see (Billheimer et al., 2001) or (Pawlowsky-Glahn and Egozcue, 2001). For a comparison with other measures of difference obtained as restrictions of distances in $\mathbb{R}^D$ to $\mathcal{S}^D$, see (Martín-Fernández et al., 1998; Martín-Fernández et al., 1999; Aitchison et al., 2000; Martín-Fernández, 2001). The Aitchison distance is subcompositionally coherent, as this whole set of operations induces the same linear vector space structure in the subspace corresponding to the subcomposition. Finally, the distance is subcompositionally dominant, as shown in Exercise 3.7.


3.4 Geometric figures

Within this framework, we can define lines in $\mathcal{S}^D$, which we call compositional lines, as $\mathbf{y} = \mathbf{x}_0 \oplus (\alpha \odot \mathbf{x})$, with $\mathbf{x}_0$ the starting point and $\mathbf{x}$ the leading vector. Note that $\mathbf{y}$, $\mathbf{x}_0$ and $\mathbf{x}$ are elements of $\mathcal{S}^D$, while the coefficient $\alpha$ varies in $\mathbb{R}$. To illustrate what we understand by compositional lines, Figure 3.2 shows two families of parallel lines in a ternary diagram, forming a square, orthogonal grid of side equal to one Aitchison distance unit. Recall that parallel lines have the same leading vector, but different starting points, like for instance $\mathbf{y}_1 = \mathbf{x}_1 \oplus (\alpha \odot \mathbf{x})$ and $\mathbf{y}_2 = \mathbf{x}_2 \oplus (\alpha \odot \mathbf{x})$, while orthogonal lines are those for which the inner product of the leading vectors is zero, i.e., for $\mathbf{y}_1 = \mathbf{x}_0 \oplus (\alpha_1 \odot \mathbf{x}_1)$ and $\mathbf{y}_2 = \mathbf{x}_0 \oplus (\alpha_2 \odot \mathbf{x}_2)$, with $\mathbf{x}_0$ their intersection point and $\mathbf{x}_1$, $\mathbf{x}_2$ the corresponding leading vectors, it holds $\langle \mathbf{x}_1, \mathbf{x}_2 \rangle_a = 0$. Thus, orthogonal means here that the inner product given in Definition 3.3 of the leading vectors of two lines, one of each family, is zero, and one Aitchison distance unit is measured by the distance given in Definition 3.5.

Figure 3.2: Orthogonal grids of compositional lines in $\mathcal{S}^3$, equally spaced, 1 unit in Aitchison distance (Def. 3.5). The grid on the right is rotated 45° with respect to the grid on the left.

Once we have a well defined geometry, it is straightforward to define any geometric

figure we might be interested in, like for instance circles, ellipses, or rhomboids, as

illustrated in Figure 3.3.

3.5 Exercises

Exercise 3.1. Consider the two vectors [0.7, 0.4, 0.8] and [0.2, 0.8, 0.1]. Perturb one

vector by the other with and without previous closure. Is there any difference?

Exercise 3.2. Perturb each sample of the data set given in Table 2.1 with $\mathbf{x}_1 = \mathcal{C}[0.7, 0.4, 0.8]$ and plot the initial and the resulting perturbed data set. What do you observe?

Figure 3.3: Circles and ellipses (left) and perturbation of a segment (right) in $\mathcal{S}^3$.

Exercise 3.3. Apply the power transformation with $\alpha$ ranging from $-3$ to $+3$ in steps of 0.5 to $\mathbf{x}_1 = \mathcal{C}[0.7, 0.4, 0.8]$ and plot the resulting set of compositions. Join them by a line. What do you observe?

Exercise 3.4. Perturb the compositions obtained in Ex. 3.3 by $\mathbf{x}_2 = \mathcal{C}[0.2, 0.8, 0.1]$. What is the result?

Exercise 3.5. Compute the Aitchison inner product of $\mathbf{x}_1 = \mathcal{C}[0.7, 0.4, 0.8]$ and $\mathbf{x}_2 = \mathcal{C}[0.2, 0.8, 0.1]$. Are they orthogonal?

Exercise 3.6. Compute the Aitchison norm of $\mathbf{x}_1 = \mathcal{C}[0.7, 0.4, 0.8]$ and call it $a$. Apply to $\mathbf{x}_1$ the power transformation $\alpha \odot \mathbf{x}_1$ with $\alpha = 1/a$. Compute the Aitchison norm of the resulting composition. How do you interpret the result?

Exercise 3.7. Re-do Exercise 2.4, but using the Aitchison distance given in Definition 3.5. Is it subcompositionally dominant?

Exercise 3.8. In a 2-part composition $\mathbf{x} = [x_1, x_2]$, simplify the formula for the Aitchison distance, taking $x_2 = 1 - x_1$ (so, using $\kappa = 1$). Use it to plot 7 equally-spaced points in the segment $(0, 1) = \mathcal{S}^2$, from $x_1 = 0.014$ to $x_1 = 0.986$.

Exercise 3.9. In a mineral assemblage, several radioactive isotopes have been measured, obtaining $[^{238}\mathrm{U}, {}^{232}\mathrm{Th}, {}^{40}\mathrm{K}] = [150, 30, 110]$ ppm. Which will be the composition after $\Delta t = 10^9$ years? And after another $\Delta t$ years? Which was the composition $\Delta t$ years ago? And $\Delta t$ years before that? Close these 5 compositions and represent them in a ternary diagram. What do you see? Could you write the evolution as an equation? (Half-life disintegration periods: $[^{238}\mathrm{U}, {}^{232}\mathrm{Th}, {}^{40}\mathrm{K}] = [4.468; 14.05; 1.277] \cdot 10^9$ years)

Chapter 4

Coordinate representation

4.1 Introduction

J. Aitchison (1986) used the fact that for compositional data size is irrelevant (as interest lies in relative proportions of the components measured) to introduce transformations based on ratios, the essential ones being the additive log-ratio transformation (alr) and the centred log-ratio transformation (clr). Then, he applied classical statistical analysis to the transformed observations, using the alr transformation for modelling, and the clr transformation for those techniques based on a metric. The underlying reason was that the alr transformation does not preserve distances, whereas the clr transformation preserves distances but leads to a singular covariance matrix. In mathematical terms, we say that the alr transformation is an isomorphism, but not an isometry, while the clr transformation is an isometry, and thus also an isomorphism, but between $\mathcal{S}^D$ and a subspace of $\mathbb{R}^D$, leading to degenerate distributions. Thus, Aitchison's approach opened up a rigorous strategy, but care had to be applied when using either of the two transformations.

Using the Euclidean vector space structure, it is possible to give an algebraic-geometric foundation to his approach, and it is possible to go even a step further. Within this framework, a transformation of coefficients is equivalent to expressing observations in a different coordinate system. We are used to working in an orthogonal system, known as a Cartesian coordinate system; we know how to change coordinates within this system and how to rotate axes. But neither the clr nor the alr transformations can be directly associated with an orthogonal coordinate system in the simplex, a fact that led Egozcue et al. (2003) to define a new transformation, called ilr (for isometric logratio) transformation, which is an isometry between $\mathcal{S}^D$ and $\mathbb{R}^{D-1}$, thus avoiding the drawbacks of both the alr and the clr. The ilr actually stands for the association of coordinates with compositions in an orthonormal system in general, and this is the framework we are going to present here, together with a particular kind of coordinates, named balances, because of their usefulness for modelling and interpretation.


4.2 Compositional observations in real space

Compositions in $\mathcal{S}^D$ are usually expressed in terms of the canonical basis $\{\vec{e}_1, \vec{e}_2, \ldots, \vec{e}_D\}$ of $\mathbb{R}^D$. In fact, any vector $\mathbf{x} \in \mathbb{R}^D$ can be written as

$$\mathbf{x} = x_1 [1, 0, \ldots, 0] + x_2 [0, 1, \ldots, 0] + \cdots + x_D [0, 0, \ldots, 1] = \sum_{i=1}^{D} x_i \cdot \vec{e}_i , \tag{4.1}$$

and this is the way we are used to interpreting it. The problem is that the set of vectors $\{\vec{e}_1, \vec{e}_2, \ldots, \vec{e}_D\}$ is neither a generating system nor a basis with respect to the vector space structure of $\mathcal{S}^D$ defined in Chapter 3. In fact, not every combination of coefficients gives an element of $\mathcal{S}^D$ (negative and zero values are not allowed), and the $\vec{e}_i$ do not belong to the simplex as defined in Equation (2.1). Nevertheless, in many cases it is interesting to express results in terms of compositions, so that interpretations are feasible in usual units, and therefore one of our purposes is to find a way to state statistically rigorous results in this coordinate system.

4.3 Generating systems

A first step for defining an appropriate orthonormal basis consists in finding a generating system which can be used to build the basis. A natural way to obtain such a generating system is to take $\{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_D\}$, with

$$\mathbf{w}_i = \mathcal{C}(\exp(\vec{e}_i)) = \mathcal{C}[1, 1, \ldots, e, \ldots, 1] , \quad i = 1, 2, \ldots, D , \tag{4.2}$$

where in each $\mathbf{w}_i$ the number $e$ is placed in the $i$-th column, and the operation $\exp(\cdot)$ is assumed to operate component-wise on a vector. In fact, taking into account Equation (3.1) and the usual rules of precedence for operations in a vector space, i.e., first the external operation, $\odot$, and afterwards the internal operation, $\oplus$, any vector $\mathbf{x} \in \mathcal{S}^D$ can be written

$$\mathbf{x} = \bigoplus_{i=1}^{D} \ln x_i \odot \mathbf{w}_i = \ln x_1 \odot [e, 1, \ldots, 1] \oplus \ln x_2 \odot [1, e, \ldots, 1] \oplus \cdots \oplus \ln x_D \odot [1, 1, \ldots, e] .$$

It is known that the coefficients with respect to a generating system are not unique; thus, the following equivalent expression can be used as well,

$$\mathbf{x} = \bigoplus_{i=1}^{D} \ln\frac{x_i}{g(\mathbf{x})} \odot \mathbf{w}_i = \ln\frac{x_1}{g(\mathbf{x})} \odot [e, 1, \ldots, 1] \oplus \cdots \oplus \ln\frac{x_D}{g(\mathbf{x})} \odot [1, 1, \ldots, e] ,$$

where

$$g(\mathbf{x}) = \left( \prod_{i=1}^{D} x_i \right)^{1/D} = \exp\left( \frac{1}{D} \sum_{i=1}^{D} \ln x_i \right) ,$$

is the component-wise geometric mean of the composition. One recognises in the coefficients of this second expression the centred logratio transformation defined by Aitchison (1986). Note that we could indeed replace the denominator by any constant. This non-uniqueness is consistent with the concept of compositions as equivalence classes (Barceló-Vidal et al., 2001).

We will denote by clr the transformation that gives the expression of a composition in centred logratio coefficients

$$\mathrm{clr}(\mathbf{x}) = \left[ \ln\frac{x_1}{g(\mathbf{x})}, \ln\frac{x_2}{g(\mathbf{x})}, \ldots, \ln\frac{x_D}{g(\mathbf{x})} \right] = \boldsymbol{\xi} . \tag{4.3}$$

The inverse transformation, which gives us the coefficients in the canonical basis of real space, is then

$$\mathrm{clr}^{-1}(\boldsymbol{\xi}) = \mathcal{C}[\exp(\xi_1), \exp(\xi_2), \ldots, \exp(\xi_D)] = \mathbf{x} . \tag{4.4}$$

The centred logratio transformation is symmetrical in the components, but the price is a new constraint on the transformed sample: the sum of the components has to be zero. This means that the transformed sample will lie on a plane, which goes through the origin of $\mathbb{R}^D$ and is orthogonal to the vector of unities $[1, 1, \ldots, 1]$. But, more importantly, it means also that for random compositions the covariance matrix of $\boldsymbol{\xi}$ is singular, i.e. the determinant is zero. Certainly, generalised inverses can be used in this context when necessary, but not all statistical packages are designed for it and problems might arise during computation. Furthermore, clr coefficients are not subcompositionally coherent, because the geometric mean of the parts of a subcomposition $g(\mathbf{x}_s)$ is not necessarily equal to that of the full composition, and thus the clr coefficients are in general not the same.
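A minimal sketch of Equations (4.3) and (4.4) follows. It illustrates the zero-sum constraint, the round trip through the inverse, the irrelevance of the closure constant, and the lack of subcompositional coherence. The names `clr`, `clr_inv` and `closure` and the sample composition are illustrative assumptions.

```python
import math

def closure(z):
    """Rescale positive parts to sum to one."""
    total = sum(z)
    return [zi / total for zi in z]

def clr(x):
    """Centred log-ratio coefficients, Equation (4.3)."""
    logs = [math.log(xi) for xi in x]
    mean_log = sum(logs) / len(logs)   # logarithm of the geometric mean g(x)
    return [l - mean_log for l in logs]

def clr_inv(xi):
    """Inverse clr, Equation (4.4): exponentiate and close."""
    return closure([math.exp(v) for v in xi])

x = closure([0.1, 0.3, 0.6])
xi = clr(x)
x_back = clr_inv(xi)

# The same composition expressed in other units gives the same coefficients.
xi_rescaled = clr([100.0 * v for v in x])

# clr of the subcomposition formed by the first two parts.
xi_sub = clr(closure(x[:2]))
```

Here `xi_sub[0]` differs from `xi[0]` because the geometric mean of the two-part subcomposition is not that of the full composition.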

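A formal definition of the clr coefficients is the following.

Definition 4.1. For a composition $\mathbf{x} \in \mathcal{S}^D$, the clr coefficients are the components of $\boldsymbol{\xi} = [\xi_1, \xi_2, \ldots, \xi_D] = \mathrm{clr}(\mathbf{x})$, the unique vector satisfying

$$\mathbf{x} = \mathrm{clr}^{-1}(\boldsymbol{\xi}) = \mathcal{C}(\exp(\boldsymbol{\xi})) , \qquad \sum_{i=1}^{D} \xi_i = 0 .$$

The $i$-th clr coefficient is

$$\xi_i = \ln\frac{x_i}{g(\mathbf{x})} ,$$

where $g(\mathbf{x})$ is the geometric mean of the components of $\mathbf{x}$.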


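Although the clr coefficients are not coordinates with respect to a basis of the simplex, they have very important properties. Among them the translation of operations and metrics from the simplex into the real space deserves special attention. Denote ordinary distance, norm and inner product in real space (here $\mathbb{R}^{D}$, where the clr vectors live) by $d(\cdot, \cdot)$, $\|\cdot\|$, and $\langle \cdot, \cdot \rangle$ respectively. The following property holds.

Property 4.1. Consider $\mathbf{x}_k \in \mathcal{S}^D$ and real constants $\alpha$, $\beta$; then

$$\mathrm{clr}(\alpha \odot \mathbf{x}_1 \oplus \beta \odot \mathbf{x}_2) = \alpha \cdot \mathrm{clr}(\mathbf{x}_1) + \beta \cdot \mathrm{clr}(\mathbf{x}_2) ;$$

$$\langle \mathbf{x}_1, \mathbf{x}_2 \rangle_a = \langle \mathrm{clr}(\mathbf{x}_1), \mathrm{clr}(\mathbf{x}_2) \rangle ; \tag{4.5}$$

$$\|\mathbf{x}_1\|_a = \|\mathrm{clr}(\mathbf{x}_1)\| , \qquad d_a(\mathbf{x}_1, \mathbf{x}_2) = d(\mathrm{clr}(\mathbf{x}_1), \mathrm{clr}(\mathbf{x}_2)) .$$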

4.4 Orthonormal coordinates

Omitting one vector of the generating system given in Equation (4.2), a basis is obtained. For example, omitting $\mathbf{w}_D$ results in $\{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_{D-1}\}$. This basis is not orthonormal, as can be shown by computing the inner product of any two of its vectors. But a new basis, orthonormal with respect to the inner product, can be readily obtained using the well-known Gram-Schmidt procedure (Egozcue et al., 2003). The basis thus obtained will be just one out of the infinitely many orthonormal bases which can be defined in any Euclidean space. Therefore, it is convenient to study their general characteristics.

Let $\{\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_{D-1}\}$ be a generic orthonormal basis of the simplex $\mathcal{S}^D$ and consider the $(D-1, D)$-matrix $\Psi$ whose rows are $\mathrm{clr}(\mathbf{e}_i)$. An orthonormal basis satisfies that $\langle \mathbf{e}_i, \mathbf{e}_j \rangle_a = \delta_{ij}$ ($\delta_{ij}$ is the Kronecker delta, which is null for $i \neq j$, and one whenever $i = j$). This can be expressed using (4.5),

$$\langle \mathbf{e}_i, \mathbf{e}_j \rangle_a = \langle \mathrm{clr}(\mathbf{e}_i), \mathrm{clr}(\mathbf{e}_j) \rangle = \delta_{ij} .$$

It implies that the $(D-1, D)$-matrix $\Psi$ satisfies $\Psi \Psi' = I_{D-1}$, where $I_{D-1}$ is the identity matrix of dimension $D-1$. When the product of these matrices is reversed, then $\Psi' \Psi = I_D - (1/D) \mathbf{1}'_D \mathbf{1}_D$, with $I_D$ the identity matrix of dimension $D$, and $\mathbf{1}_D$ a $D$-row-vector of ones; note this is a matrix of rank $D-1$. The compositions of the basis are recovered from $\Psi$ using $\mathrm{clr}^{-1}$ in each row of the matrix. Recall that these rows of $\Psi$ also add up to 0 because they are clr coefficients (see Definition 4.1).
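The two matrix identities can be verified on a small case. The sketch below uses one possible orthonormal basis for $D = 3$, hard-coded through its clr matrix; this particular $\Psi$ is an illustrative choice, and the helper names `matmul`, `transpose` and `clr_inv` are assumptions.

```python
import math

D = 3
# clr coefficients of one possible orthonormal basis of S^3 (rows sum to 0).
PSI = [
    [1 / math.sqrt(2), -1 / math.sqrt(2), 0.0],
    [1 / math.sqrt(6), 1 / math.sqrt(6), -2 / math.sqrt(6)],
]

def matmul(a, b):
    """Plain matrix product of two lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]

def transpose(a):
    return [list(col) for col in zip(*a)]

def clr_inv(xi):
    """Recover a composition from clr coefficients."""
    expo = [math.exp(v) for v in xi]
    total = sum(expo)
    return [v / total for v in expo]

gram = matmul(PSI, transpose(PSI))       # expected: identity of size D-1
reversed_product = matmul(transpose(PSI), PSI)   # expected: I_D - (1/D) 1'1
expected = [[(1.0 if i == j else 0.0) - 1.0 / D for j in range(D)]
            for i in range(D)]
row_sums = [sum(row) for row in PSI]
basis = [clr_inv(row) for row in PSI]    # the compositions e_1, e_2
```

The list `basis` holds the compositions of the basis, obtained by applying the inverse clr to each row of `PSI`.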

Once an orthonormal basis has been chosen, a composition $\mathbf{x} \in \mathcal{S}^D$ is expressed as

$$\mathbf{x} = \bigoplus_{i=1}^{D-1} x^*_i \odot \mathbf{e}_i , \qquad x^*_i = \langle \mathbf{x}, \mathbf{e}_i \rangle_a , \tag{4.6}$$

where $\mathbf{x}^* = \left[ x^*_1, x^*_2, \ldots, x^*_{D-1} \right]$ is the vector of coordinates of $\mathbf{x}$ with respect to the selected basis. The function $\mathrm{ilr} : \mathcal{S}^D \to \mathbb{R}^{D-1}$, assigning the coordinates $\mathbf{x}^*$ to $\mathbf{x}$, has been called the ilr (isometric log-ratio) transformation, which is an isometric isomorphism of vector spaces. For simplicity, sometimes this function is also denoted by $h$, i.e. $\mathrm{ilr} \equiv h$, and also the asterisk ($^*$) is used to denote coordinates if convenient. The following properties hold.

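Property 4.2. Consider $\mathbf{x}_k \in \mathcal{S}^D$ and real constants $\alpha$, $\beta$; then

$$h(\alpha \odot \mathbf{x}_1 \oplus \beta \odot \mathbf{x}_2) = \alpha \cdot h(\mathbf{x}_1) + \beta \cdot h(\mathbf{x}_2) = \alpha \cdot \mathbf{x}^*_1 + \beta \cdot \mathbf{x}^*_2 ;$$

$$\langle \mathbf{x}_1, \mathbf{x}_2 \rangle_a = \langle h(\mathbf{x}_1), h(\mathbf{x}_2) \rangle = \langle \mathbf{x}^*_1, \mathbf{x}^*_2 \rangle ;$$

$$\|\mathbf{x}_1\|_a = \|h(\mathbf{x}_1)\| = \|\mathbf{x}^*_1\| , \qquad d_a(\mathbf{x}_1, \mathbf{x}_2) = d(h(\mathbf{x}_1), h(\mathbf{x}_2)) = d(\mathbf{x}^*_1, \mathbf{x}^*_2) .$$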

The main difference between Property 4.1 for clr and Property 4.2 for ilr is that the former refers to vectors of coefficients in $\mathbb{R}^D$, whereas the latter deals with vectors of coordinates in $\mathbb{R}^{D-1}$, thus matching the actual dimension of $\mathcal{S}^D$.

Taking into account Properties 4.1 and 4.2, and using the clr image matrix of the basis, $\Psi$, the coordinates of a composition $\mathbf{x}$ can be expressed in a compact way. As written in (4.6), a coordinate is an Aitchison inner product, and it can be expressed as an ordinary inner product of the clr coefficients. Grouping all coordinates in a vector

$$\mathbf{x}^* = \mathrm{ilr}(\mathbf{x}) = h(\mathbf{x}) = \mathrm{clr}(\mathbf{x}) \cdot \Psi' , \tag{4.7}$$

a simple matrix product is obtained.

Inversion of ilr, i.e. recovering the composition from its coordinates, corresponds to Equation (4.6). In fact, taking clr coefficients in both sides of (4.6) and taking into account Property 4.1,

$$\mathrm{clr}(\mathbf{x}) = \mathbf{x}^* \Psi , \qquad \mathbf{x} = \mathcal{C}(\exp(\mathbf{x}^* \Psi)) . \tag{4.8}$$

A suitable algorithm to recover $\mathbf{x}$ from its coordinates $\mathbf{x}^*$ consists of the following steps: (i) construct the clr-matrix of the basis, $\Psi$; (ii) carry out the matrix product $\mathbf{x}^* \Psi$; and (iii) apply $\mathrm{clr}^{-1}$ to obtain $\mathbf{x}$.
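The three steps can be sketched as follows, together with the forward transformation of Equation (4.7). The example assumes $D = 3$ and one particular orthonormal basis, hard-coded through its clr matrix; the function names `ilr` and `ilr_inv` and the sample compositions are illustrative.

```python
import math

# Step (i): clr-matrix of one possible orthonormal basis of S^3.
PSI = [
    [1 / math.sqrt(2), -1 / math.sqrt(2), 0.0],
    [1 / math.sqrt(6), 1 / math.sqrt(6), -2 / math.sqrt(6)],
]

def closure(z):
    total = sum(z)
    return [zi / total for zi in z]

def clr(x):
    logs = [math.log(xi) for xi in x]
    mean_log = sum(logs) / len(logs)
    return [l - mean_log for l in logs]

def ilr(x):
    """Equation (4.7): x* = clr(x) Psi'."""
    c = clr(x)
    return [sum(ci * pij for ci, pij in zip(c, row)) for row in PSI]

def ilr_inv(coords):
    """Equation (4.8): x = C(exp(x* Psi))."""
    D = len(PSI[0])
    # Step (ii): the product x* Psi gives the clr coefficients.
    c = [sum(coords[i] * PSI[i][j] for i in range(len(PSI)))
         for j in range(D)]
    # Step (iii): apply the inverse clr.
    return closure([math.exp(v) for v in c])

x = closure([0.1, 0.3, 0.6])
y = closure([0.5, 0.2, 0.3])
x_star, y_star = ilr(x), ilr(y)
x_back = ilr_inv(x_star)

# Distances agree in coordinates (R^2) and in clr coefficients (R^3).
d_coords = math.dist(x_star, y_star)
d_clr = math.dist(clr(x), clr(y))
```

The coordinates have $D - 1 = 2$ components, while the clr vectors have three; both give the same Aitchison distance.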

There are several ways to define orthonormal bases in the simplex. The main criterion for the selection of an orthonormal basis is that it enhances the interpretability of the representation in coordinates. For instance, when performing principal component analysis an orthogonal basis is selected so that the first coordinate (principal component) represents the direction of maximum variability, etc. Particular cases deserving our attention are those bases linked to a sequential binary partition of the compositional vector (Egozcue and Pawlowsky-Glahn, 2005). The main interest of such bases is that they are easily interpreted in terms of grouped parts of the composition. The Cartesian coordinates of a composition in such a basis are called balances and the compositions of the basis balancing elements. A sequential binary partition is a hierarchy of the parts of a composition. In the first order of the hierarchy, all parts are split into two groups. In the following steps, each group is in turn split into two groups, and the process continues until all groups have a single part, as illustrated in Table 4.1.

Table 4.1: Example of sign matrix, used to encode a sequential binary partition and build an orthonormal basis. The lower part of the table shows the matrix Ψ of the basis.

order   x1       x2       x3       x4       x5       x6       r   s
1       +1       +1       −1       −1       +1       +1       4   2
2       +1       −1        0        0       −1       −1       1   3
3        0       +1        0        0       −1       −1       1   2
4        0        0        0        0       +1       −1       1   1
5        0        0       −1       +1        0        0       1   1

1       +1/√12   +1/√12   −1/√3    −1/√3    +1/√12   +1/√12
2       +√3/2    −1/√12    0        0       −1/√12   −1/√12
3        0       +√2/√3    0        0       −1/√6    −1/√6
4        0        0        0        0       +1/√2    −1/√2
5        0        0       −1/√2    +1/√2     0        0

For each order of the partition, one can define the balance between the two sub-groups formed at that level: if $i_1, i_2, \ldots, i_r$ are the $r$ parts of the first sub-group (coded by $+1$), and $j_1, j_2, \ldots, j_s$ the $s$ parts of the second (coded by $-1$), the balance is defined as the normalised logratio of the geometric mean of each group of parts:

$$b = \sqrt{\frac{rs}{r+s}} \, \ln \frac{(x_{i_1} x_{i_2} \cdots x_{i_r})^{1/r}}{(x_{j_1} x_{j_2} \cdots x_{j_s})^{1/s}} = \ln \left[ (x_{i_1} x_{i_2} \cdots x_{i_r})^{a_+} \, (x_{j_1} x_{j_2} \cdots x_{j_s})^{a_-} \right] . \tag{4.9}$$

This means that, for the $i$-th balance, the parts receive a weight of either

$$a_+ = +\frac{1}{r} \sqrt{\frac{rs}{r+s}} , \qquad a_- = -\frac{1}{s} \sqrt{\frac{rs}{r+s}} \qquad \text{or} \qquad a_0 = 0 , \tag{4.10}$$

$a_+$ for those in the numerator, $a_-$ for those in the denominator, and $a_0$ for those not involved in that splitting. The balance is then

$$b_i = \sum_{j=1}^{D} a_{ij} \ln x_j ,$$

where $a_{ij}$ equals $a_+$ if the code, at the $i$-th order partition, is $+1$ for the $j$-th part; the value is $a_-$ if the code is $-1$; and $a_0 = 0$ if the code is null, using the values of $r$ and $s$ at the $i$-th order partition. Note that the matrix with entries $a_{ij}$ is just the matrix $\Psi$, as shown in the lower part of Table 4.1.
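The construction of $\Psi$ from a sign matrix can be sketched in a few lines. The example below encodes the sign codes of Table 4.1, applies the weights of Equation (4.10) row by row, and computes the balances of a made-up six-part composition. The names `psi_from_signs` and `balances` and the composition `x` are illustrative assumptions.

```python
import math

# Sign codes of the sequential binary partition in Table 4.1.
SIGNS = [
    [+1, +1, -1, -1, +1, +1],
    [+1, -1,  0,  0, -1, -1],
    [ 0, +1,  0,  0, -1, -1],
    [ 0,  0,  0,  0, +1, -1],
    [ 0,  0, -1, +1,  0,  0],
]

def psi_from_signs(signs):
    """Turn each row of sign codes into a row of weights a_ij."""
    psi = []
    for row in signs:
        r = sum(1 for c in row if c > 0)   # parts coded +1
        s = sum(1 for c in row if c < 0)   # parts coded -1
        norm = math.sqrt(r * s / (r + s))
        a_plus, a_minus = norm / r, -norm / s
        psi.append([a_plus if c > 0 else a_minus if c < 0 else 0.0
                    for c in row])
    return psi

def balances(x, psi):
    """b_i = sum_j a_ij ln x_j for every order i of the partition."""
    logs = [math.log(v) for v in x]
    return [sum(a * l for a, l in zip(row, logs)) for row in psi]

PSI = psi_from_signs(SIGNS)
x = [0.10, 0.25, 0.05, 0.15, 0.30, 0.15]   # hypothetical composition
b = balances(x, PSI)

# First balance written directly as in Equation (4.9), with r = 4, s = 2.
b1_direct = math.sqrt(4 * 2 / (4 + 2)) * math.log(
    (x[0] * x[1] * x[4] * x[5]) ** (1 / 4) / (x[2] * x[3]) ** (1 / 2))
```

Because every row of weights sums to zero, applying them to the logarithms of the parts gives the same result as applying them to the clr coefficients.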


Example 4.1. In Egozcue et al. (2003) an orthonormal basis of the simplex was obtained using a Gram-Schmidt technique. It corresponds to the sequential binary partition shown in Table 4.2.

Table 4.2: Example of sign matrix for D = 5, used to encode a sequential binary partition in a standard way. The lower part of the table shows the matrix Ψ of the basis.

level   x1       x2       x3       x4       x5       r   s
1       +1       +1       +1       +1       −1       4   1
2       +1       +1       +1       −1        0       3   1
3       +1       +1       −1        0        0       2   1
4       +1       −1        0        0        0       1   1

1       +1/√20   +1/√20   +1/√20   +1/√20   −2/√5
2       +1/√12   +1/√12   +1/√12   −√3/√4    0
3       +1/√6    +1/√6    −√2/√3    0        0
4       +1/√2    −1/√2     0        0        0

The main feature is that the entries of the $\Psi$ matrix can be easily expressed as

$$\Psi_{ij} = a_{ij} = +\sqrt{\frac{1}{(D-i)(D-i+1)}} , \quad j \le D-i ,$$

$$\Psi_{ij} = a_{ij} = -\sqrt{\frac{D-i}{D-i+1}} , \quad j = D-i+1 ;$$

and $\Psi_{ij} = 0$ otherwise. This matrix is closely related to the so-called Helmert matrices.
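A short sketch of this closed form follows. It reads the negative entry of row $i$ as sitting in column $D - i + 1$, which is what the lower part of Table 4.2 shows, and checks the result against the table for $D = 5$. The function name `psi_standard` is an illustrative choice.

```python
import math

def psi_standard(D):
    """Rows i = 1..D-1 of the Psi matrix for the partition of Table 4.2."""
    psi = []
    for i in range(1, D):
        m = D - i                       # number of parts coded +1
        row = []
        for j in range(1, D + 1):
            if j <= m:
                row.append(1 / math.sqrt(m * (m + 1)))
            elif j == m + 1:
                row.append(-math.sqrt(m / (m + 1)))
            else:
                row.append(0.0)
        psi.append(row)
    return psi

PSI5 = psi_standard(5)

# Gram matrix Psi Psi', expected to be the identity of size D-1.
gram = [[sum(p * q for p, q in zip(PSI5[i], PSI5[j])) for j in range(4)]
        for i in range(4)]
```

The same function can be called with any $D \ge 2$; only the case $D = 5$ is compared with the table here.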

The interpretation of balances relies on some of their properties. The first one is the expression itself, especially when using geometric means in the numerator and denominator as in

$$b = \sqrt{\frac{rs}{r+s}} \, \ln \frac{(x_1 \cdots x_r)^{1/r}}{(x_{r+1} \cdots x_D)^{1/s}} .$$

The geometric means are central values of the parts in each group of parts; their ratio measures the relative weight of each group; the logarithm provides the appropriate scale; and the square root coefficient is a normalising constant which allows different balances to be compared numerically. A positive balance means that, in (geometric) mean, the group of parts in the numerator has more weight in the composition than the group in the denominator (and conversely for negative balances).

A second interpretative element is related to the intuitive idea of balance. Imagine that in an election, the parties have been divided into two groups, the left and the right wing ones (there is more than one party in each wing). If, from a journal, you get only the percentages within each group, you are unable to know which wing, and obviously which party, has won the elections. You probably are going to ask for the balance between the two wings as the information you need to complete the actual state of the elections. The balance, as defined here, permits you to complete the information. The balance is the remaining relative information about the elections once the information within the two wings has been removed. To be more precise, assume that the parties are six and the composition of the votes is $\mathbf{x} \in \mathcal{S}^6$; assume the left wing contested with 4 parties represented by the group of parts $\{x_1, x_2, x_5, x_6\}$ and only two parties correspond to the right wing $\{x_3, x_4\}$. Consider the sequential binary partition in Table 4.1. The first partition just separates the two wings and thus the balance informs us about the equilibrium between the two wings. If one leaves out this balance, the remaining balances inform us only about the left wing (balances 2, 3 and 4) and only about the right wing (balance 5). Therefore, to retain only balance 5 is equivalent to knowing the relative information within the subcomposition called right wing. Similarly, balances 2, 3 and 4 only inform about what happened within the left wing. The conclusion is that balance 1, the forgotten information in the journal, does not inform us about relations within the two wings: it only conveys information about the balance between the two groups representing the wings.

Many questions can be stated which can be handled easily using the balances. For instance, suppose we are interested in the relationships between the parties within the left wing and, consequently, we want to remove the information within the right wing. A traditional approach to this is to remove parts $x_3$ and $x_4$ and then close the remaining subcomposition. However, this is equivalent to projecting the composition of 6 parts orthogonally on the subspace associated with the left wing, which is easily done by setting $b_5 = 0$. If we do so, the obtained projected composition is

$$\mathbf{x}_{\mathrm{proj}} = \mathcal{C}[x_1, x_2, g(x_3, x_4), g(x_3, x_4), x_5, x_6] , \qquad g(x_3, x_4) = (x_3 x_4)^{1/2} ,$$

i.e. each part in the right wing has been substituted by the geometric mean within the right wing. This composition still has the information on the left-right balance, $b_1$. If we are also interested in removing it ($b_1 = 0$) the remaining information will be only that within the left-wing subcomposition, which is represented by the orthogonal projection

$$\mathbf{x}_{\mathrm{left}} = \mathcal{C}[x_1, x_2, g(x_1, x_2, x_5, x_6), g(x_1, x_2, x_5, x_6), x_5, x_6] ,$$

with $g(x_1, x_2, x_5, x_6) = (x_1 x_2 x_5 x_6)^{1/4}$. The conclusion is that the balances can be very useful to project compositions onto special subspaces just by retaining some balances and making other ones null.
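Both projections can be reproduced numerically. The sketch below builds $\Psi$ from the sign codes of Table 4.1, computes the balances of a made-up vote composition, sets $b_5$ (and then also $b_1$) to zero, and maps the result back to the simplex. The function names and the composition `x` are illustrative assumptions.

```python
import math

SIGNS = [
    [+1, +1, -1, -1, +1, +1],
    [+1, -1,  0,  0, -1, -1],
    [ 0, +1,  0,  0, -1, -1],
    [ 0,  0,  0,  0, +1, -1],
    [ 0,  0, -1, +1,  0,  0],
]

def closure(z):
    total = sum(z)
    return [zi / total for zi in z]

def psi_from_signs(signs):
    psi = []
    for row in signs:
        r = sum(1 for c in row if c > 0)
        s = sum(1 for c in row if c < 0)
        norm = math.sqrt(r * s / (r + s))
        psi.append([norm / r if c > 0 else -norm / s if c < 0 else 0.0
                    for c in row])
    return psi

def to_balances(x, psi):
    logs = [math.log(v) for v in x]
    return [sum(a * l for a, l in zip(row, logs)) for row in psi]

def from_balances(b, psi):
    """x = C(exp(b Psi)), i.e. the inverse ilr for this basis."""
    D = len(psi[0])
    c = [sum(b[i] * psi[i][j] for i in range(len(psi))) for j in range(D)]
    return closure([math.exp(v) for v in c])

PSI = psi_from_signs(SIGNS)
x = closure([0.10, 0.25, 0.05, 0.15, 0.30, 0.15])   # hypothetical votes
b = to_balances(x, PSI)

# Remove the information within the right wing: b5 = 0.
x_proj = from_balances(b[:4] + [0.0], PSI)
g34 = math.sqrt(x[2] * x[3])
expected_proj = closure([x[0], x[1], g34, g34, x[4], x[5]])

# Remove also the left-right balance: b1 = 0 and b5 = 0.
x_left = from_balances([0.0] + b[1:4] + [0.0], PSI)
g_left = (x[0] * x[1] * x[4] * x[5]) ** 0.25
expected_left = closure([x[0], x[1], g_left, g_left, x[4], x[5]])
```

In both cases the composition rebuilt from the modified balances coincides with the closed vector in which the right-wing parts are replaced by the stated geometric mean.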


4.5 Working in coordinates

Coordinates with respect to an orthonormal basis in a linear vector space obey the standard rules of operation. As a consequence, perturbation in $\mathcal{S}^D$ is equivalent to translation in real space, and power transformation in $\mathcal{S}^D$ is equivalent to multiplication. Thus, if we consider the vector of coordinates $h(\mathbf{x}) = \mathbf{x}^* \in \mathbb{R}^{D-1}$ of a compositional vector $\mathbf{x} \in \mathcal{S}^D$ with respect to an arbitrary orthonormal basis, it holds (Property 4.2)

$$h(\mathbf{x} \oplus \mathbf{y}) = h(\mathbf{x}) + h(\mathbf{y}) = \mathbf{x}^* + \mathbf{y}^* , \qquad h(\alpha \odot \mathbf{x}) = \alpha \cdot h(\mathbf{x}) = \alpha \cdot \mathbf{x}^* , \tag{4.11}$$

and we can think about perturbation as having the same properties in the simplex as translation has in real space, and of the power transformation as having the same properties as multiplication.

Furthermore,

d

a

(x, y) = d(h(x), h(y)) = d(x

∗

, y

∗

),

where d stands for t he usual Euclidean distance in real space. This means that, when

performing analysis of compositional data, results that could be obta ined using com-

positions and the Aitchison geometry are exactly the same as those obtained using the

coordinates of the compositions and using the ordinary Euclidean geometry. This lat ter

possibility reduces the computations to t he ordinary o perations in real spaces thus fa-

cilitating the applied procedures. The duality of the representation of compositions, in

the simplex a nd by coordinates, introduces a rich framework where both representations

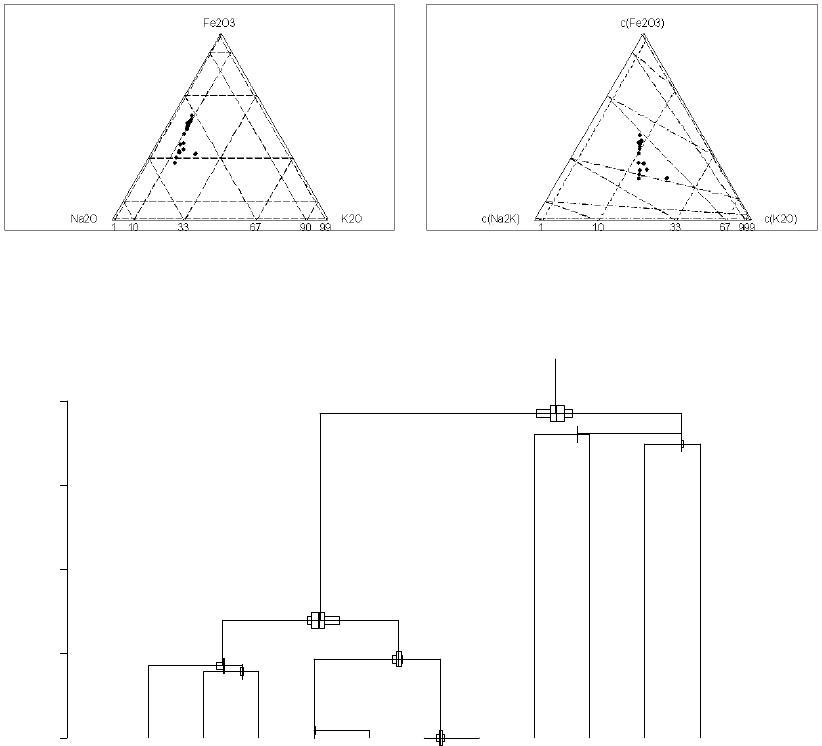

can be interpreted to extract conclusions f r om the analysis (see Figures 4.1, 4.2, 4.3,

and 4.4, for illustration). The price is that the basis selected for representation should

be carefully selected for an enhanced interpretation.

Working in coordinates can also be done in a blind way, just selecting a default basis and coordinates and, when the results in coordinates are obtained, translating the results back into the simplex for interpretation. This blind strategy, although acceptable, hides from the analyst features of the analysis that may be relevant. For instance, when detecting a linear dependence of compositional data on an external covariate, data can be expressed in coordinates and then the dependence estimated using standard linear regression. Back in the simplex, data can be plotted with the estimated regression line in a ternary diagram. The procedure is completely acceptable but the visual picture of the residuals and a possible non-linear trend in them can be hidden or distorted in the ternary diagram. A plot of the fitted line and the data in coordinates may reveal new interpretable features.

Figure 4.1: Perturbation of a segment in $\mathcal{S}^3$ (left) and in coordinates (right).

Figure 4.2: Powering of a vector in $\mathcal{S}^3$ (left) and in coordinates (right).

Figure 4.3: Circles and ellipses in $\mathcal{S}^3$ (left) and in coordinates (right).

Figure 4.4: Couples of parallel lines in $\mathcal{S}^3$ (left) and in coordinates (right).

There is one thing that is crucial in the proposed approach: no zero values are allowed, as neither division by zero is admissible, nor taking the logarithm of zero. We are not going to discuss this subject here. Methods on how to approach the problem have been discussed by Aitchison (1986), Fry et al. (1996), Martín-Fernández et al. (2000), Martín-Fernández (2001), Aitchison and Kay (2003), Bacon-Shone (2003), Martín-Fernández et al. (2003) and Martín-Fernández et al. (2003).

4.6 Additive log-ratio coordinates

If in Equation (4.3) one of the parts, e.g. the last, is taken as denominator, then one coefficient is always 0, and we can suppress the associated vector. Thus, the previous generating system becomes a basis, taking the other $(D-1)$ vectors, e.g. $\{\mathbf{w}_1, \mathbf{w}_2, \ldots, \mathbf{w}_{D-1}\}$. Then, any vector $\mathbf{x} \in \mathcal{S}^D$ can be written

$$\mathbf{x} = \bigoplus_{i=1}^{D-1} \ln\frac{x_i}{x_D} \odot \mathbf{w}_i = \ln\frac{x_1}{x_D} \odot [e, 1, \ldots, 1, 1] \oplus \cdots \oplus \ln\frac{x_{D-1}}{x_D} \odot [1, 1, \ldots, e, 1] .$$

The coordinates correspond to the well known additive log-ratio transformation (alr) introduced by (Aitchison, 1986). We will denote by alr the transformation that gives the expression of a composition in additive log-ratio coordinates

$$\mathrm{alr}(\mathbf{x}) = \left[ \ln\frac{x_1}{x_D}, \ln\frac{x_2}{x_D}, \ldots, \ln\frac{x_{D-1}}{x_D} \right] = \mathbf{y} .$$

Note that the alr transformation is not symmetrical in the components. But the essential problem with alr coordinates is the non-isometric character of this transformation. In fact, they are coordinates in an oblique basis, something that affects distances if the usual Euclidean distance is computed from the alr coordinates. This approach is frequent in many applied sciences and should be avoided (see for example (Albarède, 1995), p. 42).
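The non-isometric character can be seen on a small example. The sketch below compares the Aitchison distance (computed through clr coefficients) with the Euclidean distance between alr vectors, using either the last or the first part as denominator. The function names, the `denom` argument and the two compositions are illustrative assumptions.

```python
import math

def closure(z):
    total = sum(z)
    return [zi / total for zi in z]

def clr(x):
    logs = [math.log(xi) for xi in x]
    mean_log = sum(logs) / len(logs)
    return [l - mean_log for l in logs]

def alr(x, denom=-1):
    """Additive log-ratio coordinates with part `denom` as denominator."""
    d = denom % len(x)
    return [math.log(xi / x[d]) for k, xi in enumerate(x) if k != d]

def alr_inv(y):
    """Inverse alr when the last part was used as denominator."""
    return closure([math.exp(v) for v in y] + [1.0])

x = closure([0.1, 0.3, 0.6])
y = closure([0.5, 0.2, 0.3])

d_aitchison = math.dist(clr(x), clr(y))
d_alr_last = math.dist(alr(x), alr(y))              # denominator x_D
d_alr_first = math.dist(alr(x, 0), alr(y, 0))       # denominator x_1
x_back = alr_inv(alr(x))
```

The alr is still invertible, so no information is lost; what changes is the distance, which in addition depends on the part chosen as denominator.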

4.7 Simplicial matrix notation

Many operations in real spaces are expressed in matrix notation. Since the simplex is a Euclidean space, matrix notations may also be useful. However, in this framework a vector of real constants cannot be considered in the simplex although in the real space they are readily identified. This produces two kinds of matrix products which are introduced in this section. The first is simply the expression of a perturbation-linear combination of compositions which appears as a power-multiplication of a real vector by a compositional matrix whose rows are in the simplex. The second one is the expression of a linear transformation in the simplex: a composition is transformed by a matrix, involving perturbation and powering, to obtain a new composition. The real matrix implied in this case is not a general one but when expressed in coordinates it is completely general.

Perturbation-linear combination of compositions

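For a row vector of $\ell$ scalars $\mathbf{a} = [a_1, a_2, \ldots, a_\ell]$ and an array of row vectors $\mathbf{V} = (\mathbf{v}_1, \mathbf{v}_2, \ldots, \mathbf{v}_\ell)'$, i.e. an $(\ell, D)$-matrix,

$$\mathbf{a} \odot \mathbf{V} = [a_1, a_2, \ldots, a_\ell] \odot \begin{pmatrix} \mathbf{v}_1 \\ \mathbf{v}_2 \\ \vdots \\ \mathbf{v}_\ell \end{pmatrix} = [a_1, a_2, \ldots, a_\ell] \odot \begin{pmatrix} v_{11} & v_{12} & \cdots & v_{1D} \\ v_{21} & v_{22} & \cdots & v_{2D} \\ \vdots & \vdots & \ddots & \vdots \\ v_{\ell 1} & v_{\ell 2} & \cdots & v_{\ell D} \end{pmatrix} = \bigoplus_{i=1}^{\ell} a_i \odot \mathbf{v}_i .$$

The components of this matrix product are

$$\mathbf{a} \odot \mathbf{V} = \mathcal{C} \left[ \prod_{j=1}^{\ell} v_{j1}^{a_j}, \prod_{j=1}^{\ell} v_{j2}^{a_j}, \ldots, \prod_{j=1}^{\ell} v_{jD}^{a_j} \right] .$$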
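As a small check, the component formula can be compared with the perturbation-linear combination built step by step. The sketch below is written under the usual definitions of perturbation and powering; the function name `power_multiply` and the numbers in `a` and `V` are made up for illustration.

```python
import math

def closure(z):
    total = sum(z)
    return [zi / total for zi in z]

def perturb(x, y):
    return closure([xi * yi for xi, yi in zip(x, y)])

def power(alpha, x):
    return closure([xi ** alpha for xi in x])

def power_multiply(a, V):
    """Component formula: k-th part is prod_j V[j][k] ** a[j], then closure."""
    D = len(V[0])
    return closure([math.prod(V[j][k] ** a[j] for j in range(len(a)))
                    for k in range(D)])

a = [2.0, -0.5]                       # hypothetical scalars
V = [closure([0.2, 0.3, 0.5]),        # hypothetical compositions (rows)
     closure([0.6, 0.1, 0.3])]

direct = power_multiply(a, V)

# Same result as (a_1 (.) v_1) (+) (a_2 (.) v_2), built step by step.
stepwise = perturb(power(a[0], V[0]), power(a[1], V[1]))
```

Intermediate closures in the step-by-step version do not change the result, in line with Equation (3.1).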

In coordinates this simplicial matrix product takes the form of a linear combination of the coordinate vectors. In fact, if $h$ is the function assigning the coordinates,

$$h(\mathbf{a} \odot \mathbf{V}) = h\left( \bigoplus_{i=1}^{\ell} a_i \odot \mathbf{v}_i \right) = \sum_{i=1}^{\ell} a_i \, h(\mathbf{v}_i) .$$

Example 4.2. A composition in $\mathcal{S}^D$ can be expressed as a perturbation-linear combination of the elements of the basis $\mathbf{e}_i$, $i = 1, 2, \ldots, D-1$, as in Equation (4.6). Consider the $(D-1, D)$-matrix $\mathbf{E} = (\mathbf{e}_1, \mathbf{e}_2, \ldots, \mathbf{e}_{D-1})'$ and the vector of coordinates $\mathbf{x}^* = \mathrm{ilr}(\mathbf{x})$. Equation (4.6) can be re-written as

\[
\mathbf{x} = \mathbf{x}^* \odot \mathbf{E} .
\]
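A small numerical check of this example, again for $D = 3$, is sketched below. The basis stored in `PSI`, the construction of the rows of `E` as $\mathcal{C}(\exp(\boldsymbol{\psi}_i))$, and all helper names are assumptions of the sketch.

```python
import math

def closure(parts):
    total = sum(parts)
    return [p / total for p in parts]

def clr(x):
    logs = [math.log(p) for p in x]
    mean = sum(logs) / len(logs)
    return [v - mean for v in logs]

# Assumed clr matrix of an orthonormal basis of S^3.
PSI = [[1 / math.sqrt(2), -1 / math.sqrt(2), 0.0],
       [1 / math.sqrt(6), 1 / math.sqrt(6), -2 / math.sqrt(6)]]

# Basis compositions e_i = C(exp(psi_i)), stacked as the rows of E.
E = [closure([math.exp(v) for v in row]) for row in PSI]

def ilr(x):
    c = clr(x)
    return [sum(c[k] * row[k] for k in range(len(c))) for row in PSI]

def pert_lin_comb(a, V):
    return closure([
        math.prod(V[j][k] ** a[j] for j in range(len(V)))
        for k in range(len(V[0]))
    ])

x = [0.2, 0.3, 0.5]
x_star = ilr(x)                    # coordinates of x
x_back = pert_lin_comb(x_star, E)  # x* (.) E
print(x_back)
```

Up to rounding, `x_back` should reproduce `x`, which is the content of $\mathbf{x} = \mathbf{x}^* \odot \mathbf{E}$.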

Perturbation-linear transformation of $\mathcal{S}^D$: endomorphisms

Consider a row vector of coordinates $\mathbf{x}^* \in \mathbb{R}^{D-1}$ and a general $(D-1, D-1)$-matrix $\mathbf{A}^*$. In the real space setting, $\mathbf{y}^* = \mathbf{x}^* \mathbf{A}^*$ expresses an endomorphism, obviously linear in the real sense. Given the isometric isomorphism of the real space of coordinates with the simplex, the $\mathbf{A}^*$ endomorphism has an expression in the simplex. Taking $\mathrm{ilr}^{-1} = h^{-1}$ in the expression of the real endomorphism and using Equation (4.8),

\[
\mathbf{y} = \mathcal{C}(\exp[\mathbf{x}^* \mathbf{A}^* \boldsymbol{\Psi}]) = \mathcal{C}(\exp[\mathrm{clr}(\mathbf{x}) \boldsymbol{\Psi}' \mathbf{A}^* \boldsymbol{\Psi}]) \qquad (4.12)
\]

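where $\boldsymbol{\Psi}$ is the clr matrix of the selected basis and the right-most member has been obtained applying Equation (4.7) to $\mathbf{x}^*$. The $(D, D)$-matrix $\mathbf{A} = \boldsymbol{\Psi}' \mathbf{A}^* \boldsymbol{\Psi}$ has entries

\[
a_{ij} = \sum_{k=1}^{D-1} \sum_{m=1}^{D-1} \Psi_{ki} \, \Psi_{mj} \, a^*_{km} , \qquad i, j = 1, 2, \ldots, D .
\]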

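Substituting $\mathrm{clr}(\mathbf{x})$ by its expression as a function of the logarithms of parts, the composition $\mathbf{y}$ is

\[
\mathbf{y} = \mathcal{C}\left[ \prod_{j=1}^{D} x_j^{a_{j1}},\ \prod_{j=1}^{D} x_j^{a_{j2}},\ \ldots,\ \prod_{j=1}^{D} x_j^{a_{jD}} \right] ,
\]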

which, taking into account that products and powers match the definitions of $\oplus$ and $\odot$, motivates the definition

\[
\mathbf{y} = \mathbf{x} \circ \mathbf{A} = \mathbf{x} \circ (\boldsymbol{\Psi}' \mathbf{A}^* \boldsymbol{\Psi}) , \qquad (4.13)
\]

where $\circ$ is the perturbation-matrix product representing an endomorphism in the simplex. This matrix product in the simplex should not be confused with that defined between a vector of scalars and a matrix of compositions and denoted by $\odot$.
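The two routes to $\mathbf{y}$, through coordinates as in Equation (4.12) and through the product $\mathbf{x} \circ \mathbf{A}$ of Equation (4.13), can be compared numerically. The following sketch assumes $D = 3$, an orthonormal basis with clr matrix `PSI`, and an arbitrary matrix `A_STAR`; these values and the helper names are illustrative assumptions only.

```python
import math

def closure(parts):
    total = sum(parts)
    return [p / total for p in parts]

def clr(x):
    logs = [math.log(p) for p in x]
    mean = sum(logs) / len(logs)
    return [v - mean for v in logs]

D = 3
# Assumed clr matrix of an orthonormal basis of S^3.
PSI = [[1 / math.sqrt(2), -1 / math.sqrt(2), 0.0],
       [1 / math.sqrt(6), 1 / math.sqrt(6), -2 / math.sqrt(6)]]
# Arbitrary (D-1, D-1) matrix acting on coordinates (illustrative values).
A_STAR = [[1.0, 2.0],
          [0.5, -1.0]]

# A = Psi' A* Psi, i.e. a_ij = sum_k sum_m Psi_ki Psi_mj a*_km.
A = [[sum(PSI[k][i] * PSI[m][j] * A_STAR[k][m]
          for k in range(D - 1) for m in range(D - 1))
      for j in range(D)] for i in range(D)]

def endo(x, A):
    """x o A: the i-th part is C[prod_j x_j ** a_ji]."""
    return closure([math.prod(x[j] ** A[j][i] for j in range(D))
                    for i in range(D)])

x = [0.2, 0.3, 0.5]
y = endo(x, A)

# Same map through coordinates: y = C(exp[x* A* Psi]).
c = clr(x)
x_star = [sum(c[k] * PSI[m][k] for k in range(D)) for m in range(D - 1)]
y_star = [sum(x_star[k] * A_STAR[k][m] for k in range(D - 1))
          for m in range(D - 1)]
y_coord = closure([math.exp(sum(y_star[m] * PSI[m][i] for m in range(D - 1)))
                   for i in range(D)])
print(y, y_coord)
```

The two results should coincide up to rounding. Using the raw parts $x_j$ instead of $\mathrm{clr}(\mathbf{x})$ in `endo` is harmless because each column of $\mathbf{A}$ built this way adds to zero, so the geometric-mean factor cancels.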

An important conclusion is that endomorphisms in the simplex are represented by matrices with a peculiar structure given by $\mathbf{A} = \boldsymbol{\Psi}' \mathbf{A}^* \boldsymbol{\Psi}$, which have some remarkable properties:


(a) it is a $(D, D)$ real matrix;

(b) each row and each column of $\mathbf{A}$ adds to 0;

(c) $\mathrm{rank}(\mathbf{A}) = \mathrm{rank}(\mathbf{A}^*)$; in particular, when $\mathbf{A}^*$ is full-rank, $\mathrm{rank}(\mathbf{A}) = D - 1$;

(d) the identity endomorphism corresponds to $\mathbf{A}^* = \mathbf{I}_{D-1}$, the identity in $\mathbb{R}^{D-1}$, and

to A = Ψ

′

Ψ = I

D

−(1/D)1

′

D